Volume 11, No. 3, Art. 10 – September 2010

Balanced Evaluation: Monitoring the "Success" of a Knowledge Management Project

Patricia Wolf

Abstract: This article reports on the course and the findings of a two-year longitudinal study aimed at investigating the impact of a knowledge management project on an organization's communication and decision structures. The knowledge management project introduced cross-functional Communities of Practice into a division of a large automotive company. The author-researcher applied a multi-perspective and multi-method research approach called "balanced evaluation" in order to unravel changes in organizational knowledge and decision patterns. This system-theoretic approach to the evaluation of transformation processes is described and compared to more traditional approaches; findings are presented and discussed.

Key words: communities of practice; knowledge management; participant observation; interviews; group discussions; balanced evaluation

Table of Contents

1. Introduction

2. The Implementation of Knowledge Management into a Social System

3. Approaches to Success Monitoring

3.1 What organizations mean by success monitoring: Traditional approaches

3.2 Why systemic researchers talk about "evaluation" rather than "success monitoring"

3.3 A systemic research design: Balanced evaluation

4. The Case Study—Data Gathering, Analysis and Findings

4.1 The conception phase

4.2 The implementation phase

4.2.1 Content criteria

4.2.2 Perspectives and actors

4.2.3 Processes

4.2.4 Horizons

4.3 The improvement phase

4.3.1 Content criteria

4.3.2 Perspectives and actors

4.3.3 Processes

4.3.4 Horizons

4.4 The reflection phase

4.4.1 Content criteria

4.4.2 Perspectives and actors

4.4.3 Processes

4.4.4 Horizons

4.5 Main insights

5. Discussion

1. Introduction

In 1999, a division of a large automotive company decided to set up Communities of Practice (CoPs) in eleven technology fields in order to foster horizontal collaboration across model ranges. Communities of Practice are "groups of people informally bound together by shared experience and passion for a joint enterprise" (WENGER & SNYDER, 2000, p.139). Formal teams and Communities of Practice differ in four characteristics: First, the purpose of CoPs is self-defined and not preset by management as in formal teams. Second, CoP membership is not assigned as in formal teams but self-selected. Third, engagement in CoPs is driven by a passion for a topic domain instead of formal job requirements. Fourth, CoPs exist as long as members maintain their interest and engagement (p.142). Organizational CoPs create a context that enables and stimulates experts to share their knowledge and to learn from each other (LAVE, 1988; WENGER, 1999; WENGER & SNYDER, 2000; LAVE & WENGER, 1991). [1]

Together with the CoPs, the organization studied here created a searchable know-how database to support the documentation of key experiences and lessons learned. At the beginning of the CoP implementation process, the organization set up a project team. The team was given the mandate to set up the cross-functional CoPs and to develop and implement the database within two years. The author of this article was a member of this project team and was given the task of monitoring the project's success. At the same time, she was researching this process as part of her doctoral thesis (WOLF, 2003, 2004, 2006). [2]

The task assigned to the author-researcher put her into a situation where she had to generate insights into the impact of a transformation process. On the one hand, her results would have to meet the requirements of the organization, i.e. allow it to assess the success of the knowledge management (KM) implementation process in a way that is meaningful to organizational members. On the other hand, the author-researcher had to make sure that the data she produced would be considered valid by the scientific community and would serve her own epistemic interest. Unlike her employer, the researcher was interested in whether the transformation process would have an(y) impact on the management of organizational knowledge. This created a difficult situation: First, her study had to take place in a very complex and political research environment with a multiplicity of process stakeholders; second, she was both a member of the organization and a PhD student at a university; and third, she had to generate data which would meet both scientific and organizational requirements. This is a very common dilemma that needs considerably more attention, and this article exemplifies how a system theoretic approach helps to deal with it. [3]

Extant studies aimed at evaluating complex interventions into local and regional, i.e. geographically defined systems propose a systemic approach inspired by complexity theory. They claim that this epistemic perspective enables a researcher to encompass the emerging theories of change of different stakeholders as the basis for evaluation. Authors of these studies developed and applied qualitative, multi-method, iterative and participative research designs in their studies (for a summary, see ROGERS, 2008, pp.38-40). LUHMANN's system theory (LUHMANN, 2000; further referred to as system theory) can be classified as an epistemic approach which is akin to complexity theory and offers an appropriate systemic perspective on organizations. The author-researcher therefore decided to use system theory as a theoretical framework. [4]

This article reports on the journey of the research process and proceeds as follows: It starts by introducing the research question from a system theoretic point of view, reviews existing approaches to performance measurement and success monitoring, and derives implications and requirements to be met by a research design that would allow observing a transformation process like the given one from a system theoretic point of view. Thereafter, the "balanced evaluation" approach is introduced. The author then reports on the evaluation process and its findings and discusses them. [5]

2. The Implementation of Knowledge Management into a Social System

From the perspective of system theory, organizations are social systems reproducing themselves based upon self-referential operations in the circular mechanism of autopoiesis, more precisely by affiliating communications to communications (LUHMANN, 1997, p.97). Organizations communicate in the specific form of decisions (BAECKER, 1995; LUHMANN, 2000). They regulate internal and external complexity through selectivity, i.e. by constraining communication opportunities with the help of self-referential selections. Contact with the environment happens via structural links which are created in resonance processes. This implies "that systems react to external events solely based upon their own [decision, the author] structure" (LUHMANN, 1986, p.269; my translation). [6]

In this sense, organizations construct information from the plethora of data surrounding them according to their own criteria for what is relevant for an organizational decision (WOLF & HILSE, 2009). For processing information, social systems apply knowledge (LUHMANN, 1996, p.42). Organizational knowledge consists of pattern routines allowing organizations to deal with information, i.e. for classifying information as new and relevant, for combining it with other information or for rejecting information as irrelevant (BAECKER, 1998). Processing information based upon organizational knowledge is a circular mechanism itself: While it determines (and thereby stabilizes) the social system's patterns for information construction and allows processing information, in the same process organizational knowledge is also either confirmed or changed (WOLF, 2003). [7]

The implementation of KM into an organization is therefore, strictly speaking, aimed at gaining access to and influence on the organization's patterns for information processing, i.e. on organizational knowledge. Many technology-based initiatives which are communicated as KM, such as the implementation of databases, decision-support systems and file sharing servers (HELLSTRÖM & JACOB, 2003), are therefore mislabeled from a system theoretic point of view. Other initiatives aimed at initiating, enabling and facilitating social exchange, or combinations of social and technology-based approaches concerning the patterns the organization applies for handling information, can be classified as "KM projects" because they make (parts of) organizational knowledge explicit. Doing so is very risky because once made explicit, organizational knowledge can become a topic of an organizational decision. When organizational knowledge is rejected as not appropriate, the social system's identity is put at risk (BAECKER, 1998, p.19). Therefore, KM projects usually focus on two types of explicit organizational knowledge: product knowledge and expert knowledge. Product knowledge is knowledge about whose and which problems can be solved by which product or service. Expert knowledge is external knowledge about the organization, for example constructed by consultants (pp.6-9). [8]

The KM initiative this article reports on represents a combination of social and technology-based approaches to KM. Communities of Practice were understood as groups where topic experts discuss product knowledge. The community approach focused "on the creation of those social contexts and processes which enable topic experts (…) to collectively develop solutions to problems" (HILSE, 2000, p.297; my translation). The know-how database, where CoP members were expected to document their agreements and lessons learned, functioned as a visualization tool. It stored information on problem solving patterns (organizational knowledge) which CoP members classified as an appropriate basis for decisions. [9]

3. Approaches to Success Monitoring

"Success monitoring" of a KM implementation process means something different to a system theoretic researcher and to the managers of an organization starting a KM project. System theoretically, the management role is important because managers provide organizational sub-systems and members with an orientation and maintain the overall system's coherence in terms of defining which meanings and objectives are valid in the given context (WIMMER, 1996). Success monitoring is from their perspective a means for generating "objective" data on the process in question, and is often translated with "performance measurement" (RIST, 2006). Unlike them, the system theoretic researcher understands "success monitoring" as evaluation (BOHNI NIELSEN & EJLER, 2008). [10]

3.1 What organizations mean by success monitoring: Traditional approaches

Jody KUSEK and Ray RIST (2004, p.227) define performance measurement as "system of assessing performance of (development) interventions against stated goals." From a system theoretic point of view, success monitoring provides organizational members with an orientation about a risky organizational transformation. Related activities constitute a self-observation process enabling the organization to introduce criteria which make the before and after difference(s) of the KM implementation project visible, thereby allowing for communication and further decisions about the transformation. Monitoring reduces uncertainty. The management of an organization is therefore interested in measurable, "objective" results which allow it to continuously decide on the KM implementation process and to feel in control of it. Monitoring results thus have to take on a shape which enables the organization to use them as the basis for decisions; in the case of a private company, this means numbers, figures and measures in PowerPoint presentations (WOLF, 2003). This form of "transferring" explicit knowledge marks information as relevant, thereby allowing decisions about it and rendering the autopoiesis of social systems possible. [11]

Not surprisingly, the vast majority of literature describing approaches for monitoring the success of KM initiatives points to performance measurement approaches. Studies describe how managers define both process and outcome goals for their KM initiatives at the beginning of the implementation process which are then measured accordingly: Whereas process measures focus on monitoring the actual progress of the KM initiative, outcome measures assess final outcomes (HELLSTRÖM & JACOB, 2003). Frameworks for assessing outcomes of a KM process like the Intangible Asset Monitor (SVEIBY, 1997) or the Balanced Scorecard (KAPLAN & NORTON, 1992) apply measures based on scores for growth, renewal, stability, performance and efficiency (for a summary, see MARTIN, 2000). Approaches to monitoring process progress measure how successful the organization, teams or individuals have been in attaining the goals and milestones envisioned for the implementation process (e.g. LIEBOWITZ & SUEN, 2000). [12]

3.2 Why systemic researchers talk about "evaluation" rather than "success monitoring"

By contrast, a systemic researcher assigned to monitor the "success" of a KM implementation process is interested in observing how organizational knowledge, i.e. the organizational patterns for processing information, is impacted by the intervention, both concerning patterns for applying organizational knowledge and patterns for changing it. He or she is therefore much more interested in evaluation, defined as "careful retrospective assessment of the merits, worth and value of (…) interventions, which is intended to play a role in future practical action situations" (VEDUNG, 2004, p.3), than in "success monitoring." [13]

This is not trivial as it asks for a goal-free evaluation approach which takes into account that KM implementation processes have moving targets, because "(…) when we advance, the goals change due to what is learnt in the process" (HELLSTRÖM & JACOB, 2003, p.57). From this, two major challenges arise which put the researcher into a paradoxical situation (WOLF, 2003): First, changes in organizational knowledge cannot be observed in real time but are constructed only retrospectively as the difference between before and after. A systemic researcher faces the difficult task of inferring the impact of the KM implementation project on organizational knowledge from (observable) communications and the actions of organizational members, as well as from the analysis of artifacts like documents, e-mails or posters. This implies a long-term evaluation in which the researcher should ideally become acquainted with the organization and become a member of it. Second, at the same time, he or she would have to apply mechanisms of self-observation and include reflection phases which would allow him or her to keep a distance from organizational logic and to reflect on findings. The researcher should be able to observe impacts of the intervention which the organization ignores, but also to surprise him- or herself (KNUDSEN, 2010). He or she would have to carefully reconstruct the organizational logic of attributing changes to the intervention on the one hand, and on the other hand generate an understanding of why other changes are not observed by the organization or are attributed to other processes. [14]

The discussion above suggests a qualitative, explorative research design that allows for including multiple actor perspectives, thereby generating a multifaceted description of the logic(s) according to which the social system operates and allowing conclusions on the impact of the KM initiative on organizational knowledge. [15]

3.3 A systemic research design: Balanced evaluation

The field of constructionist learning and assessment has discussed the problem of goal-free learning and developed approaches for goal-free evaluations. Related work is focused on relational processes of perceiving and debating process outcomes by organizational members. Some scholars have highlighted the importance of integrating multiple perspectives and voices into the evaluation process (e.g. JONASSEN, 1991; WILLIAMS & BURDEN, 1997; HELLSTRÖM & JACOB, 2003). [16]

There are several relevant studies inspired by complexity theory which evaluate interventions into local and regional, i.e. geographically defined social systems. Scholars incorporated the basic ideas of constructionist approaches, but as they were more interested in systemic than relational processes, they altered assumptions and recommendations for evaluation procedures in such a way that researchers would be able to observe systemic decision and communication patterns. These approaches are characterized by the aim to encompass the emerging theories of change of different stakeholders as a basis for the evaluation; all of them apply qualitative, multi-method, iterative and participative research designs (for a summary, see ROGERS, 2008, pp.38-40). [17]

When searching for similar evaluation approaches applicable to interventions and transformation processes in organizations (instead of regions) understood as social systems, the author-researcher recognized a relative paucity of contemporary studies into the evaluation of interventions into an organization and the need for a more modern body of work. She came across a system theoretical evolutionary evaluation approach called "balanced evaluation" (ROEHL & WILLKE, 2001) which was aimed at integrating multiple perspectives and using multiple methods for making these perspectives visible in organization studies. This approach was at a conceptual stage: It had been developed based upon the experiences of its authors but not yet systematically applied and tested in scientific studies. However, in the light of its conceptual appropriateness for her epistemic interest and theoretical perspective, she chose this approach for her two and a half year longitudinal study. In balanced transformation processes, both change requirements and the organizational willingness and capability to change are high. This makes them different from traditional organizational development projects (low change requirements but high organizational willingness and capability to change) as well as from turn around projects (high change requirements but low organizational willingness and capability to change). Balanced transformation processes usually rely on a mix of top down and bottom up approaches to the implementation process (HEITGER, 2000, p.5). The described KM implementation process can be classified as such a balanced transformation process. [18]

Heiko ROEHL and Helmut WILLKE (2001) suggest the need to monitor a balanced transformation process with a balanced evaluation process. Their basic assumption is that the decision to select a particular change process from different possible strategies between rationalization and organization development is determined by how organizational reality is co-constructed by the members of the organization. Thus, evaluation cannot produce "objective results" because it is based upon presuppositions:

"The term evaluation labels a systematic assessment of a complex process. Evaluation presupposes objectives, criteria and measures and has (in contrast to controlling) an interest in developing an understanding of the whole non-trivial interrelation of elements in the evaluated process" (ROEHL & WILLKE, 2001, p.28; my translation). [19]

The objective of balanced evaluation is to establish an "emergent" evaluation practice which would be customized to organizational reality and accommodate the evolutionary nature of the transformation process and its particular objectives. The evaluation therefore should include a broad number of criteria and be conducted in parallel with the transformation process. Criteria that guide observation have to be questioned and adapted continuously. In essence, according to Heiko ROEHL and Helmut WILLKE (2001) "balanced evaluation" means to continuously reflect on the evaluation process according to four groups of questions:

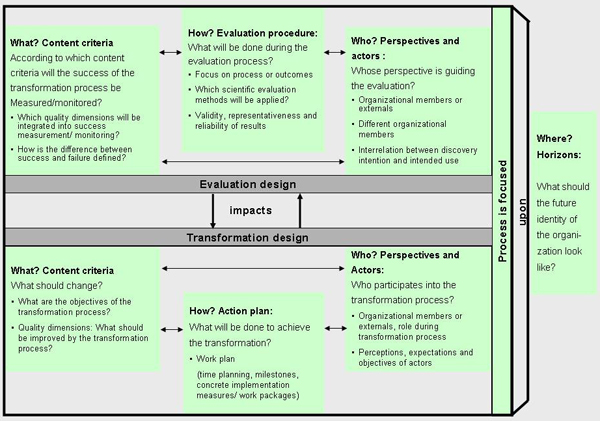

Content criteria: What is evaluated? The evaluation criteria applied lead to statements on elements of the change process classified as relevant for its success or failure. Criteria therefore should be customized to organizational reality and the change process and defined by different process stakeholders. An intentional and formal process of defining, selecting and validating evaluation criteria (how do we decide that we have been successful?) ensures that they stay revisable. It is of particular importance to formulate also non-financial objectives, for example related to learning, knowing or process improvements.

Perspectives and actors: Who evaluates? An evaluation incorporates the perspective and interest of the evaluator. He or she thus has significant "definition power" by classifying evaluation results as good or bad, right or wrong. Balanced evaluation argues for the inclusion of multiple perspectives for minimizing the impact of single perspectives on evaluation results. Transformation and evaluation processes should be treated as separate and not led by the same person.

Processes: How is evaluation processed? Traditional evaluation processes often have the character of an inventory, i.e. they capture the results of a transformation process at a certain point in time based upon previously defined criteria. This is problematic against the background of the evolutionary nature of transformation processes: It is almost impossible to define criteria at the beginning which would allow a statement on the success or failure of the process at any later point in time. Balanced evaluation therefore questions the adequacy of evaluation criteria continuously by reviewing the objectives of the transformation process: Are they still the same or have they changed? In case of a change, evaluation criteria have to be adapted.

Horizons: Where does the evaluation lead to? Both the objectives of the transformation process and its evaluation criteria incorporate a statement on the future of the organization. Evaluation thus has to communicate evaluation results in a way that they meaningfully contribute to the process of defining the future organizational identity. [20]

Compared to traditional approaches, balanced evaluation has three significant advantages (ROEHL & WILLKE, 2001, p.33): First, the formal definition and continuous discussion of the adequacy of evaluation criteria enables a researcher to develop and apply criteria that reflect patterns of sense making of the organization. This supports the organization in observing the change process as it provides it with customized lenses and "monitoring tools." Second, the integration of multiple process stakeholder perspectives enhances the legitimacy of the evaluation results. Third, the future orientation of the evaluation connects evaluation criteria and aspired organizational identities. Findings from balanced evaluations therefore contribute to translating implicit pictures of the future organization into a language which can become an object of a decision. Figure 1 visualizes the interdependency between the balanced evaluation and the balanced transformation process:

Figure 1: Interdependency between balanced evaluation and balanced transformation processes, adapted from WOLF (2003, p.107)

[21]

4. The Case Study—Data Gathering, Analysis and Findings

Balanced evaluation implies that the evaluation approach emerges and develops together with the transformation process. This section reports on the evaluation approach applied and the findings of this case study. [22]

Overall, a mixed evaluation (and research) design including a broad range of both qualitative and quantitative evaluation methods was applied over a two-and-a-half-year evaluation period (2000-2002). The transformation process was constantly adapted to the needs of the company. It consisted of four phases: Conception (months one to four), implementation (months five to twelve), improvement (months 13 to 24) and reflection (months 24 to 30). During all phases, the evaluation design was emergent; the author-researcher applied different methods for data gathering and analysis according to the needs of the organization and the dynamics of the transformation process. Methods included participant observation, qualitative interviews, group discussions and quantitative measures such as regular statistical investigation of data related to CoP characteristics and database usage. Findings were consolidated, analyzed and mirrored back to the organization regularly during the process. The phases and evaluation instruments used in the evaluation process are described in detail below. [23]

The description will include reflective text passages which are used to present the perspective of the author-researcher on the emergent research process that forms the focus of the paper. These text passages are marked with italic font and written in the first person singular. [24]

4.1 The conception phase

During the first four months of the project, the aim of the project team was to develop a CoP implementation plan which would convince the management board. Activities included reviewing organizational structure diagrams and interviewing experts to identify topics and needs for collaboration across the five car platforms. The project team developed a map of critical knowledge domains and relevant CoP topic areas as the basis for the implementation plan. Furthermore, a first prototype of the know-how database was designed. [25]

At the end of this phase, the management board approved the implementation plan. In addition, the project team was mandated to develop and apply a systematic performance measurement and success monitoring approach for ensuring management information during the project. At this point in time, the project team employed the author-researcher as a member of the project in the formal role of a PhD student. The author-researcher was assigned responsibility for success measurement. [26]

Because I became a team member, I was able to carry out participant observation throughout the whole transformation process (GUBA & LINCOLN, 1981; PATTON, 2002). In order to reflect upon my own role(s) and my own observation premises, I maintained a work-focused observation diary (WEBB, 2009), recording on a daily basis what happened, to whom I had been speaking and what I had talked about with these people. The aim of this was to keep track of who or what influenced or guided my perceptions during different phases of the process. As I additionally wished to capture changes in the patterns of perception and sense making concerning the KM initiative expressed by the different process stakeholders, I used field notes in which I documented statements of the different actors. These notes took the form of descriptions of situations/events or quotations of other people's utterances. I additionally documented my own interpretations of what the documented item meant in the context of the KM implementation process. [27]

4.2 The implementation phase

During the implementation phase from months five to 12 of the project, 82 cross-functional CoPs and the know-how database were launched. Experts were invited into the CoPs, although the decision to join was entirely voluntary. One major problem was that line management did not necessarily support experts becoming CoP members because there was no time budget dedicated to the community work. However, by the end of the implementation phase approximately 1,000 employees had become CoP members. The project team conducted kick-off events, trained CoP members and developed a concept for performance measurement and success monitoring. [28]

The criteria which the project team defined as relevant indicators for project success during this phase were strongly linked to the objectives defined in the implementation plan. It was obvious that the management board would measure the project team against the defined milestones and objectives. The next significant milestone was the finalization of the CoP structure implementation. Until this milestone was reached, the project would have to show that all related short term objectives were met. One major restriction to data gathering and analysis was that it should be done with easy-to-handle instruments because only limited resources could be allocated to the task of performance measurement and success monitoring. The project team had promised the management board that by the end of the implementation phase, the CoPs would be running, i.e. would meet and discuss relevant topics once per month, and that the database would be filled with a minimum of three articles per CoP. They therefore designed a template for the collection of statistical data from CoPs such as the frequency of meetings, the attendance rate of the community of practice members and the number of published and planned contributions for the database. [29]

In order to show at a later stage of the project that both the CoP work and the database were useful and used, the template for collecting statistical data incorporated an appraisal of the benefit of CoP membership for CoP members (scale: 1 = very high, 4 = inexistent), and the access rates of documents in the know-how database were documented. Furthermore, a form for the documentation of success stories and (quantitative and qualitative) benefits from the community work was developed by the project team. The project team additionally ran two workshops with the CoP facilitators where, amongst other things, the approach to performance and success measurement was presented. Although not officially declared as part of the success monitoring, data on the perspectives of CoP facilitators on what worked well, what was problematic and where support would be needed were collected. [30]
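The statistical template described in the two preceding paragraphs can be pictured as one record per CoP that is checked against the two targets promised to the management board (one meeting per month, at least three database articles). The following is only a minimal sketch under stated assumptions: the class name, field names and example values are hypothetical and are not taken from the project's actual template or data.

```python
from dataclasses import dataclass

# Targets the project team promised to the management board (from the text).
MIN_MEETINGS_PER_MONTH = 1
MIN_PUBLISHED_CONTRIBUTIONS = 3


@dataclass
class CoPReport:
    """One CoP's entry in the statistical template (all field names hypothetical)."""
    name: str
    meetings_per_month: float
    attendance_rate: float        # share of members attending, 0.0 to 1.0
    published_contributions: int
    planned_contributions: int
    benefit_rating: int           # 1 = very high ... 4 = inexistent

    def __post_init__(self):
        # Reject values outside the ranges the template allows.
        if not 0.0 <= self.attendance_rate <= 1.0:
            raise ValueError("attendance_rate must lie between 0.0 and 1.0")
        if self.benefit_rating not in (1, 2, 3, 4):
            raise ValueError("benefit_rating must be 1, 2, 3 or 4")

    def meets_targets(self) -> bool:
        """Check only the two short-term implementation targets."""
        return (self.meetings_per_month >= MIN_MEETINGS_PER_MONTH
                and self.published_contributions >= MIN_PUBLISHED_CONTRIBUTIONS)


# Illustrative values only, not data from the study.
example = CoPReport("Example CoP", 1, 0.8, 3, 2, 2)
print(example.meets_targets())
```

Such a check covers only whether the formal targets were reached; it says nothing about the quality of the CoP work, which the template itself did not capture.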

My documentation in the field notes was very open during these six months. I tried to record how different actors in the process construct meaning on the CoP implementation process. [31]

To summarize, the major focus during the implementation phase was on measuring short term implementation success. In addition, the project team developed some success monitoring instruments for gathering data on the long term benefits of the CoP work. [32]

The performance measurement concept developed and used during the implementation phase strongly reflected the need of the project team to demonstrate to the management board that all implementation objectives were met. Data gathering thus aimed at uncertainty reduction for the project team in the sense that it allowed team members to assess their work against the formal project objectives. At the same time, the data have been used for calming down the management board, enabling it to decide about implementation success at the end of the implementation phase and to support the project further. [33]

The perspectives of the CoP members were marginalized in the performance measurement and success monitoring approach that was developed. The form for the documentation of CoP success stories and (quantitative and qualitative) benefits was the instrument that was meant to give them a voice, and the workshops allowed them to make their perspectives explicit. CoP facilitators stated in these workshops that from their point of view, the highest benefit of the CoP work was the opportunity to openly discuss important questions across car platform boundaries. According to them, problems arose from the double function of CoP members in the vertical organizational line and the horizontal CoP structures, from the limited funding for CoP work and from the missing competence to define cross-functional guidelines. Without decision making competence, cross-functional work did not make much sense to them as the solutions developed were not considered mandatory for all model ranges. [34]

I tried to gather the perspectives of different actors involved in the implementation process. However, due to my organizational role I had only very limited access to members of the management board and CoP members. Thus, most of the field notes report on observations related to my work in the project team. [35]

Once the data gathering instruments for the required performance measurement and success monitoring approach had been developed, they were presented to the management board and the CoP facilitators. Whereas the management board was satisfied with this approach, the community facilitators were indignant, arguing that the indicators allowed for no conclusions on the quality of the work. The control inherent in the performance measurement activities was perceived as "stolen" time that should rather be spent on content. The form for documentation of success stories was largely neglected. [36]

Nevertheless, the management board asked the project team to collect the statistical data. Not surprisingly, all CoPs complied with the previously formulated expectations of the management board: All reported one CoP meeting per month with an average attendance of 80% of the members and three contributions published in the database. In order to meet the requirements, one large contribution was divided into three smaller ones if necessary. The management board was satisfied. [37]

During the implementation phase of the process, I tried to stay in the background and to observe which criteria for project success the organization itself would use. This placed me in a problematic situation: The other project team members felt that I did not engage enough, i.e. that I did not take on the required responsibility. They regarded me with suspicion because they knew that I was documenting events and utterances in my field notes but that this documentation was not available to them. Some CoP members even expressed the suspicion that the data gathering was only done because I, as a PhD student, was in need of data. [38]

As said above, I was mainly in contact with the members of the project team. Therefore, the two workshops with CoP facilitators were very important for me because they allowed for the gathering of insights into perception patterns of CoP members. [39]

For data analysis, I coded the data from my field notes at the end of the phase, identified themes and clustered them into topics (MILES & HUBERMAN, 1994). At this point in time, I did not perform member checks so as not to influence the perceptions and sense-making patterns of the team. I did, however, discuss the results with assistants at the university where I wrote my PhD. [40]

In month 12, the deployment of communities of practice was considered complete and celebrated in a large event. However, the community facilitators' spirit during the reflection workshops, held every six months, was ambivalent as described above. [41]

Overall, during the implementation phase the project team developed an observation structure which allowed the team members and the management board to decide whether the implementation phase was a success. For the next 12 months, the management board formulated new objectives: The number of required database publications was doubled, and the CoPs were expected to have monthly meetings with a minimum of 80% of CoP members participating. As a further requirement, the project team was assigned the task to improve the database functionality as well as CoP work in a way that CoPs could self-organize without project support after the end of the project. The knowledge about what should and could be improved was held by the CoP members. Thus it became clear that their perspective would have to be more integrated into performance measurement and success monitoring during the improvement phase. [42]

I felt that to holistically evaluate the impact of the transformation process on organizational knowledge I would have to get more access to the perspective of CoP members. I therefore planned to conduct expert interviews with CoP members on major topics which I deduced from a first qualitative analysis of my field notes (MILES & HUBERMAN, 1994). [43]

The improvement phase lasted from months 13 to 24 of the project. During that phase, efforts of the project team were focused on the optimization of the CoP work. [44]

The task of optimizing the newly implemented working structure had an impact on the performance measurement and success monitoring approach: Besides the need to demonstrate to the management board that CoPs were performing well and that the knowledge database was filled and used, the project team had to identify areas for improvement and make the CoP structure sustainable in a way that CoPs could work in a self-organized manner beyond the end of the KM implementation project. [45]

To demonstrate that the CoPs were active, the template for the collection of statistical data continued to be used during this phase (data collection every three months), and database hits were documented. However, the data collected with these instruments did not provide any specific insights into the quality of the CoP work and its benefits for the organization. Furthermore, the project team understood that in order to unravel improvement needs it would have to rely on the perspective of the CoP members. Therefore, a complex CoP audit assessment was planned. Although the aim was explorative, project members felt that they would need an audit assessment guideline to structure their observations and to generate results which would allow a comparison of the performance status of the individual CoPs. [46]

At the beginning of this phase, I finished the interview guideline for my expert interviews. I asked members of the project team for their feedback. We then realized that my interview guideline could serve as the guideline for the audit assessment. Project members felt that my expert interviews would duplicate the audit assessment and decided that I should not conduct them. In return, all my questions would be asked during the audit assessments and I would be allowed to attend as many of them as my time permitted. [47]

The final audit guideline rated community performance in the following six core areas of CoP work:

Efficiency of community work coordination (organization and facilitation of meetings, roles)

Engagement (participation, collaboration, member fluctuation, guests)

Focus on content which is strategically relevant to the organization

Interconnectedness with other CoPs, formal working groups, external experts etc.

Quality of contributions in the know-how database

Quality of meeting outcomes, i.e. achievement of community objectives [48]

The audit assessment rated the actual status of the community work for each of these six areas on a LIKERT scale between 1 (very bad) and 5 (excellent). The audit guideline furthermore contained open-text fields in which answers to the questions "What works well in the CoP?," "Where would you like to improve or need support by the project team or the management board?" and "What are tips and tricks you would like to suggest to other CoPs?" were to be documented for each of the six core areas. In addition, project team members asked for success stories, which were documented. [49]

Apart from documenting what CoP members said during the audit assessment, I intensively documented utterances and activities of further actors such as the project members, employees not involved in the CoPs or management board members in order to gain a holistic picture of sense making patterns concerning the CoP process and its benefits. Still, access to members of the management board was limited. [50]

At the end of this phase the organization ran an employee satisfaction survey. All of a sudden, the project team was invited to formulate four questions on the topic area "Knowledge Management" for the survey. The questions which the employees were asked to answer by selecting one of the options "yes" and "no" were:

I am well-informed on the newly implemented KM elements "CoPs" and "database."

I am expecting a noticeable value from the recently implemented KM elements "CoPs" and "database."

To my mind, in our department know-how is systematically gathered and applied.

In our department it is appreciated if employees engage in CoPs/database. [51]

Statistical data gathered with the performance measurement instruments for demonstrating that the CoPs were active did not indicate any performance change. Through the audit assessment, the perspective of the CoP members came into the focus of the performance measurement and success monitoring activities. The audit assessments were held between a group of three CoP members, including the community facilitator, and two project team members who were familiar with the respective CoP and had supported it from the very beginning. The author-researcher, who was officially assigned the role of an "observer," participated in 60% of the audit assessments. [52]

The assessors and the CoP members prepared their answers to the questions in the audit assessment guideline prior to the audit assessment. They then negotiated an assessment mark assigned to the CoPs in each of the core areas. Additionally, project members documented qualitative answers and success stories. Overall, the audit assessments showed that the CoPs worked well: 31% were assigned mark 1, 55% mark 2 and 14% mark 3. The 14 percent assigned mark 3 were CoPs which had their kick-off meeting relatively late, so that they were still in the phase of defining topics and structure. During the audit assessments, the CoP members talked mainly about four topics:

Integration into the existing decision structure: The CoP members wished to be assigned a decision making competency in order to be able to implement cross-functional guidelines, even against line management decisions. They expressed anxiety about not being supported beyond the end of the project and asked for specific management attention.

Conflict with line function: CoP members reported conflicts with their line managers who had not necessarily supported their engagement in the CoPs. The biggest problem was that there was no official budget for the CoP work. At the same time, the management board expanded the tasks assigned to CoPs, for example as members of the new innovation management panel.

CoPs and performance monitoring: The CoP members stated that they participated in collecting the statistical data in response to pressure from the management board but that they still did not see its value. They declared that they saw value in performance measurement as long as their objectives were supported: Monitoring the number of database contributions was seen as speeding up the process of filling the database, the documentation of success stories was understood as helping to argue the value of their work to the management board, and the audit assessment was classified as supportive through the provision of advice and feedback. They disliked activities which they felt were related to controlling only, like having to document and report the number of members at the CoP meetings.

Benefits and values of the CoP work: As CoP members feared losing support after the end of the implementation project, they felt that talking about the benefits of their work and filling in the form for documentation of success stories would help them to demonstrate their value to the management board. The value which their stories talked about was related to cost cutting at the level of the organization as a result of better information flow and standardization between departments (reduction of projects reinventing the wheel, usage of synergies, definition of best practices, consistent behavior in front of suppliers, fast access to information, shorter time to market) as well as to interconnectedness and opportunities to learn at the personal level (knowing who the experts are, having a holistic picture of the development process in the organization, insights into what others do and new developments within their own topic area). [53]

Interestingly, the audit assessments changed the CoP members' perception of the project team dramatically. The CoP members perceived the audit assessment as sensible and supportive. The project team advanced from a bogeyman held responsible for unpopular orders of the management board to a counseling unit representing the CoP members' interests towards the board. Accordingly, the self-perception of the project team members changed: They understood themselves more as a support and advisory unit than as an implementation project. [54]

Something similar happened to me: As I was very much engaged in the development, conduct and analysis of the audit assessment process, project team members felt that I was now much more committed to the project. Also, since CoP members knew that I was documenting observations and that I had a somewhat autonomous role in the project team, several CoP members started to pass insider information on to me and acted as sparring partners for reflecting on my observations. [55]

Apart from the insights on the perspective of the CoP members presented above, I came up with additional interpretations. To my mind, the CoP work must have produced more benefits to CoP members than conflicts with the line management, because there was no sign of a boycott by the CoP members. Furthermore, I was able to document changes in the patterns of dealing with information, i.e. in the organizational knowledge: The CoPs became a new decision structure which was integrated into existing organizational structures. By the end of the improvement phase the management board had assigned them the official competency to define cross-functional guidelines. Thereby, new horizontal decision boards were created. These boards documented the basic principles of their decisions (what kind of information is relevant and how it should be dealt with) in the database. In addition, a new cross-functional language for talking about professional issues in the CoPs was developed and documented in glossaries in the database. It rapidly became important to be a member of CoPs and to consult the database, even without an assigned time budget, because decisions on cross-functional best practices were documented there. This was a massive change in organizational knowledge. [56]

I discussed these interpretations with members of the project management team. They agreed that they might be valid interpretations but felt that my hypothesis that line management was not overly supportive of the CoP implementation process was too negative. This is not surprising because all of them had been line managers themselves before they became project members, and an explicit discussion might have challenged their organizational identities. [57]

The employee satisfaction survey was filled in by (almost) all employees of the organization. It revealed, not surprisingly, that only between 25 and 30% of the employees felt that they were well informed on the CoPs and the database and expected the new structure to benefit their own work. This was, however, interpreted as an excellent result because only 20% of the employees were CoP members and, so far, informing non-CoP members had not been a focus of the project. [58]

Prior to the audit, the community members discussed the six topic areas and prepared a self-assessment of their work. The project members attended at least one community meeting prior to the audit assessment. Audit assessments lasted approximately two hours and can be classified as a mix between group discussions and expert interviews. First, the CoP members outlined their perceptions concerning their work and where they would need some support by the project team or the management board. During this expert interview part of the assessment, the CoP members were treated as experts in their field of activity, i.e. their CoP work. The semi-structured interview guideline was used to restrict them to their area of expertise (FLICK, 2009). Thereafter, the project team members gave their own impressions. In a final step, the project team and CoP members negotiated an assessment mark for each of the six core areas. Project members documented the negotiated assessment mark and the result of the discussion on best practices and areas for improvement. Additionally, they discussed the value of the community work perceived by the CoP members and documented it in the form for success stories. This part of the assessment resembled a group discussion where participants negotiate consensus on a certain topic (KRÜGER, 1983) and develop problem-solving strategies through discussing alternatives (FLICK, 2009). The author-researcher took additional notes which were exclusively used for her PhD and transferred into her research diary after the assessment meeting. [59]

Data analysis can also be split into two steps: Quantitative data (assessment marks in the six core areas) were documented and consolidated into an overall assessment mark. Qualitative data were coded by the author-researcher with the software Atlas.ti, drawing on techniques for generating meaning as proposed by Matthew MILES and Michael HUBERMAN (1994): In a first step, the author-researcher noted themes, in a second step these were clustered and finally, relations between themes were mapped. To check the validity of the coding, results were presented to members of the project management team and selected CoP members. Validating interpretations through integrating the perspectives of others allows for ecologic and communicative credibility of results (MÜHLFELD, WINDOLF, LAMPERT & KRÜGER, 1981). [60]
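The quantitative step described above can be illustrated with a minimal sketch. The article does not state how the six area marks were consolidated, so the rule used here (arithmetic mean, rounded to the nearest whole mark) is an assumption, as are the short area names, the function names and the example marks, none of which come from the study.

```python
# The six core areas of the audit guideline; the short names are hypothetical.
CORE_AREAS = (
    "coordination",
    "engagement",
    "strategic_focus",
    "interconnectedness",
    "database_contributions",
    "meeting_outcomes",
)


def overall_mark(area_marks):
    """Consolidate six negotiated area marks (1 to 5) into one overall mark.

    Assumption: arithmetic mean, rounded half up to a whole mark.
    The article does not document the actual consolidation rule.
    """
    missing = set(CORE_AREAS) - set(area_marks)
    if missing:
        raise ValueError(f"missing marks for: {sorted(missing)}")
    for area in CORE_AREAS:
        if area_marks[area] not in (1, 2, 3, 4, 5):
            raise ValueError(f"mark for {area} must be an integer from 1 to 5")
    average = sum(area_marks[area] for area in CORE_AREAS) / len(CORE_AREAS)
    return int(average + 0.5)


def mark_distribution(overall_marks):
    """Return the percentage share of CoPs for each overall mark."""
    total = len(overall_marks)
    return {
        mark: 100 * overall_marks.count(mark) / total
        for mark in sorted(set(overall_marks))
    }


# Hypothetical marks for one CoP, not taken from the study.
example_marks = {
    "coordination": 2,
    "engagement": 1,
    "strategic_focus": 2,
    "interconnectedness": 3,
    "database_contributions": 2,
    "meeting_outcomes": 2,
}

print(overall_mark(example_marks))
print(mark_distribution([1, 2, 2, 3]))
```

Any other consolidation rule, for instance a median or a negotiated overall mark, could be substituted in `overall_mark` without changing the rest of the sketch.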

The project team presented the audit results to the management board as part of the final project report. By this point in time, it became obvious that the CoP work created a significant benefit for the organization and that CoPs were performing very well in terms of the success criteria applied. The CoP members made clear that they would need further continuous support beyond the lifecycle of the initial project. The management board appreciated the work of the project team and changed its organizational status into a permanent Knowledge Management Department. In addition, CoPs received the official authorization to issue cross-functional guidelines. [61]

I included my observations on the change of organizational knowledge and the impact of the KM project on decision structures in a presentation to the management board. Unlike the statistical data on CoP performance and the list of bullet points on the benefits which the CoPs provide to the organization, these insights did not cause any reaction but were largely ignored by the board members. [62]

The management board also discussed the results of the employee satisfaction survey. They assigned the new KM department two major tasks: First, to support the CoPs further and to maintain the platform, and second to advertise the benefits of CoPs and the database to employees who were non-CoP members. [63]

A new phase of community of practice performance measurement and success monitoring started with the end of the project. This phase was observed by the author-researcher for six months; thereafter she left the organization. As the members of the new KM department saw their task mainly in supporting the CoP work and as the management board did not ask for further reporting on the work performed by the CoPs, a new instrument for performance measurement and success monitoring was developed. [64]

The new instrument, the so-called "reflection form sheet," was aimed at assisting the CoPs in the systematic reflection of their work. It can be characterized as a mixture of the audit documentation form and the form sheet for the documentation of success stories. In addition, some statistical data, such as the frequency of meetings and their attendance rate, were to be documented. This form was meant to assist the CoP members in performing a regular self-evaluation. [65]

The KM team launched a big marketing campaign for the CoP structure in the organization. At this point in time, to complete my picture of perspectives on the KM implementation process, I was interested in how non-CoP members would assess the new KM structure. I therefore decided to conduct group discussions with non-CoP members where I asked:

What the interviewees knew about the new KM structure, and

Where they expected a benefit for the organization and for themselves. [66]

The perspective of the CoP members was an important focus of performance measurement and success monitoring. The KM department triggered the reflection process with the new form for the first time three months after the end of the KM project. There were no significant changes in the data, and qualitative answers indicated that the only area where CoP members wished to improve was in networking with other CoPs. [67]

During the group discussions which I held with three groups (six, six and five participants), approximately half of the interviewees acknowledged that they had already heard of CoPs and the database. However, most of them were not aware of what exactly was done in the CoPs or documented in the database. After a short input by me on the new KM structure, five of them stated that they would expect to benefit in their own work from the new transparency on who is an expert in which topic area. Two interviewees expected to save time due to the fast access to information documented in the database. However, five interviewees stated that they did not expect any benefit for their own work. Asked about the benefits for the organization, interviewees agreed that there would be a benefit concerning knowledge exchange (4), cost cutting (7), knowledge transfer and documentation (5) and transparency on who were experts on specific topics (3). Interestingly, none of the interviewees felt that the organization would not benefit from the new KM structure. They felt that for making the KM initiative sustainable and beneficial for all organizational members, the information on KM activities would have to be intensified (32), work with the database would have to be integrated into the daily work instead of being an add-on (10) and functionalities of the platform should be improved (12). Five participants stated that line management would have to support engagement in KM activities and three interviewees expressed the general need for a culture change concerning knowledge transfer in the organization. [68]
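The figures in parentheses above are tallies of coded statements. The article does not say whether they count individual mentions or distinct participants, so the following minimal sketch simply shows both ways of counting. The tuple layout, the code labels and the example statements are hypothetical and not taken from the study.

```python
from collections import Counter


def tally_mentions(coded_statements):
    """Count every coded statement once, regardless of who made it.

    coded_statements: iterable of (session_id, participant_id, code) tuples.
    """
    return Counter(code for _, _, code in coded_statements)


def tally_participants(coded_statements):
    """Count, per code, the distinct participants who raised it at least once."""
    distinct = {(session, person, code) for session, person, code in coded_statements}
    return Counter(code for _, _, code in distinct)


# Hypothetical coded statements from two sessions, for illustration only.
statements = [
    ("group_1", "p1", "more_information"),
    ("group_1", "p1", "more_information"),
    ("group_1", "p2", "more_information"),
    ("group_2", "p7", "line_management_support"),
    ("group_2", "p7", "more_information"),
]

print(tally_mentions(statements))
print(tally_participants(statements))
```

Counting mentions lets a tally exceed the number of participants when the same point is raised repeatedly, whereas counting distinct participants caps each tally at the group size; reporting which of the two was used makes such figures easier to interpret.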

The KM department triggered the CoP reflection process every six months and collected the reflection forms. The self-reporting provided the new KM department with a rapid overview of the actual situation in the CoPs and enabled it to offer customized support if necessary. [69]

The group discussions allowed for the elicitation of judgments about the KM process and its value for the organization from actors who had not been involved in it (KRÜGER, 1983). I selected a random sample of 40 employees who were not CoP members from the employee list of the organization and invited them to participate in the group discussions. 17 people participated. Each of the three group discussion sessions followed the same agenda: First, I asked group members whether they knew the CoPs and the database. Then, I provided them with a short input on the new KM structure. Thereafter, I asked them to name potential benefits for their own work and for the organization. It was interesting to see how participants immediately assessed the benefits for their own work as relatively low but the benefits for the organization as relatively high. These opinions were affirmed during the discussion in iterative conversations between members. The same happened in the very lively discussion on the fourth question where I asked "What needs to happen for this KM structure to become sustainable and beneficial for the whole organization?" [70]

The sessions were tape recorded and partly transcribed. As the KM department did not expect breaking news from the group discussions and I was finishing my PhD, we decided to save resources and transcribed only the answers to the four questions verbatim. The KM department used these results to legitimize the costs of the marketing campaign. [71]

I left the department before the end of this phase. Overall, CoP work seemed to run smoothly, whereas I saw in the group discussions a need to contextualize and integrate the KM structure (for example the usage of the database) into the daily work of non-CoP members for sustaining the new KM structure. This would have potentially generated new objectives and the need of an integration concept developed by the KM department. [72]

From the case study above we see that the introduction of Communities of Practice under the label of Knowledge Management is not simply a means for enabling more efficient creation, documentation and distribution of information. It is a massive intervention into an organization because it has the potential to change its decision patterns together with the organizational knowledge. In the case described, a horizontal structure for selecting information as relevant and processing and deciding about it across organizational boundaries was established. [73]

The described change in the organizational decision patterns happened within the blind spot of the organization in the sense that it was not captured by the performance measurement approaches that produced the data which the management board selected as relevant information about the transformation. However, the performance measurement instruments driven by the management board provided organizational members with an important orientation within the transformation process: First, they helped to judge the actual status of the transformation, i.e. to assess whether significant milestones were reached in time. Second, data collected with these instruments reduced uncertainty in the sense that they permitted the management board members to decide about further steps. [74]

The impact of the transformation process on organizational knowledge and thereby on organizational decision patterns can however only be evaluated with the help of an evaluation design that focuses on sense making patterns produced and applied by different actors involved in the transformation process. The continuous collection of data enables evaluators to visualize the otherwise invisible impacts of the transformation. [75]

This article has reported on the journey of a two and a half year longitudinal study aimed at evaluating the impact of a KM introduction into an organization. This study had to satisfy two parties: First, the organization as employer, which wished to be provided with a systematic performance measurement and success monitoring approach for ensuring management information during the project. Second, the epistemic interest of the author-researcher (and the scientific committee which assessed her PhD thesis) in evaluating the impact of the KM implementation on organizational communication and decision structures in a way that meets the requirements of the scientific community. [76]

The above report shows that this created some conflict potential and dilemmas. These have not been solved in the sense that the author-researcher decided to satisfy the requirements of one side and to neglect those of the other. Instead, she applied a multi-perspective, multi-method and emergent research design which allowed her to adapt the evaluation to the transformation process as suggested by Heiko ROEHL and Helmut WILLKE (2001). This reveals an understanding of the research process as methodologically not determined but mutually interwoven with the object of research, in this case a transformation process. It acknowledges that research aimed at studying dynamics or processes should be process-driven itself. In essence, this procedure enabled the author-researcher to adapt her observation patterns to changes in the transformation process and to develop new evaluation methods for integrating further stakeholder perspectives which emerged (for example the perspective of the CoP members during the improvement phase or the perspective of non-CoP members during the reflection phase). Applying an emergent research design and integrating as many perspectives as possible of members of a research field enables the comprehension of the multiplicity of its characteristics and enhances the reliability (in the sense of coherence with existing perspectives on the researched process) of insights produced by qualitative research (BOGUMIL & IMMERFALL, 1985, p.71). This approach worked very well in the described research journey. [77]

Reflecting on the research journey in detail, we find a mix of qualitative and quantitative measures which have been developed and applied for different purposes. We find that methods which have been used for providing the organization, especially the management board and the project team, with data were primarily quantitative whereas methods applied by the author-researcher were qualitative. This relates back to an important difference in the interest of the two sides: Whereas the organization was interested in data on the current status of the implementation of the KM project, the author-researcher was interested in observing a possible transformation in organizational decision structures for dealing with information, i.e. in organizational knowledge. It also raises the question of how organizations, which tend to like "hard data" as evidence, can accept and benefit from qualitative insight and use it systematically. Authors of system theoretic studies often reflect on how it might be possible to communicate their findings in a way that the system they study could make use of them (see for example MEISSNER & SPRENGER, 2010). From this case study, we can however (and not surprisingly) see that such an aim would also require attempts by the organization to select the knowledge produced by researchers as relevant. This is a key question for Knowledge Management and brings up the issue of tacit knowledge in particular. [78]

The methods developed and applied by the organization and the author-researcher were functional in the sense that they served their respective logics for observing the transformation process. The management board was in need of data which allowed it to decide whether the transformation process was successful by linking its outcomes back to pre-defined objectives. These objectives were quantitative, like the number of articles in the database, the number of hits or the performance rates of the CoPs, which is in line with traditional performance measurement approaches which argue for assessing final outcomes (HELLSTRÖM & JACOB, 2003). Based on these criteria, the management board was able to decide whether to continue or to stop the KM implementation process. They formed its framework for self-observation. The project team used these data for justifying its efforts to the management board. Content criteria changed with the assignment of tasks to the project, which indicates that the focus of the transformation process changed the focus of the evaluation process. For research which is assigned the task of reporting on the success of a transformation process, this implies that the questions to ask and the data to report are emergent and determined by the organizational self-observation and decision logics. [79]

The additional qualitative data gathered by the author-researcher, i.e. observations gathered in the project diary, qualitative data from interviews and group discussions, enabled her to grasp patterns of sense making which different stakeholders applied to the transformation process as well as its impact on organizational knowledge. In this way, it was possible to integrate sense making patterns of groups of minor relevance to the management board like the CoP members or non-CoP members. One important issue was that the author-researcher formulated her epistemic interest very clearly for herself at the beginning of the research process. She tried to precisely define the frame of her observation. However, by leaving it to the organization to define the focus of the performance measurement, she was able to keep her observation frame open to the integration of further perspectives, to continuously question and challenge her own hypotheses and to surprise herself (see KNUDSEN, 2010). [80]

Qualitative research is always suspected of producing data that are biased by the subjective perception frame of the researcher (TUCKERMANN & RÜEGG-STÜRM, 2010). This applies particularly to data collected with a diary or field notes. Such data are said to be valuable in that they provide insights into subjective perceptions and help the researcher to reflect on the process and on her role, but limited in that they involve a selection bias (ALASZEWSKI, 2006). In addition, in this case the author-researcher was the only researcher in the organization, and the data were coded by her alone. Gerhard KLEINING (1982) argues that the use of multiple interpreters helps to identify and reduce the influence of a single interpreter's frame of reference. The question that arises is therefore how credible the interpretations of the author-researcher are. As it was not possible to apply the approach of triangulating analysts proposed by Michael PATTON (2002), the author-researcher at least regularly presented her hypotheses and selected material to the members of the research group at the university. In addition, she followed the suggestion by Yvonne LINCOLN and Egon GUBA (1985) and continuously performed "member checks," i.e., she asked various stakeholders of the transformation process for feedback on her hypotheses. This suggests a high ecological and communicative credibility of her interpretations, in the sense that others involved in the researched process validated them from their own perspectives (MÜHLFELD et al., 1981). However, while the data can be judged as valid for the case of the researched transformation process, they cannot be generalized: it is not possible to conclude that the same patterns would be detected in any other transformation process in any other organization. Further qualitative studies that reproduce the research design in different organizations and transformation processes are therefore invited. [81]

I sincerely wish to thank Mark LEMON and Jens MEISSNER for their comments on earlier versions of this paper.

Alaszewski, Andy (2006). Using diaries for social research. London: Sage.

Baecker, Dirk (1995). Durch diesen schönen Fehler mit sich selbst bekannt gemacht. Das Experiment der Organisation. In Barbara Heitger, Christof Schmitz & Peter-W. Gester (Eds.), Managerie. 3. Jahrbuch. Systemisches Denken und Handeln im Management (pp.210-230). Heidelberg: Carl Auer Verlag.

Baecker, Dirk (1998). Zum Problem des Wissens in Organisationen. OrganisationsEntwicklung, 17(3), 4-21.

Bogumil, Jörg & Immerfall, Stefan (1985). Wahrnehmungsweisen empirischer Sozialforschung. Zum Selbstverständnis des sozialwissenschaftlichen Erfahrungsprozesses. Frankfurt/M.: Campus.

Bohni Nielsen, Steffen & Ejler, Nicolaj (2008). Improving performance?: Exploring the complementarities between evaluation and performance measurement. Evaluation, 14(2), 171-192.

Flick, Uwe (2009). An introduction to qualitative research (4th ed.). London: Sage.

Guba, Egon G. & Lincoln, Yvonne S. (1981). Effective evaluation: Improving the usefulness of evaluation results through responsive and naturalistic approaches. San Francisco: Jossey-Bass.

Heitger, Barbara (2000). Balanced transformation. Hernsteiner, 13(1), 4-9.

Hellström, Tomas & Jacob, Merle (2003). Knowledge without goals? Evaluation of knowledge management programmes. Evaluation, 9(1), 55-72.

Hilse, Heiko (2000). Kognitive Wende in Management und Beratung. Wissensmanagement aus sozialwissenschaftlicher Perspektive. Wiesbaden: DUV.

Jonassen, David H. (1991). Objectivism vs. constructivism: Do we need a new philosophical paradigm? Educational Technology Research and Development, 39(3), 5-14.

Kaplan, Robert S. & Norton, David P. (1992). The balanced scorecard: Measures that drive performance. Harvard Business Review, 70(1), 71-79.

Kleining, Gerhard (1982). Umriss zu einer Methodologie qualitativer Sozialforschung. Kölner Zeitschrift für Soziologie und Sozialpsychologie, 34, 224-253.

Knudsen, Morton (2010). Surprised by method—functional method and systems theory. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 11(3), Art. 12, http://nbn-resolving.de/urn:nbn:de:0114-fqs1003122 [Date of access: September 30, 2010].

Krüger, Heidi (1983). Gruppendiskussion. Überlegungen zur Rekonstruktion sozialer Wirklichkeit aus der Sicht der Betroffenen. Soziale Welt, 34(1), 90-109.

Kusek, Jody Zall & Rist, Ray C. (2004). Ten steps to a results-based monitoring and evaluation system. Washington, DC: World Bank.

Lave, Jean (1988). Cognition in practice: Mind, mathematics, and culture in everyday life. Cambridge: Cambridge University Press.

Lave, Jean & Wenger, Etienne (1991). Situated learning: Legitimate peripheral participation. Cambridge: Cambridge University Press.

Liebowitz, Jay & Suen, Ching Y. (2000). Developing knowledge management metrics for measuring intellectual capital. Journal of Intellectual Capital, 1(1), 54-67.

Lincoln, Yvonne S. & Guba, Egon G. (1985). Naturalistic inquiry. Newbury Park: Sage.

Luhmann, Niklas (1986). Ökologische Kommunikation (3rd ed.). Opladen: Westdeutscher Verlag.

Luhmann, Niklas (1996). Wissenschaft als soziales System. Hagen: FernUniversität in Hagen.

Luhmann, Niklas (1997). Die Gesellschaft der Gesellschaft. Frankfurt/M.: Suhrkamp.

Luhmann, Niklas (2000). Organisation und Entscheidung. Opladen: Westdeutscher Verlag.

Martin, William J. (2000). Approaches to the measurement of the impact of knowledge management programmes. Journal of Information Science, 26(1), 21-27.

Meissner, Jens O. & Sprenger, Martin (2010). Mixing methods in innovation research: Studying the process-culture-link in innovation management. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 11(3), Art. 13, http://nbn-resolving.de/urn:nbn:de:0114-fqs1003134 [Date of access: September 30, 2010].

Miles, Matthew B. & Huberman, A. Michael (1994). Qualitative data analysis. An expanded sourcebook (2nd ed.). Thousand Oaks: Sage.

Mühlfeld, Claus; Windolf, Paul; Lampert, Norbert & Krüger, Heidi (1981). Auswertungsprobleme offener Interviews. Soziale Welt, 32, 325-352.

Patton, Michael Q. (2002). Qualitative research and evaluation methods. Thousand Oaks, CA: Sage.

Rist, Ray C. (2006). The "E" in monitoring and evaluation: Using evaluative knowledge to support a results-based management system. In Ray C. Rist & Nicoletta Stame (Eds.), From studies to streams: Managing evaluative systems (pp.3-22). London: Transaction Publishers.

Roehl, Heiko & Willke, Helmut (2001). Kopf oder Zahl!? Zur Evaluation komplexer Transformationsprozesse. OrganisationsEntwicklung, 20(2), 24-33.

Rogers, Patricia J. (2008). Using program theory to evaluate complicated and complex aspects of interventions. Evaluation, 14(1), 29-48.

Sveiby, Karl E. (1997). The new organizational wealth: Managing and measuring knowledge based assets. San Francisco: Berrett-Koehler.

Tuckermann, Harald & Rüegg-Stürm, Johannes (2010). Researching practice and practicing research reflexively—conceptualizing the relationship between research partners and researchers in longitudinal studies. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 11(3), Art. 14, http://nbn-resolving.de/urn:nbn:de:0114-fqs1003147 [Date of access: September 30, 2010].

Vedung, Evert (2004). Public policy and program evaluation. London: Transaction Publishers.

Webb, Carol (2009). Dear diary: Recommendations for researching knowledge transfer of the complex. Electronic Journal of Knowledge Management, 7(1), 191-198.

Wenger, Etienne (1999). Learning as social participation. Knowledge Management Review, 6, 30-33.

Wenger, Etienne & Snyder, William M. (2000). Communities of practice: The organizational frontier. Harvard Business Review, 78, 139-145.

Williams, Marion & Burden, Robert L. (1997). Psychology for language teachers: A social constructivist approach. Cambridge: Cambridge University Press.

Wimmer, Rudolf (1996). Die Zukunft von Führung—Brauchen wir noch Vorgesetzte im herkömmlichen Sinn? OrganisationsEntwicklung, 15(4), 46-57.

Wolf, Patricia (2003). Erfolgsmessung der Einführung von Wissensmanagement. Eine Evaluationsstudie im Projekt "Knowledge Management" der Mercedes-Benz Pkw-Entwicklung der DaimlerChrysler AG. Münster: Verlagshaus Monsenstein und Vannerdat.

Wolf, Patricia (2004). Eine Geschichte über Communities. OrganisationsEntwicklung, 23(2), 10-19.

Wolf, Patricia (2006). Wissensmanagement braucht Gruppen: Communities of Practice. In Cornelia Edding & Wolfgang Kraus (Eds.), Ist der Gruppe noch zu helfen? Gruppendynamik und Individualisierung (pp.193-210). Opladen: Barbara Budrich Verlag.

Wolf, Patricia & Hilse, Heiko (2009). Wissen und Lernen. In Rudolf Wimmer, Jens Meissner & Patricia Wolf (Eds.), Praktische Organisationswissenschaft. Lehrbuch für Studium und Beruf (pp.118-143). Heidelberg: Carl-Auer Verlag.

Patricia WOLF, Prof. Dr. rer. pol., is Professor of General Management and Research Director of the Institute of Management and Regional Economics at Lucerne University of Applied Sciences and Arts (Switzerland). She is also Senior Guest Researcher at the Center for Organizational and Occupational Sciences (Switzerland) and Visiting Professor of Knowledge and Innovation Management at the University of Caxias do Sul (Brazil). Patricia holds a doctoral degree in Business Administration from the University of Witten/Herdecke, Germany, and is currently finishing her studies in Sociology, Philosophy, and Literature at FernUniversität Hagen, Germany. Her main research interests cover knowledge transformation and innovation management in social systems (regions, organizations, groups).

Contact:

Patricia Wolf

ETH Zurich

Center for Organizational and Occupational Sciences

Kreuzplatz 5, 8032 Zurich

Switzerland

Tel.: +41 44 632 8194

Fax: +41 44 632 1186

E-mail: pawolf@ethz.ch

URL: http://www.pda.ethz.ch/people/Gaeste/pawolf

Wolf, Patricia (2010). Balanced Evaluation: Monitoring the "Success" of a Knowledge Management Project [81 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 11(3), Art. 10, http://nbn-resolving.de/urn:nbn:de:0114-fqs1003108.