Volume 17, No. 2, Art. 16 – May 2016

Methodological Tool or Methodology? Beyond Instrumentality and Efficiency with Qualitative Data Analysis Software

Pengfei Zhao, Peiwei Li, Karen Ross & Barbara Dennis

Abstract: Qualitative data analysis software (QDAS) has become increasingly popular among researchers. However, very few discussions have developed regarding the effect of QDAS on the validity of qualitative data analysis. It is a pressing issue, especially because the recent proliferation of conceptualizations of validity has challenged, and to some degree undermined, the taken-for-granted connection between the methodologically neutral understanding of validity and QDAS. This article suggests an alternative framework for examining the relationship between validity and the use of QDAS. Shifting the analytic focus from instrumentality and efficiency of QDAS to the research practice itself, we propose that qualitative researchers should formulate a "reflective space" at the intersection of their methodological approach, the built-in validity structure of QDAS and the specific research context, in order to make deliberative and reflective methodological decisions. We illustrate this new framework through discussion of a collaborative action research project.

Key words: qualitative data analysis software (QDAS); validity; methodological decision-making; reflection; technology; collaboration; Dedoose; inter-rater reliability

Table of Contents

1. Introduction

2. A Naturalistic Trust in QDAS

3. The Discussion of Validity in a Post-Qualitative Era

4. Qualitative Research as Practice

5. The Reflective Space in the "Researching Research" Project

5.1 Finding validity in research process instead of in research products

5.2 Consensus formation by breaking a linear coding process

5.3 Open to falsifiability

5.4 Creating an egalitarian collaborative environment

6. Conclusion

1. Introduction

Qualitative data analysis software (hereafter QDAS), such as NVivo, Dedoose and ATLAS.ti, has become increasingly popular in the field of qualitative inquiry (DAVIDSON & DI GREGORIO, 2011), but few discussions have examined the effect of its use on the validity of data analysis. A prevalent tendency among qualitative researchers is to utilize QDAS as a tool kit that can make qualitative data analysis more efficient. For instance, our analysis of 72 research articles published in the peer-reviewed journal American Educational Research Journal in 2014 and 2015 shows that of 24 qualitative research or mixed methods articles, 9 report on the use of QDAS. However, only two articles briefly address the effects of conducting data analysis using research software: one highlights the software's capacity to facilitate collaborative research (KANNO & KANGAS, 2014) and the other praises QDAS for making the analysis more efficient (ZAMBRANA et al., 2015). None of the articles explicate in what sense the use of QDAS has influenced the validity of their studies, nor do they discuss how the underlying assumptions embedded in the software intersect with their research practice. Instead, they treat QDAS as a methodologically neutral tool that facilitates their data analysis. [1]

In this article, we argue that QDAS affects qualitative inquiry in a more complicated way and that its relationship to validity deserves a more thorough examination. We present our interrogation of the relationship between validity and QDAS theoretically, before grounding it within discussion of a collaborative action research project. In our theoretical analysis, we review validity theories and the literature on QDAS separately. Our review suggests that on the one hand, the QDAS-focused literature treats the relationship between QDAS and validity too technically to reach the epistemological foundation of the discussion, whereas on the other hand, scholarship on validity approaches this issue too theoretically to attend to the technological infrastructure of research practice. The lack of integration of these two sets of literature points to the need for deliberate methodological decision-making in the use of QDAS that encompasses both its theoretical and technological implications. Re-envisioning the relationship between researchers and QDAS, we advocate for a more holistic, deliberative and reflective methodological decision-making process in relation to the examination of validity, and suggest the term "reflective space" to conceptualize the QDAS-validity intersection. [2]

To illustrate the concept of "reflective space," we draw upon our experiences of using QDAS in a collaborative action research project. Whereas our use of QDAS in this project has been limited primarily to one platform—Dedoose—the challenges we encountered prompted an ongoing conversation about the methodological implications of using QDAS more broadly. This article crystallizes the outcomes of these theoretically and practically grounded conversations. Specifically, we propose that in the pursuit of valid qualitative data analysis, a "reflective space" can be created at the intersection of the philosophic perspective underlying a specific study, the technological mediation involved, and the research context. Our goal is to mitigate the conceptual gap between the theoretical development of validity and the research practice using QDAS, and to propose a more deliberate framework to approach validity in qualitative studies while integrating data analysis software into the conversation. [3]

In the following sections, we first examine the gap between the literature on QDAS and validity theories, which sets the stage for a more integrated approach to conceptualizing the relationship between research software and validity. Next, we borrow insights from HEIDEGGER's philosophy of technology and propose to construct a "reflective space" for researchers to examine the issue of validity more deliberately and holistically. Finally, we use a collaborative action research project to demonstrate how this new approach can help researchers in the methodological decision-making process. [4]

2. A Naturalistic Trust in QDAS

We start by commenting on a trend that we have observed in qualitative data analysis, namely, a naturalistic trust in QDAS: that is, a view of QDAS as intrinsically trustworthy in strengthening the validity of qualitative data analysis. In this section, we examine how this naturalistic trust manifests in methodological literature and explore how this attitude is amplified by the commercial mechanism related to the dissemination of QDAS. [5]

Scholarship on the impact of QDAS on qualitative inquiry dates back to the 1990s. Advocates of the software packages highlight three aspects of validity that QDAS can help to improve: efficiency, consistency and transparency. For instance, they suggest that the use of research software can significantly improve the speed of qualitative data analysis (EVERS, 2011), which makes it possible for researchers to expand the scope of their research. By quickly searching text and counting frequencies, QDAS allows researchers to interrogate data faster and more precisely, and this in turn adds more consistency to the analysis process (EVERS, 2011; WELSH, 2002). Transparency is believed to be another benefit of QDAS, since it effectively supports researchers in keeping audit trails of their research. Keeping audit trails then allows researchers to track their work and decision-making, as well as to reflect on their research practice (BONG, 2002; BRINGER, JOHNSTON & BRACKENRIDGE, 2004; EVERS, 2011; SMYTH, 2006). [6]

Whereas the above scholarship conceptualizes software packages as if they are neutral to different methodological approaches, other literature discusses QDAS using terms typically reserved for quantitative studies. For example, some researchers suggest that the use of QDAS adds rigor to the analysis (EVERS, 2011; SINKOVICS & PENZ, 2011). By adopting the concept of "rigor," these researchers echo how the term is used in quantitative studies, that is, related to operationally defined variables, procedurally conducted data analysis based on statistical rules, and a pursuit of generalization. In the context of qualitative research, use of the term "rigor" suggests a similar desire for clearly defined procedures and repeatable results. The discussion of rigor is intrinsically connected to the notion of reliability, especially the calculation of inter-rater reliability typically embedded in QDAS packages (KELLE & LAURIE, 1995; SIN, 2007). Defined as the potential for replication of codes by a different coder within an acceptable margin of error (KELLE & LAURIE, 1995), inter-rater reliability becomes a major validity indicator in collaborative qualitative studies (HWANG, 2008; SIN, 2007). However, researchers seldom fully unpack the assumptions underlying the use of terms such as "rigor" and "inter-rater reliability," meaning that the terms are employed without sufficient attention to their positivist derivation. [7]

This optimistic attitude toward technology has been further amplified by the commercial mechanism of inventing and disseminating software products for profit. Currently, most major QDAS packages are run by commercial companies, with a few exceptions such as Dedoose. All of these packages claim to be neutral to different methodological perspectives and thus to appeal to all researchers. For instance, the homepage of QSR International (the producer and disseminator of NVivo products) advertises NVivo products as leading to "smarter insights, better decisions and effective outcomes."1) The profit-driven consumerism that underlies such claims invokes an image of QDAS that in many ways undermines the pursuit of critical thought in research. In this sense, commercial discourse, combined with the tendency in some QDAS literature to focus on validity more procedurally than methodologically, has created an omniscient myth of QDAS. [8]

The popular optimistic attitude toward QDAS has also received several critiques. For instance, some researchers explicitly voice concerns regarding the relationship between validity and QDAS, pointing out that using QDAS may shape the "lens" through which researchers view their data (STALLER, 2002). Researchers may get "sucked into" over-detailed coding at the expense of gaining a more abstract view of their data as a whole (GILBERT, 2002). Focusing on the impact QDAS has on methodological technique (such as potential threats to reliability or trustworthiness of a study, ibid.) is thus one way to demonstrate that in real-world research scenarios, the software can fall short in terms of moving us into better methodological conceptualizations. Specifically, without explicitly calling into question the philosophic stances supporting the popular understanding of validity, both the naturalistic trust of QDAS and its critique remain at the technical level, which in turn fixes the understanding of the software as merely a tool for qualitative data analysis2). [9]

3. The Discussion of Validity in a Post-Qualitative Era

The discussion above reveals the implicit understanding of validity—as strengthened by technical skills and procedures—that is prevalent in literature related to QDAS. Such an understanding often results in the use of positivist methodological terms and lack of attention to the disagreements and tensions among different methodological perspectives. Literature around the concept of validity, on the other hand, has thoroughly unpacked these tensions and disagreements. Indeed, contemporary validity theories draw from a variety of methodological perspectives and theoretical discourses to sharpen our understanding of the concept. However, this scholarship has not yet sufficiently incorporated the influence of QDAS into its analysis. As a result, this scholarship, like the QDAS literature, has contributed to an emphasis on the instrumental use of digital qualitative research technology. [10]

Validity in the field of qualitative research methodology has been a highly debated topic for a long time. Whereas the vigorous conversation around this topic has not led to consensus, it has produced a rich understanding of validity. Indeed, the field is far from reaching a unified stance and yet the passion to engage in a debate has cooled down if not totally faded away. Part of this loss of interest is rooted in the critique of knowledge from post-modernist and post-structuralist perspectives, which has challenged and to some degree deconstructed the term "validity" itself (LATHER, 1993; ST. PIERRE, 2014). We thus find ourselves in a "post-qualitative era" (ST. PIERRE, 2011, 2014), a period characterized by the co-existence of the humanistic qualitative methodology and qualitative inquiry influenced by post-modernism and post-structuralism. Along with the co-existence of these two schools of thought come renewed challenges from neo-positivism and scientism, fueled by neo-liberalist political power (ibid.). Validity, as it is so closely related to the fundamental understanding of knowledge and truth, has thus become one of the most contentious terms open for debate, deconstruction, and reconstruction. As a result, the contemporary qualitative research landscape requires either a shutting down of the validity conversation or acceptance of the proliferation of validity theories (DENNIS, 2013). Neither possibility fosters clarity with regard to the use of software in qualitative data analysis. [11]

Researchers have shown various attitudes toward the proliferation of validity theories. Whereas some welcome the inspiration and creativity that it has brought to the field (BOCHNER, 2000; DENZIN, 2008; LINCOLN & GUBA, 2000; SCHWANDT, 2000), others are concerned that the conversation lacks the capacity to bring together various strands of dialogue or deepen our current understanding of validity (DENNIS, 2013; DONMOYER, 1996). Still others point to the difficulties that this proliferation has brought to the teaching and learning of qualitative inquiry (CRESWELL & MILLER, 2000; LEWIS, 2009; TRACY, 2010). It is beyond the scope of this article to scrutinize the various conceptualizations of validity one by one. But the state of the field does push us to think through what it means to discuss the validity of qualitative data analysis using QDAS in this era of polyphony. Because the question of what accounts for a valid study is so closely related to fundamental questions about meaning and knowledge, contemporary validity theories are usually packaged together with specific methodological perspectives and with particular ontological and epistemological commitments. Thus, reflections on the validity of a qualitative study involve asking the following questions: From what kind of ontological and epistemological positions does the method derive? What understanding of validity do the interwoven philosophic and method-related assumptions give rise to? If validity is understood as such, what are the strategies to strengthen it? The task then is to connect these method-related reflections on validity to the use of QDAS. [12]

When turning to this task, we find that validity theories are not yet ready to take on the challenge of digital technology. Among the plethora of validity theories, very few have paid attention to the technology mediating qualitative data analysis. Rather, mainstream validity theories have successfully relegated the discussion of QDAS to the technical terrain, a sub-field that forms its own research agenda focused on the skills and procedures of data analysis. The unintended consequence of this relegation is a reinforcement of the understanding of QDAS as merely a tool for data analysis, neutral to methodological approach and validity theories, and a fixing of the divide between technology and theory. As a result, theorists rarely pay attention to the technology of data analysis, and QDAS experts seldom engage in theoretical discussions which may illuminate the methodological implications of QDAS. Bridging the conversation between these two sub-fields means trespassing the hidden boundaries that the existing literature sets. Doing so has a twofold implication: it prompts us to reflect upon the taken-for-granted modes of using QDAS, as well as to reinvigorate the conversation on validity by theorizing the material base of qualitative research. [13]

4. Qualitative Research as Practice

The discussion in the last two sections sets the stage for our own investigation into the question of validity and QDAS. We propose to reframe this issue under an overarching understanding of research as practice, which provides a holistic approach without falling prey to the mechanistic dualism that reinforces the view of QDAS as a tool. In the following pages we begin by reflecting on what we view as the central issue, that is, the problematic understanding of QDAS as instrumental. We borrow insights from HEIDEGGER's critique of the instrumentality of technology in his well-known lecture "The Question Concerning Technology" and then explain how this critique can shed light on the issue under examination here. We do not strictly adhere to the whole thesis suggested by HEIDEGGER but treat his critique as the entry point to the discussion. [14]

In his far-reaching lecture, HEIDEGGER sets out to examine human beings' relationship with technology, for a free relationship between the two "opens our human existence to the essence of technology" (1977 [1962], p.1). This allows him to see the essence of technology as something beyond "technology" per se, something that cannot be captured through merely advancing the development of technology itself. He starts by considering the common answers to the question of what the essence of technology is. Two popular answers he notes are: Technology is a means to an end, and technology is a purposive human activity. Whereas his language is rather opaque, HEIDEGGER is a master of using examples. We can use his example of the forester to illustrate his points:

"The forester who, in the wood, measures the felled timber and to all appearances walks the same forest path in the same way as did his grandfather is today commanded by profit-making in the lumber industry, whether he knows it or not. He is made subordinate to the orderability of cellulose, which for its part is challenged forth by the need for paper, which is then delivered to newspapers and illustrated magazines. The latter, in their turn, set public opinion to swallowing what is printed, so that a set configuration of opinion becomes available on demand. Yet precisely because man is challenged more originally than are the energies of nature, i.e., into the process of ordering, he never is transformed into mere standing-reserve. Since man drives technology forward, he takes part in ordering as a way of revealing" (p.8). [15]

In this example, HEIDEGGER describes the mutually transformative relationship between technology and human practice. The forester's technology of measurement brings forth the formation of timber as a commercial product. It may seem naturally correct that the measurement is a means for the forester to achieve his end, which is to produce the commercial product of timber. However, the shape and the use of the timber, as well as the purpose for producing it, are not something that the forester can determine—they are constrained by the larger context. [16]

HEIDEGGER does not make it explicit what this larger context means. Now in retrospect, we know that this context taps into the social structure of the capitalist society commensurate with the development of technology. Within this larger picture, we can hardly claim that an individual human being, such as the forester, is the only one acting with her full agency to set up goals, choose tools and conduct the act. An individual's action occupies only a small part of the whole chain of actions, which are made available, supported and constrained by technology. The idea of taking technology as an instrument assumes that an actor has the capacity to fully command and control technology. It collapses instantly when this underlying assumption does not hold. [17]

Along this line of thought, HEIDEGGER reveals to us what he views as the essence of technology: Technology brings forth new orientations with which human beings approach the world. Modern technology has created a new way for people to act toward the world, which he calls "standing-reserve." Within this new orientation, the world has become a space for the storage of resources and raw materials, as well as for the potential of profit. The technician's gaze toward the world changes a human being's relationship with nature, and puts fellow human beings under the same gaze as something to be manipulated and exploited. Individuals, such as the forester, can be treated as a means for obtaining more profits and extracting more resources. In his lecture, HEIDEGGER utilizes the concept of "enframing" to denote this relationship between human beings and the world made available through the use of technology:

"As the one who is challenged forth in this way, man stands within the essential realm of Enframing. He can never take up a relationship to it only subsequently. Thus the question as to how we are to arrive at a relationship to the essence of technology, asked in this way, always comes too late" (p.12). [18]

As human beings, we are thrown into the pre-structured world that the orientation toward the world has already built in. Our actions, like the actions of the forester, continuously embody enframing. It is not possible to fully escape enframing and to obtain a bird's eye view of this issue in the first place. [19]

Before diving into HEIDEGGER's theory further, we pause for a moment and discuss its implications thus far for our understanding of QDAS. Our earlier discussion of validity and QDAS problematized the deeply rooted assumptions related to the instrumentality of qualitative research software that have shaped the contours of existing literature. HEIDEGGER's lecture allows us to re-think this assumption by shifting the focus from QDAS itself to investigators' interactions with it. If QDAS is merely a tool, then researchers primarily undertake rational action to pursue pre-defined goals when using it. As in the example of the forester, however, HEIDEGGER insightfully points out the impossibility of an actor conducting pure purposive action in relation to technology, since we are already embedded in the web of social actions conditioned and pre-structured by technology. Regardless of whether we are aware of it or not, this structure changes our way of perceiving the world. To be more specific, when using QDAS, researchers are unavoidably affected by how the software packages take up, present and process data. In other words: qualitative data analysis software has already been conditioned by a presupposed understanding of validity, constituted by implicit assumptions about what are considered valid approaches to interpret data. [20]

Here is an example: almost all QDAS packages have basic text searching functions. Some packages also offer advanced search functions based on the identification of codes, such as the query functions in NVivo products3). These functions allow researchers to check the consistency of their interpretations and identify patterns in the data. Based on the repetition of words, these methods or procedures may potentially reduce and decontextualize the rich meanings of the data, even as repetition and the capacity for repetition reflect an embedded understanding of what helps demonstrate validity. The issue at stake here, however, is not just explicating the embedded assumptions of QDAS, but asking how these assumptions affect researchers' understanding of consistency and patterns of data in return. In this case, with or without awareness, researchers subscribe to this pre-understood view of validity as the ability for semantic repetition in the data analysis process. Another example to illustrate this point is the understanding of inter-rater reliability embedded in many QDAS packages, such as NVivo and Dedoose, that offer the use of COHEN's Kappa to measure the agreement on coding among team members in collaborative projects4). The employment of this statistical measure suggests an emphasis on reproduction in the products of the collaborative coding, instead of the dynamic interpretive process of consensus formation. Thus, as with reproduction, we might ask how the use of COHEN's Kappa shapes, or at least presupposes, researchers' understandings of validity. [21]
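To make concrete what such a measure takes into account, the following is a minimal sketch of COHEN's Kappa for two coders, written from the standard textbook definition (observed agreement corrected for chance agreement). It is not the implementation used by NVivo or Dedoose, which we have not inspected; the function name `cohen_kappa`, the code labels and the six excerpts are hypothetical and invented purely for illustration.

```python
from collections import Counter


def cohen_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one code per excerpt."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of excerpts")
    n = len(coder_a)
    # Observed agreement: share of excerpts given the same code by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: computed from each coder's marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both coders used one identical code throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes for six excerpts (invented for illustration only).
coder_a = ["agency", "agency", "method", "method", "agency", "method"]
coder_b = ["agency", "method", "method", "method", "agency", "agency"]
kappa = cohen_kappa(coder_a, coder_b)
print(round(kappa, 3))
```

The only inputs to the calculation are the final code labels each coder assigned. Whatever discussion, disagreement or reinterpretation led to those labels is invisible to the statistic, which is the sense in which the measure attends to the products of coding rather than to the process of consensus formation.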

HEIDEGGER's lecture also prompts us to understand technology as mediating social practices and relationships. The technology of producing timber that the forester uses mediates his relationship with his employer and connects him to the larger net of social practice. Similarly, we suggest that we need to be mindful of how the technology of QDAS mediates our relationship with multiple parties, such as the producers of software packages, our research participants, and our readers. For example, the discourse of methodological neutrality advocated by QDAS producers has been adopted by some researchers in conceptualizing validity in their studies. The transferability of this discourse does not rely so much on the explicit linguistic communication between the two parties. Rather, it is instantiated through utilization of QDAS. The researchers, who may not be fully aware of the complex consumerist tendency underlying the discourse advocated by software producers, may tacitly take up the way the software is said to work by embedding these implicit assumptions in their interpretation of data. As a result, a realm of practice is formed revolving around the production, dissemination and utilization of QDAS packages. Knowledge in qualitative studies is thus produced within this realm of practice where multiple factors (commercial, political and academic) come into play to reconfigure researchers' data analysis. [22]

With the help of HEIDEGGER, we have come to a more differentiated understanding about the instrumentality of QDAS. This new understanding has shifted our focus from the software itself to researchers' interactions with it. Thus, we suggest that one of the major implications that we can draw from this shift is that researchers should pay particular attention to their interactions with software, as well as to how software mediates and restructures researchers' relationship with other parties, such as their research participants and audience. Examination of these issues re-orients research itself towards an overarching concept of practice. Previous arguments accentuate how the use of certain software packages adds credibility to a study. Under this new framework, the argument should revolve around how a researcher, in her data analysis practice, critically reflects upon the "built-in" structure of QDAS and her own methodological position, and makes informed decisions about the most appropriate way to conduct her analysis. [23]

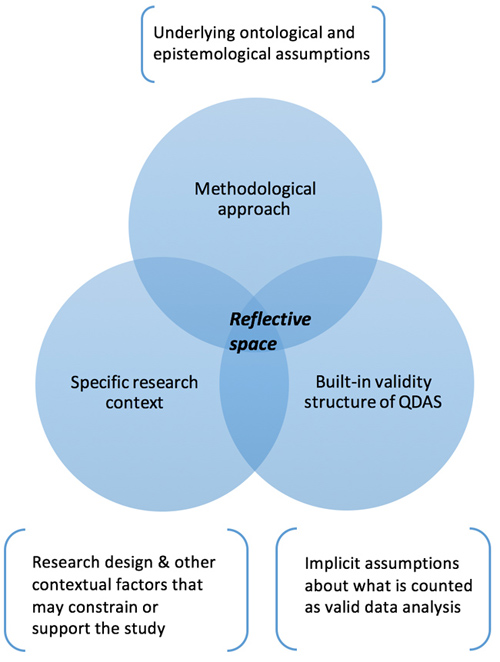

We propose that a conceptual idea of "reflective space" can be created to best facilitate the shift of the analytic focus from QDAS itself to researchers' interaction with QDAS. Forming this reflective space helps us delineate the various factors that a researcher may want to take into consideration when making her decision in working with QDAS. As illustrated in Figure 1, this reflective space represents the intersection of three domains, namely, the specific methodological approach a researcher uses, the "built-in" validity structure of QDAS, and a specific research context. The contents in the brackets show the relatively more backgrounded information that comes into play in relation to the use of QDAS in research practice. These are bracketed not because they are less important, but because they are more implicit and sometimes researchers tend to take them for granted. For example, ontological and epistemological assumptions are often not explicitly stated by researchers. As our review of the existing literature shows, their influence on the use of QDAS is often not sufficiently addressed either. In the domain of the built-in validity structure of QDAS, researchers may have noticed that data analysis software can be used to strengthen qualitative research's validity, but the underlying assumptions of what is counted as valid interpretation may not yet be fully unpacked. Specific research contexts also come into play since real-life research practice is constrained and shaped by practical concerns such as time and financial resources.

Figure 1: Reflective space at the intersection of methodological approach, "built-in" validity structure of QDAS and specific research context [24]

Through this ideographic expression we map out influences that may affect a researcher's claim on validity when using QDAS. The figure does not provide a flowchart to examine validity step by step, nor does it fully capture the dialectic process of reflection; however, we suggest that it can help us uproot the deeply embedded dualistic assumptions about QDAS and validity, better reflect upon qualitative data analysis process and integrate technological considerations into the discussion of validity in a more holistic way. In the following pages we draw upon a collaborative action research project to illustrate the conceptual framework we propose here. [25]

5. The Reflective Space in the "Researching Research" Project

Since 2012 we have engaged in a long-term collaborative research project, which we call the "Researching Research" Project. The project focuses on what and how graduate students in introductory research methods courses learn. It explores the (potential) evolution of students' conceptualization of research through the process of taking a research methods class. The project involves 4 instructors and draws on the experiences of 92 students from 4 sections of an "Introduction to Educational Research" class. Data for the larger study consists of two sets of materials: archived (online) class discussions and student assignments generated by and collected from students; and the notes, e-mails and self-reflections generated by us as course instructors. We utilize both types of data to facilitate our pedagogical and research reflections. In this article, we primarily focus on the second form of the data to illustrate the conceptual framework proposed above. [26]

We used the QDAS package, Dedoose, as a shared virtual workspace to store, annotate and organize the data in the hope that its user-friendly interface and multiple features could support our collaborative research, especially given that all four of us lived in different places when conducting the data analysis. Through our collaboration, we gradually developed an understanding of Dedoose's built-in structure of validity. For example, its developers present the software in a way suggesting that the validity of data is based primarily on two elements: successful distribution of tasks among team members, and consistency among team members' coding as measured by coders' inter-rater reliability. In a publication by the developers entitled "Dedoose: A Researcher's Guide to Successful Teamwork," SICORA and LIEBER (2013) note,

"At the end of the day, we believe that any and all researchers can conduct successful academic work in teams both for the value of distributing tasks and responsibilities (divide and conquer) and the wonders of the world that open up to teams able to cross disciplinary boundaries" (p.12). [27]

Embedded in this statement is an assumption that the very notion of collaborative teamwork should emphasize division of tasks. In addition, the authors state that teamwork should be based on "a high level of agreement" (p.24) and suggest a few strategies to check this agreement. For instance, Dedoose endorses the use of COHEN's Kappa, a statistical measure of inter-rater agreement, which suggests that coding is the central task of data analysis, thus placing emphasis on the product of collaborative coding. Teamwork then is mainly a matter of demonstrating coding agreement among team members. [28]

Our use of Dedoose helped us overcome geographic constraints in our collaboration. However, we found that it also projects a particular way of conducting collaborative data analysis. If we follow its analytic procedure, we also implicitly endorse its embedded understanding of validity. This understanding, however, stands in tension with how we make sense of validity based on our methodological approach, CARSPECKEN's (1996) critical qualitative methodology, which is largely inspired by the German philosopher Jürgen HABERMAS's (1981) theory of communicative action. [29]

HABERMAS conceives of the notion of validity in his analysis of everyday speech acts. For him, validity is intersubjectively and communicatively structured and ultimately uncertain, and it rests on consensus achieved by all dialogue participants free from coercion and power (ibid.). Linking this theory of validity to our own work, we aimed to keep a multi-layered, iterative, and reflective dialogue alive through the whole data analysis process so that there would be enough space to form consensus beyond semantic agreement on a coding scheme. In this way, validity was strengthened through a commitment to understanding the rich meaning of the data through reflective and inclusive conversations. Ideally, the software we used could become the platform where the conversation took place and was tracked and documented. [30]

This approach, which locates dialogue at the center of consensus formation, is significantly different from the approach proposed by Dedoose, in which the validity of the analysis lies in the division of labor and the repetition of the same codes across different materials. The difference created tension and challenges throughout our data analysis and also prompted us to look for innovative solutions. One effective way to deal with this tension was to discuss the validity issues of our project with the constraining and enabling capacity of QDAS in mind, and to juxtapose it with our guiding methodology and the specific research context. In the following section we discuss the principles that helped us to overcome this challenge in our research. This discussion also serves as an example to illustrate how the framework of reflective space can be used in a real research scenario. [31]

5.1 Finding validity in research process instead of in research products

As discussed above, we found Dedoose to be primarily product-focused in its assumptions regarding collaborative data analysis. However, in light of HABERMAS's critical theory, our experiences as a group of four researchers working in collaboration for the last five years point to the central role of dialogue in our research practice. This emphasis shifts the focus of collaboration from product to process. In our experience, the importance of communication remains insufficiently addressed in Dedoose, which constrains and brackets the dialogical element of collaboration and thus has a direct impact on data analysis. [32]

An analysis of our written exchanges during data analysis helps highlight: 1. how our collaborative process has been intersubjective and dialogic in nature, and 2. how attempts to collaboratively use a software platform for data analysis came into tension with such a dialogic approach. For instance, in our early use of Dedoose, we learned that we could not upload Word documents within which comments were embedded (a limitation also common in many other QDAS packages). This meant that we were unable to use the software to analyze our comments on one another's writings, which we deemed an important part of our data. Soon after we also realized that although all of us could access codes created by any one of us, we were unsure how to document who created what code and what the code meant to the person creating it. As we attempted to solve this problem, Peiwei suggested putting the coder's initials on each of the codes manually to track the analysis process. We also sought help from Dedoose technical support for a more convenient way to track our coding. Two suggestions we received from Dedoose were: 1. continue putting our initials next to the codes as there was no way in the software to document when and by whom codes on collaborative projects were created, and 2. use the memo function in Dedoose to write a quick note about our interpretation of each code (e-mail from Karen to the group, July 9, 2013). [33]

This example illustrates that, as a group, we were interested in collaborating on the coding process—in other words, we wanted to emphasize the collaborative nature of creating a shared understanding of the data. However, Dedoose did not allow us to do this in an intuitive way. Instead, each change in the Dedoose coding system needed to be supplemented with extensive discussions, over e-mail and via Skype conversation. Our e-mails from a little later further suggest how important the communicative practice was for us, as well as how limiting it felt to solely use the memo function in Dedoose:

July 10, 2013, Peiwei wrote: "For the coding, I think we still have quite few overlapping codes, particularly with the child codes. So I think writing memo can be helpful. Another thought I have is to have another Skype meeting to talk it through. Usually discussion can be the most efficient way to reconcile differences. What do you think?"

July 14, 2013, Karen wrote: "There are some codes that I think don't quite encompass what the excerpts are about and some codes that I think could be re-worded to be a bit clearer. I'm not sure how to best go about this unless we DO decide to go through everything together to achieve consensus. The more I think about this the more I wonder exactly how it might go." [34]

The e-mail exchange above illustrates our frustration in trying to engage in a joint learning process while conducting our analysis. Ultimately, we found that using the Dedoose software platform for collaborative analysis was more limiting than other approaches. Indeed, our communication outside of Dedoose, through e-mails and Skype conversations in particular, was most central in helping us refine our theoretical insights and thoughts. In other words, our intersubjective dialogue was extremely useful, while the actual codes generated in Dedoose primarily served as a reference for these discussions. In some ways, this turns the notion of codes as analysis product on its head, as the codes have functioned as a catalyst for continuing our collaborative process. [35]

5.2 Consensus formation by breaking a linear coding process

As discussed previously, because it centers on the code as research product, the process of coding in QDAS has a tendency to be linear and mechanistic. We argue that this process may flatten the rich meaning of the original data by fixing it on a monologic dimension, as a static and rather flat representation of meaning. In our study, we experienced the need to break the linear mode of coding in "bottleneck" moments and foster a more organic process of consensus formation. By "bottleneck" moments, we mean those moments when unsettled feelings about the current interpretive approach arise and the previous consensus needs to be reevaluated. This often signals that an alternative approach or an adjustment of the current approach is necessary. In contrast to conceptualizing validity through emphasis on the convergence of codes, our study reveals an opportunity to strengthen validity through dialogues as a way to counter the monologic approach of data analysis. [36]

For instance, to address one of our research questions, "How do graduate students conceptualize 'research' when they are starting an introductory research methods course?," we used students' responses to a short essay assignment as our primary data source. When identifying emerging themes through coding, an unsettled feeling grew among us as we noticed that there were threads inferred from the data that could not be easily captured by thematic analysis alone. For instance, many students saw research as something only "researchers" do, while positioning themselves as outsiders to research. We could have simply stated that students understood research as what experts do, which would stay on the content level, but how could we address the underlying feeling of alienation about research? This is one of the "bottleneck" moments that challenged us to collectively reflect upon the very limitations of our current approach. [37]

In a group meeting in November 2013, Pengfei raised the question of whether we should create a shared coding book and start to code the data using the same set of codes. Karen and Barbara both expressed some concerns about this approach. First, they worried that, once delving into coding, we would be so drawn into the detailed labeling and retrieval work that it could curtail our dynamic interactions, which had kept our analysis open to reflections and alternative interpretations. Although disagreements on codes could be raised and discussed, we would still work under a relatively closed and fixed interpretive structure. More importantly, they argued that a collective coding process of this type might fail to capture our holistic understanding of meaning. The discussion led to the decision that we should stick to a more dialogue-oriented approach at that stage of the analysis. [38]

In retrospect, we consider this discussion an important decision-making moment, which reaffirmed our methodological path and pushed us to make a significant shift in our approach to method, switching from thematic coding to pragmatic analysis. The latter enabled us to include the analyses of narrative or argumentative forms, tones in writing, audience and so on. From this perspective, we were able to examine the underlying tension between students' identity claims (e.g. outsider to the research community) and their feelings of alienation. The decision to resist the confinement of a linear coding mode configured in Dedoose thereby provided us with an opportunity to adjust our analytic strategies to re-align with our methodology. A bottleneck moment was thus transformed into a moment of reflection and consensus re-formation. [39]

5.3 Open to falsifiability

Many QDAS packages perpetuate the assumption that inconsistency among researchers in collaborative coding is a primary source of disagreements among researchers, and in turn, a threat to validity. Strategies such as calculating COHEN's Kappa are functions provided by QDAS packages to detect inconsistent coding and to identify disagreements among researchers. Although some software does acknowledge the potentially positive role of inconsistency, we still need a more articulate argument to explain why discussion about disagreement is also crucial in strengthening validity. In other words, a key issue here is not only being open to possible falsifiability, but also how we should practice our openness toward it. [40]

Our methodological theory offers a theoretical springboard for us to wrestle with this issue. By differentiating validity claims from truth claims, HABERMAS (1981) avoids the foundationalist position of treating certain claims as ultimately true. Instead, he suggests that we examine whether a claim is valid based on the intersubjective consensus formed among dialogue participants (ibid.). Since the dialogic process is always dynamic, claims are constantly subject to new reflections, challenges, and critiques. In this sense, disagreements among researchers should not be treated as a threat to validity, but rather as a constructive starting point for conversation and in-depth reflection that help to explicate, challenge, and transcend embedded validity criteria in speech acts and ultimately strengthen validity. [41]

Throughout our project, we made efforts to approach disagreements in this way. We began data analysis by independently analyzing the data collected from our own students. After this stage, we met and discussed general impressions about the data and next steps. Consensus did not form at once, and initially we were not even able to articulate our disagreement. Still, all of us agreed that our discussion was helpful in moving us toward a more comprehensive and meaningful understanding of the data. The deeper we delved into the data, the more clearly we could articulate our own positions and recognize differences among our interpretations. [42]

What we learned from our experience is that being critical and being supportive are equally important, and that the team needs to find a balance between the two. In our own experience, most of the important disagreements were not related to specific coding choices, but occurred at a more holistic level, regarding our general interpretative approach. When a disagreement emerged, we did not attempt to tackle it for the sake of solving the disagreement per se. We always created ample space to listen to and understand the team members' thoughts first. As we discovered, sometimes a minor disagreement cannot be solved without taking into consideration the whole interpretive approach. Therefore, extensive conversations about our disagreements always helped us better locate the underlying interpretive divergence. If we could not reach consensus at a given moment, the disagreement was usually resolved later on as we kept dialoging about the issue. [43]

5.4 Creating an egalitarian collaborative environment

HABERMAS (1981) suggests that egalitarian dialogue among all interlocutors is required to form free consensus, an important condition to strengthen the validity of a communicative action. We thus endeavored to pay particular attention to the power dynamic and construct an egalitarian conversational space in our collaborative study. In relation to QDAS, we noticed that the potential divide between those who could use the research software fluently and those who could not might contribute to unequal access to data and different levels of engagement in data analysis, which might then give rise to a certain power dynamic among researchers. Therefore, we strove to make sure that every collaborator was comfortable enough to use Dedoose. [44]

Initially, we were at different places in terms of our familiarity with Dedoose. Three of the team members could fluently use Dedoose during the preliminary analysis. The other member did not participate actively in the initial round of data analysis and when she joined in, she experienced a steep learning curve as well as some frustrations in navigating this software platform. This might have impeded her from equally participating in the collaboration. To mitigate the potential digital divide within the research team, we fostered a culture of collaborative learning of Dedoose as we made progress. The team member with less fluency in Dedoose sent out e-mails regarding the problems she had and other team members responded with detailed explanations and sometimes screen shot demonstrations. Similarly, team members also frequently exchanged strategies for, concerns with, and experiences related to using Dedoose. In this way, we treated QDAS as the material infrastructure and enabling condition that facilitated an egalitarian group dynamic. [45]

To summarize, we did not confine our analysis of validity in the Researching Research Project to validity as conceptualized within Dedoose itself, but focused on our research practice as it was facilitated and constrained by QDAS. Through creating reflective space at the intersection of the use of Dedoose, Habermasian critical research method, and the specific context of our collaborative teamwork, we found that Dedoose tends to reinforce a linear and mechanistic process of data analysis that emphasizes the development of shared codes. The software also perpetuates a positivist understanding of "consensus" in conceptualizing validity by endorsing the use of inter-rater reliability. With this in mind, our deliberate reflection allowed us to navigate data analysis while working both with and against the software. [46]

6. Conclusion

We began this article with a critical examination of the relationship between validity and QDAS in existing literature. This has led us to ask how the use of QDAS can strengthen or weaken the validity of a qualitative project guided by a specific methodological approach. We then identified the tendency in the literature to instrumentalize technology employed in data analysis. To mitigate the divide between theory and technology, we suggest shifting the analytic focus from the software itself to the research practice. The analytic concept of reflective space emerges from this shift at the intersection of methodological approach, built-in validity structure of QDAS, and specific research context. [47]

Using a critical action research project as an example, we discussed in detail how QDAS, together with its functions and embedded validity assumptions, shaped our collaborative data analysis, and posed particular challenges to the validity of our study. Developers of qualitative research software may benefit from considering the concept of reflective space, as it provides a new approach to thinking about how research software may better support qualitative studies and the production of valid knowledge. Under this new approach, instead of highlighting software's instrumentality and efficiency, software developers may want to: 1. be more aware of and acknowledge the methodology-related assumptions embedded in the structure of QDAS, and 2. refine research software's functions and platforms to better enable and document researchers' ongoing dialogues and reflection during data analysis. [48]

Finally, we want to emphasize the exploratory nature of this discussion and present it as an invitation to our readers for further dialogue. Instead of claiming that QDAS automatically strengthens validity, or conversely, is totally irrelevant to validity, we suggest eliminating the theory/technology divide that is prominent in current literature on QDAS and validity theories. This may, in turn, allow us to integrate QDAS into a more reflective methodological decision-making process. [49]

This research was supported by the Phase I & II Scholarships of Teaching and Learning, Indiana University Bloomington. We are grateful to Mirka KORO-LJUNGBERG and Jessica Nina LESTER who provided detailed and constructive feedback on an earlier version of this manuscript.

1) http://www.qsrinternational.com/product [Accessed: October 9, 2015].

2) Some other researchers have developed extensive discussions on the relationship between QDAS and various methodological approaches. Since several software packages, such as NVivo and ATLAS.ti, are modeled after grounded theory (EVERS & SILVER, 2014; RICHARDS, 2002), the earlier discussion about this issue revolved around whether methodological underpinnings of grounded theory in QDAS packages unavoidably excluded diverse approaches (COFFEY, HOLBROOK & ATKINSON, 1996; DAVIDSON & DI GREGORIO, 2011; LONKILA, 1995; MacMILLAN & KOENIG, 2004). Whereas some researchers made efforts to de-couple the connection between QDAS on the one hand, and grounded theory and coding strategy on the other (BARRY, 1998; BONG, 2002; LEE & FIELDING, 1996), a more recent advancement of the conversation was that a few researchers pioneered the integration of QDAS in studies explicitly guided by humanistic, phenomenological or post-humanist methodologies (DAVIDSON, 2012; GOBLE, AUSTIN, LARSEN, KREITZER & BRINTNELL, 2012). Although their exploration is admirable, we also notice that none of them have explicitly linked the discussion of QDAS and methodology with validity.

3) For more information about how to explore coding using query functions, see NVivo 11 for Windows online help: http://help-nv11.qsrinternational.com/desktop/procedures/run_a_coding_query.htm [Accessed: December 29, 2015].

4) NVivo 11 for Windows provides two ways to calculate inter-rater reliability: 1. to calculate the percentage of coding on which different researchers agree, and 2. to calculate the Kappa coefficient; see http://help-nv11.qsrinternational.com/desktop/procedures/run_a_coding_comparison_query.htm?rhsearch=Kappa&rhsyns=%20 [Accessed: December 29, 2015]. Dedoose online training center also provides the calculation of COHEN's Kappa coefficient to test the agreement of coding among different researchers; see http://www.dedoose.com/blog/?p=48 [Accessed: December 29, 2015].

Barry, Christine A. (1998). Choosing qualitative data analysis software: Atlas/ti and Nudist compared. Sociological Research Online, 3(3), http://www.socresonline.org.uk/3/3/4.html [Date of Access: March 29, 2016].

Bochner, Arthur P. (2000). Criteria against ourselves. Qualitative Inquiry, 6(2), 266-272.

Bong, Sharon A. (2002). Debunking myths in qualitative data analysis. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), Art. 10, http://nbn-resolving.de/urn:nbn:de:0114-fqs0202107 [Date of Access: February 11, 2016].

Bringer, Joy D.; Johnston, Lynne H. & Brackenridge, Celia H. (2004). Maximizing transparency in a doctoral thesis: The complexities of writing about the use of QSR*NVIVO within a grounded theory study. Qualitative Research, 4(2), 247-265.

Carspecken, Phil F. (1996). Critical ethnography in educational research: A theoretical and practical guide. New York: Routledge.

Coffey, Amanda; Holbrook, Beverley & Atkinson, Paul (1996). Qualitative data analysis: Technologies and representations. Sociological Research Online, 1(1), http://http-server.carleton.ca/~mflynnbu/internet_surveys/coffey.htm [Date of Access: March 29, 2016].

Creswell, John W. & Miller, Dana L. (2000). Determining validity in qualitative research. Theory into Practice, 39(3), 124-134.

Davidson, Judith (2012). The journal project: Qualitative computing and the technology/aesthetics divide in qualitative research. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(2), Art. 5, http://nbn-resolving.de/urn:nbn:de:0114-fqs1202152 [Date of Access: March 29, 2016].

Davidson, Judith & Di Gregorio, Silvana (2011). Qualitative research and technology: In the midst of a revolution. In Norman K. Denzin & Yvonna S. Lincoln (Eds.), Sage handbook of qualitative research (4th ed., pp.627-643). Thousand Oaks, CA: Sage.

Dennis, Barbara (2013). "Validity crisis" in qualitative research: Still? Movement toward a unified approach. In Barbara Dennis, Lucinda Carspecken & Phil F. Carspecken (Eds.), Qualitative research: A reader in philosophy, core concepts, and practice (pp.3-37). New York: Peter Lang.

Denzin, Norman K. (2008). The new paradigm dialogs and qualitative inquiry. International Journal of Qualitative Studies in Education, 21(4), 315-325.

Donmoyer, Robert (1996). Educational research in an era of paradigm proliferation: What is a journal editor to do? Educational Researcher, 25(2), 19-25.

Evers, Jeanine C. (2011). From the past into the future: How technological developments change our ways of data collection, transcription and analysis. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1), Art. 38, http://nbn-resolving.de/urn:nbn:de:0114-fqs1101381 [Date of Access: February 11, 2016].

Evers, Jeanine C. & Silver, Christina (2014). The First ATLAS.ti User Conference. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 15(1), Art. 19, http://nbn-resolving.de/urn:nbn:de:0114-fqs1401197 [Date of Access: March 29, 2016].

Gilbert, Linda S. (2002). Going the distance: "Closeness" in qualitative data analysis software. International Journal of Social Research Methodology, 5(3), 215-228.

Goble, Erika; Austin, Wendy; Larsen, Denise; Kreitzer, Linda & Brintnell, E. Sharon (2012). Habits of mind and the split-mind effect: When computer-assisted qualitative data analysis software is used in phenomenological research. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(2), Art. 2, http://nbn-resolving.de/urn:nbn:de:0114-fqs120227 [Date of Access: March 29, 2016].

Habermas, Jürgen (1981). The theory of communicative action. Volume I: Reason and the rationalization of society. Boston: Beacon Press.

Heidegger, Martin (1977 [1962]). The question concerning technology, and other essays. New York: Garland Publishing, Inc.

Hwang, Sungsoo (2008). Utilizing qualitative data analysis software: A review of Atlas.ti. Social Science Computer Review, 26(4), 519-527.

Kanno, Yasuko & Kangas, Sara E.N. (2014). "I'm not going to be, like, for the AP": English language learners' limited access to advanced college-preparatory courses in high school. American Educational Research Journal, 51(5), 848-878.

Kelle, Udo & Laurie, Heather (1995). Computer use in qualitative research and issues of validity. In Udo Kelle (Ed.), Computer-aided qualitative data analysis: Theory, methods and practice (pp.19-28). Thousand Oaks, CA: Sage.

Lather, Patti (1993). Fertile obsession: Validity after poststructuralism. Sociological Quarterly, 34(4), 673-693.

Lee, Ray & Fielding, Nigel (1996). Qualitative data analysis: Representations of a technology: A comment on Coffey, Holbrook and Atkinson. Sociological Research Online, 1(4), https://ideas.repec.org/a/sro/srosro/1996-4-1.html [Date of Access: March 29, 2016].

Lewis, John (2009). Redefining qualitative methods: Believability in the fifth moment. International Journal of Qualitative Methods, 8(2), 1-14, https://ejournals.library.ualberta.ca/index.php/IJQM/article/view/4408 [Date of Access: April 20, 2016].

Lincoln, Yvonna S. & Guba, Egon G. (2000). Paradigmatic controversies, contradictions, and emerging confluences. In Norman K. Denzin & Yvonna S. Lincoln (Eds.), Sage handbook of qualitative research (2nd ed., pp.163-188). Thousand Oaks, CA: Sage.

Lonkila, Markku (1995). Grounded theory as an emerging paradigm for computer-assisted qualitative data analysis. In Udo Kelle (Ed.), Computer-aided qualitative data analysis: Theory, methods and practice (pp.41-51). Thousand Oaks, CA: Sage.

MacMillan, Katie & Koenig, Thomas (2004). The wow factor: Preconceptions and expectations for data analysis software in qualitative research. Social Science Computer Review, 22(2), 179-186.

Richards, Tom (2002). An intellectual history of NUD*IST and NVivo. International Journal of Social Research Methodology, 5(3), 199-214.

Schwandt, Thomas A. (2000). Three epistemological stances for qualitative inquiry: Interpretivism, hermeneutics, and social constructionism. In Norman K. Denzin & Yvonna S. Lincoln (Eds.), Sage handbook of qualitative research (2nd ed., pp.189-214). Thousand Oaks, CA: Sage.

Sicora, Katie-Coral & Lieber, Eli (2013). A researcher's guide to successful teamwork, http://www.dedoose.com/_assets/pdf/a_researchers_guide_to_successful_teamwork.pdf [Date of Access: March 21, 2015].

Sin, Chih Hoong (2007). Using software to open up the "black box" of qualitative data analysis in evaluations: The experience of a multi-site team using NUD*IST Version 6. Evaluation, 13(1), 110-120.

Sinkovics, Rudolf R. & Penz, Elfriede (2011). Multilingual elite-interviews and software-based analysis. International Journal of Market Research, 53(5), 705-724.

Smyth, Robyn (2006). Exploring congruence between Habermasian philosophy, mixed-method research, and managing data using NVivo. International Journal of Qualitative Methods, 5(2), 131-145, https://ejournals.library.ualberta.ca/index.php/IJQM/article/view/4395 [Date of Access: April 20, 2016].

St. Pierre, Elizabeth A. (2011). Post qualitative research: The critique and the coming after. In Norman K. Denzin & Yvonna S. Lincoln (Eds.), Sage handbook of qualitative research (4th ed., pp.611-635). Thousand Oaks, CA: Sage.

St. Pierre, Elizabeth A. (2014). A brief and personal history of post qualitative research: Toward "post inquiry". Journal of Curriculum Theorizing, 30(2), 2-19.

Staller, Karen M. (2002). Musings of a skeptical software junkie and the HyperRESEARCH™ fix. Qualitative Social Work, 1(4), 473-487.

Tracy, Sarah J. (2010). Qualitative quality: Eight "big-tent" criteria for excellent qualitative research. Qualitative Inquiry, 16(10), 837-851.

Welsh, Elaine (2002). Dealing with data: Using NVivo in the qualitative data analysis process. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), Art. 26, http://nbn-resolving.de/urn:nbn:de:0114-fqs0202260 [Date of Access: February 11, 2016].

Zambrana, Ruth Enid; Ray, Rashawn; Espino, Michelle M.; Castro, Corinne; Cohen, Beth Douthirt & Eliason, Jennifer (2015). "Don't leave us behind": The importance of mentoring for underrepresented minority faculty. American Educational Research Journal, 52(1), 40-72.

Pengfei ZHAO, M.A., is a PhD candidate in the Inquiry Methodology Program at Indiana University, Bloomington. Her research takes three directions: philosophical foundations of critical qualitative research, digital technology and its implications for qualitative inquiry, and socialization and identity formation in the context of drastic social change.

Contact:

Pengfei Zhao

Department of Counseling and Educational Psychology

School of Education

Indiana University Bloomington

W.W.Wright Education Building

Bloomington, IN, 47405-1006, USA

Tel: 001-203-645-3112

E-mail: pzhao@indiana.edu

Peiwei LI, Ph.D., is an assistant professor and the research coordinator of the Counseling Psychology Psy.D. Program at Springfield College, Massachusetts. As a methodologist, her primary interests are in the area of critical qualitative inquiry methodology as informed by critical theory. As a counseling psychologist, she is passionate about culture, diversity, and social justice in both her research and clinical practice.

Contact:

Peiwei Li

Counseling Psychology Psy.D. Program

Locklin 322, Springfield College

263 Alden St.

Springfield, MA, 01104 USA

Tel: 001-413-748-3265

E-mail: pli@springfieldcollege.edu

URL: http://springfield.edu/academic-programs/psychology/doctor-of-psychology-psyd-program-in-counseling-psychology/peiwei-li

Karen ROSS, Ph.D., is an assistant professor of conflict resolution at the University of Massachusetts, Boston. Her primary interests lie at the conceptual and methodological intersection of education, dialogue, peacebuilding, and social change. In particular, she is concerned with questions about how (and by whom) "impact" can and should be defined and measured.

Contact:

Karen Ross

Department of Conflict Resolution, Human Security & Global Governance

McCormack Graduate School of Policy and Global Studies

University of Massachusetts Boston

100 Morrissey Blvd.

Boston, MA 02125-3393 USA

Tel: 001-617-287-3568

E-mail: karen.ross@umb.edu

URL: http://www.karen-ross.net

Barbara DENNIS, Ph.D., is an associate professor of qualitative inquiry in the Inquiry Methodology Program at Indiana University. She has broad interests in the critical theory and methodological practices intentionally focused on eliminating sexism and racism from cultural ways of knowing and being.

Contact:

Barbara Dennis

Department of Counseling and Educational Psychology

School of Education

Indiana University Bloomington

W.W.Wright Education Building

Bloomington, IN, 47405-1006, USA

Tel: 001-812-856-8142

E-mail: bkdennis@indiana.edu

URL: http://education.indiana.edu/dotnetforms/Profile.aspx?u=bkdennis

Zhao, Pengfei; Li, Peiwei; Ross, Karen & Dennis, Barbara (2016). Methodological Tool or Methodology? Beyond Instrumentality and Efficiency with Qualitative Data Analysis Software [49 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 17(2), Art. 16, http://nbn-resolving.de/urn:nbn:de:0114-fqs1602160.