Volume 20, No. 3, Art. 1 – September 2019

The Poor and Embarrassing Cousin to the Gentrified Quantitative Academics: What Determines the Sample Size in Qualitative Interview-Based Organization Studies?

Eneli Kindsiko & Helen Poltimäe

Abstract: It is essential for scholars to reflect on their research practices and critically assess scientific rigor. In the current article, we aim to critically review the state of qualitative research in organization studies by focusing on trends in sample sizes. Organizational scholars presenting qualitative, interview-based manuscripts tend to face the ongoing challenge of how many interviews are enough. The research reported in this article, covering 11 years and investigating 855 interview-based studies, provides empirical evidence that, across the years, the number of interviews seems to be rather high. The total sample included studies with more than 100 interviews (7% of the sample), more than 50 interviews (34%) and studies with more than 30 interviews (62%). Furthermore, when studies start to increase in sample size, they often do so at the expense of homogeneity across respondents. We conclude by giving some possible explanations for why we are facing such a situation today.

Key words: qualitative research; interview; saturation point; organization studies; sample size

Table of Contents

1. Introduction

2. Method: A Reflection on 855 Qualitative Studies

2.1 Problem formulation

2.2 Data collection stage

2.3 Data evaluation stage

2.4 Analysis and interpretation stage

3. Results

3.1 Sample sizes: Have they grown?

3.2 What Factors Contribute to the Number of Interviews Used in Qualitative Studies?

3.3 Composition of the sample: How heterogeneous can it be?

3.4 Saturation point: How to justify it?

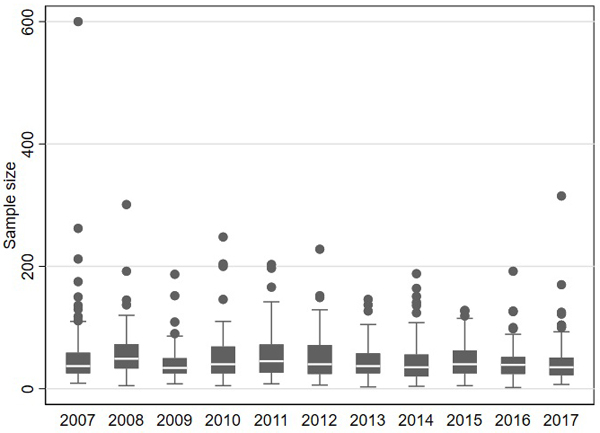

3.5 Country (U.S. vs. Europe): How much do research traditions differ?

3.6 Demands from the grant provider: How large a sample is needed to get my research funded?

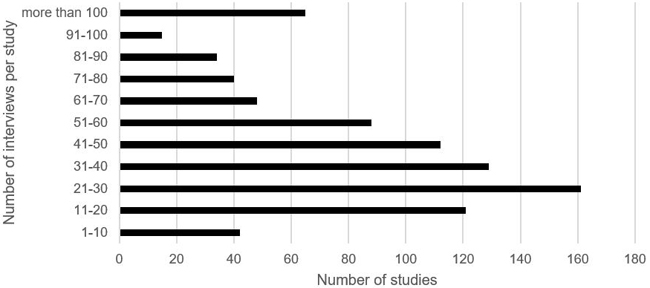

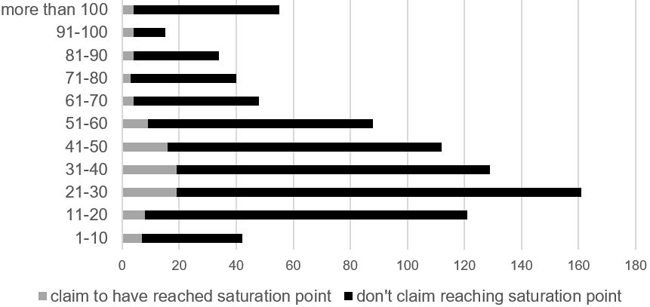

4. Discussion and Concluding Thoughts

1. Introduction

Many authors have addressed the difficulties of designing and conducting qualitative research (GEPHART, 2004; GIOIA, CORLEY & HAMILTON, 2012), writing it up (JONSEN, FENDT & POINT, 2018; SANDELOWSKI, 1998), and ultimately, getting their paper published (BLUHM, HARMAN, LEE & MITCHELL, 2011; PRATT, 2008, 2009). Organizational outlets are thus well served with treatises explaining why qualitative research is a "high-risk, high-time commitment research activity" (MARSHALL, CARDON, PODDAR & FONTENOT, 2013, p.11). With this article we aim to contribute to a growing stream of scholarly literature that has raised concern over the state of qualitative research in organization studies, namely by signposting how difficult it is to get a qualitative paper published. However, we take a slightly different and critically reflective approach: we seek to reveal how the practice of undertaking qualitative research has changed, and continues to change, under numerous influences brought on by changes in the research environment (e.g., reliance on funding and tough competition with quantitative studies). [1]

GEPHART, an experienced reviewer of qualitative research for the Academy of Management Journal (AMJ), has revealed how a large share of the qualitative research submitted to leading organizational outlets seems to represent positivist epistemological standpoints and to "mirror quantitative research techniques" (2004, p.456). One visible sign of this mimicking is the reporting of large and rather heterogeneous samples in qualitative studies. However, many of these claims are based on anecdotal evidence, and only a few articles (e.g., MARSHALL et al., 2013; MASON, 2010) actually document the dynamics in sample sizes in recent years. This brings us to our first research question: What trends exist in terms of sample size in studies applying qualitative interviews as a method? [2]

The research environment surrounding qualitative research continues to be dominated by the quantitative paradigm. In fact, one of the reasons why organization studies tend to overuse quantitative research is that a sizeable share of researchers, and also reviewers, are well trained in positivist standpoints and quantitative research, and less aware of the interpretive paradigm (GEPHART, 2004), which was originally the core of qualitative research. The motivation behind this study is to draw scholarly attention back to our epistemological understanding of what qualitative research is about. Why should we care about growing sample sizes in qualitative studies? A large and especially heterogeneous sample does not allow deep analysis, which is the essence of qualitative inquiry (SANDELOWSKI, 1995), especially when founded on a constructivist or interpretive paradigm. Qualitative researchers ought to explain how homogeneous their sample is (TROTTER, 2012), and how well it allows them to investigate their research problem in depth (SANDELOWSKI, 1995). An overly large sample might raise the question of how much time was spent with each respondent and whether the allocated contact time was in fact sufficient to cover the research problem in question. Our motivation for investigating trends in sample size in organizational outlets stems from years-long exposure to articles that describe the specifics of their sample in a vocabulary, and with assumptions, more common to the quantitative paradigm. To illustrate, one qualitative study by SCHWARTZ and McCANN (2007) reported close to 600 semi-structured interviews (a joint sample that pools the samples of three separate projects), which exceeds the recommendations made by qualitative scholars roughly tenfold. SANDELOWSKI (1995) cautioned that more than 50 interviews could hamper the depth and quality of the analysis. The authors of this enormous study justify their large sample using a vocabulary and rationale more common to the quantitative paradigm:

"... the combination provides us with a larger sample and greater diversity of cases, providing not only a diversity of regions, industries and types of firms, but, because of relative differences in the focus of the three studies, additional variables which increase our appreciation of management transformations" (SCHWARTZ & McCANN, 2007, p.1530). [3]

Previous empirical investigations of sample size in qualitative interview-based studies, including work published in the field of information systems, have led to claims that the number of interviews correlates with many subjective determinants, such as the journal of publication (BLUHM et al., 2011) or the geographical region, that is, whether the outlet is European or from the U.S. (MARSHALL et al., 2013). In addition to the abovementioned factors, we also test whether factors like funding or the composition of a sample are related to the sample sizes used by qualitative researchers. From this starting point, we arrive at our second research question: What factors contribute to the number of interviews used in qualitative studies? [4]

Through this article we contribute to organization research in two ways. First, we reflect on the changing nature of research practice—highlighting the need to acknowledge how qualitative research is actually undertaken. Existing studies that have taken a similar approach rely on much smaller datasets (BLUHM et al., 2011, who studied 198 qualitative articles from three U.S. and two European management journals), are based on the analysis of a single journal (STRANG & SILER, 2017 investigated the structure of 350 qualitative research articles published in Administrative Science Quarterly between 1956 and 2008), or have focused on a rather narrow population (MASON, 2010, who analyzed sample size in 560 dissertations utilizing interviews). Second, we seek to offer some explanations for why we are facing the problem of quantifying the qualitative, and how the changes in the research environment might have influenced this. We believe our research questions signpost an important gap in the literature because they urge qualitative scholars to reflect on their research practices as they tend to change or modify in reaction to changes in the academic research environment. [5]

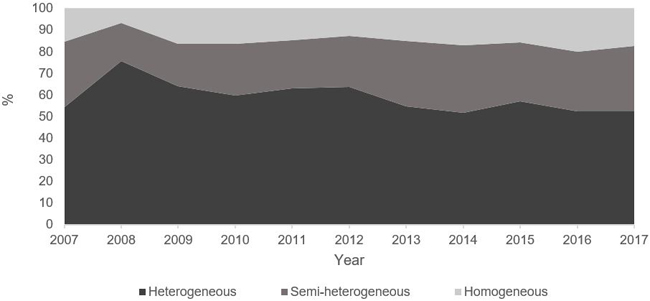

In summary, in this article we report on our investigation into changes in sample size in interview-based qualitative organization studies. We seek to map the trends and tendencies in sample sizes across 11 years, and the possible factors explaining sample size. We begin with a description of the methodology employed in our research, that is, how we built up the meta-analysis and systematized existing qualitative interview-based studies (Section 2). This is followed by the results of our review of interview-based studies (Section 3), ending with a discussion and concluding remarks (Section 4). [6]

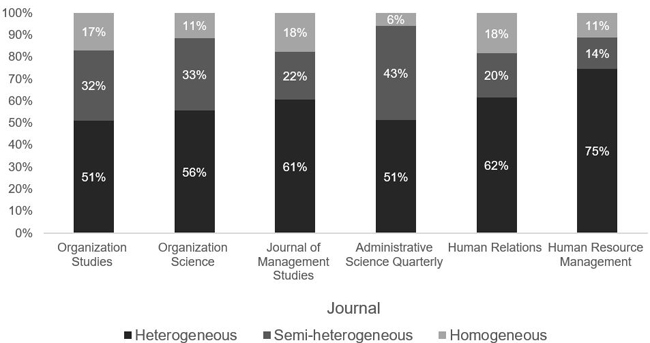

2. Method: A Reflection on 855 Qualitative Studies

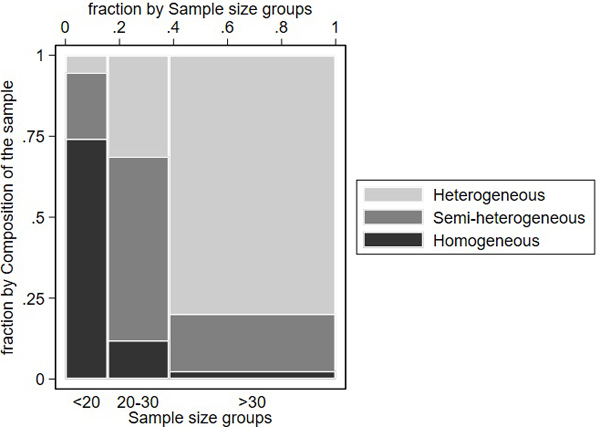

There is a growing need for systematic research synthesis alongside the continuous expansion of empirical research papers. We have applied a meta-analysis to investigate the state of qualitative interview-based studies. The aim of a meta-analysis is "to synthesize existing empirical evidence and draw science-based recommendations for practice" (AGUINIS, PIERCE, BOSCO, DALTON & DALTON, 2011, p.306), which makes it highly suitable for conducting "quantitative literature reviews" (ibid.). We are not aiming to provide recommendations, but wish to draw attention to how the practice of conducting and presenting qualitative research seems to have changed. We have adopted the five stages of research synthesis proposed by COOPER (1982, 2007) for conducting and presenting meta-analyses: 1. problem formulation; 2. data collection; 3. data evaluation; 4. analysis and interpretation; and 5. presenting the results. As a modification, we combined stages 4 and 5 to provide a more synthesized analysis. [7]

2.1 Problem formulation

During the problem formulation stage, we selected our variables so that relevant studies could be found. The key variable in the current study is the number of interviews conducted. All other variables serve insofar as they help to explain possible changes in sample size across the years of the study. The data sought during the second round focused on specific elements: factors that describe the changes in samples and facilitate our understanding of them. We decided to analyze elements that have also been highlighted by previous scholars. In addition to the size of the sample (number of interviews conducted), year (showing the dynamics in sample size), and the country of origin of the journal of publication (BLUHM et al., 2011; MARSHALL et al., 2013), we also looked at the composition of the sample (GUEST, BUNCE & JOHNSON, 2006), whether an article claimed to have reached saturation point (MASON, 2010; O'REILLY & PARKER, 2013; TUCKETT, 2004), sample share per researcher, and finally, whether the study acknowledged having benefited from funding (GREEN & THOROGOOD, 2004; MASON, 2010). [8]

Another important decision during the problem formulation stage was to narrow the scope by area of study. It has been acknowledged that research practice tends to be paradigmatically anchored to the research field (BURRELL & MORGAN, 1979). Therefore, a meta-analysis should focus on a rather narrow research field in order to acknowledge the philosophy of science, namely the epistemological, ontological and methodological assumptions that ground the discipline. For example, depending on the discipline, sampling frameworks, but also the typical or expected sample size, may vary remarkably (ISRAEL, 1992; TROTTER, 2012). Due to our background, we focus on organization studies. Next, we moved to the data collection stage, where one identifies suitable studies to be included in the meta-analysis (COOPER, 2007). [9]

2.2 Data collection stage

The data collection stage started by setting up the criteria for selecting the journals that address organizational studies. First, we selected journals from the Financial Times' Top 50 list, which the Financial Times uses in compiling its research rank and, in turn, its ranking of the world's 100 best full-time MBA programs (see ORTMANS, 2018). The list serves as a guide both to scholars (e.g., JONSEN et al., 2018, who based their study on selected journals from the Financial Times list) and to students, as publishing in "top-tier North American journals remains for many colleges and universities one of the key metrics of achievement" (PRATT, 2008, p.482). In addition, top journals (e.g., in the field of organization studies) advertise themselves by mentioning that they are "included in the Financial Times Top 50 journals list"1). [10]

Second, to have a reasonable number of articles from the fields of organizational studies most likely to entail qualitative research, only journals covering the subject areas "management," "organizational behavior," and "human resources" were investigated. This left us with 14 potentially suitable journals out of 50 (listed below in Table 1 with their SCImago Journal Rank [SJR]). By origin, North American journals are strongly overrepresented among these 14 top-tier organizational and management journals; this is a limitation we consciously accept, as the Financial Times' Top 50 is itself dominated by U.S.-based journals.

|

Journal |

Country |

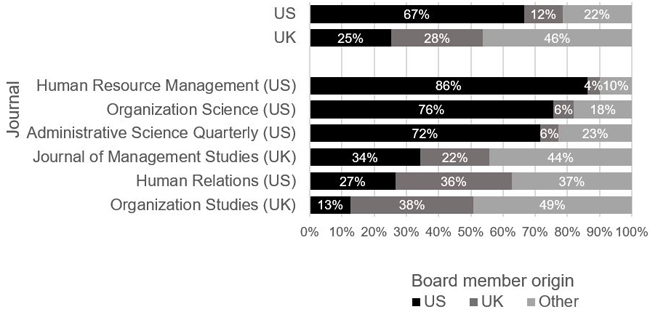

SJR 20172) |

H Index3) |

|

Administrative Science Quarterly |

United States |

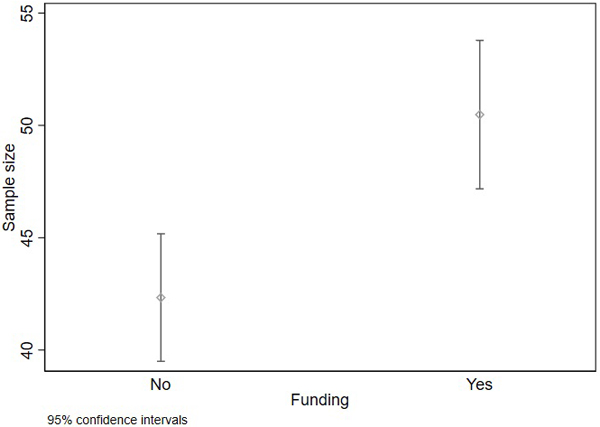

10.19 |

158 |

|

Academy of Management Journal |

United States |

8.54 |

266 |

|

Strategic Management Journal |

United States |

8.01 |

232 |

|

Academy of Management Review |

United States |

7.88 |

229 |

|

Journal of Management |

United States |

6.46 |

176 |

|

Organization Science |

United States |

5.5 |

196 |

|

Journal of Applied Psychology |

United States |

4.69 |

235 |

|

Journal of Management Studies |

United Kingdom |

3.8 |

145 |

|

Human Relations |

United States |

2.2 |

105 |

|

Organization Studies |

United Kingdom |

2.02 |

120 |

|

Organizational Behavior and Human Decision Processes |

United States |

1.99 |

122 |

|

MIT Sloan Management Review |

United States |

1.82 |

85 |

|

Human Resource Management |

United States |

1.31 |

73 |

|

Harvard Business Review |

United States |

0.3 |

154 |

Table 1: Initial list of top journals to be reviewed for the current study [11]

Third, the initial list was filtered by eliminating journals that do not have extensive empirical research articles (e.g., MIT Sloan Management Review, Harvard Business Review), comprise a large share of theoretical/conceptual articles (e.g., Academy of Management Review), or are largely focused on quantitative approaches and statistics (e.g., Journal of Applied Psychology, but also Strategic Management Journal and Journal of Management). Fourth, our choice of journals was restricted by whether there was full access to the journals (this eliminated the Academy of Management Journal from our list). The final list of journals selected for review is shown in Table 2.

| Journal | Country | SJR 2017 | H Index |
|---|---|---|---|
| Administrative Science Quarterly | United States | 10.19 | 158 |
| Journal of Management Studies | United Kingdom | 3.8 | 145 |
| Organization Science | United States | 5.5 | 196 |
| Organization Studies | United Kingdom | 2.02 | 120 |
| Human Relations | United States | 2.2 | 105 |
| Human Resource Management | United States | 1.31 | 73 |

Table 2: Final list of top journals selected for review in the current study [12]

For all six journals, a period of 11 years (2007–2017) was examined in detail. Focusing on a rather long period allows us not only to gain a wide overview, but also accounts for any changes among the editorial boards and reveals possible trends in organization studies. See Table 3 for details about our sample.

Table 3: Overview of the dataset: Research articles entailing interview-based studies between 2007 and 2017, across six journals (provided as a separate PDF file). [13]

2.3 Data evaluation stage

After collecting the studies, we moved to the data evaluation stage, where we critically assessed which studies to include and which to exclude. Table 3 shows the number of articles that were examined during our analysis. Across the six journals, a total of 4,139 articles were published in the period 2007–2017. Our first screening involved filtering each journal using the keyword "interview," which returned 2,105 of the 4,139 articles. During the second round, we examined the 2,105 articles one by one. We excluded mixed studies (ones that mix qualitative and quantitative approaches), focusing only on purely qualitative studies. In mixed studies, interviews are often used as input for a large-scale quantitative survey, or as an explanatory tool, where interviews with key informants help to explain the (peculiar) patterns revealed by the quantitative study. In addition, the database comprises only individual semi-structured or unstructured interviews, not group or focus group interviews. Only face-to-face, telephone or Skype-mediated interviews were taken into account. Lastly, we did not include articles that did not report their sample size precisely and gave only a range. The second round left us with our final sample: 855 articles that could be considered fit for our purpose in a meta-analysis. [14]
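
The two screening rounds described above can be read as a simple filter. The following Python sketch is only an illustration of that logic: the `Article` record, its field names and the category labels are hypothetical and not part of the original dataset, while the three counts at the end are the ones reported in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Article:
    """Hypothetical coding record for one screened article."""
    keyword_hit: bool             # first round: matched the keyword "interview"
    design: str                   # "qualitative", "mixed" or "quantitative"
    interview_format: str         # "individual", "group" or "focus_group"
    medium: str                   # "face-to-face", "telephone", "skype", ...
    n_interviews: Optional[int]   # None when only a range was reported


ALLOWED_MEDIA = {"face-to-face", "telephone", "skype"}


def passes_second_round(a: Article) -> bool:
    """Apply the exclusion criteria listed in the text to a single article."""
    return (
        a.keyword_hit
        and a.design == "qualitative"           # mixed studies are excluded
        and a.interview_format == "individual"  # no group or focus group interviews
        and a.medium in ALLOWED_MEDIA
        and a.n_interviews is not None          # an exact sample size is required
    )


def share(part: int, total: int) -> float:
    """Percentage of `total` represented by `part`, rounded to one decimal."""
    return round(100 * part / total, 1)


# Counts reported in the text for the six journals, 2007-2017.
published, keyword_hits, final_sample = 4139, 2105, 855
print("keyword hits among published:", share(keyword_hits, published), "%")
print("retained among keyword hits:", share(final_sample, keyword_hits), "%")
print("retained among published:", share(final_sample, published), "%")
```

Read this way, roughly half of all published articles mention interviews, and about one in five ends up in the final sample.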

2.4 Analysis and interpretation stage

The method of analysis applied in this article involved a means comparison: we analyze whether the average sample size differs on the basis of the variables mentioned above (e.g., sample composition, saturation point, journal, etc.). If, based on the variable, there are two groups, a t-test is applied; in the case of three or more groups, an analysis of variance (ANOVA) is applied. To facilitate this, some outliers have been removed (see Section 3), and to check the robustness a non-parametric median-test has been run, which provided similar results. [15]
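
As an illustration of this means comparison, the sketch below recomputes test statistics from the group summaries (number of papers, mean, standard deviation) reported in Table 4. It rests on assumptions that the text does not state: a pooled-variance (Student's) t-test for two groups, a classical one-way ANOVA for three or more groups, and a normal approximation for the two-sided p-value, which is only reasonable because the degrees of freedom are large. The function names are ours.

```python
from math import sqrt
from statistics import NormalDist
from typing import List, Tuple

Group = Tuple[int, float, float]  # (number of papers, mean, standard deviation)


def pooled_t(a: Group, b: Group) -> Tuple[float, int]:
    """Student's t statistic (equal variances assumed) and degrees of freedom."""
    (n1, m1, s1), (n2, m2, s2) = a, b
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    return (m1 - m2) / sqrt(pooled_var * (1 / n1 + 1 / n2)), df


def one_way_f(groups: List[Group]) -> Tuple[float, int, int]:
    """One-way ANOVA F statistic with between- and within-group df."""
    k = len(groups)
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * s ** 2 for n, _, s in groups)
    f = (ss_between / (k - 1)) / (ss_within / (n_total - k))
    return f, k - 1, n_total - k


def approx_p_from_t(t: float) -> float:
    """Two-sided p-value via the normal approximation (assumes large df)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


# Group summaries as reported in Table 4 (845 studies after outlier removal).
saturation = [(748, 46.3, 32.7), (97, 43.7, 27.5)]
composition = [(134, 17.7, 14.8), (228, 35.1, 21.5), (483, 59.0, 32.9)]

t_stat, t_df = pooled_t(*saturation)
f_stat, df_between, df_within = one_way_f(composition)
print(f"saturation: t({t_df}) = {t_stat:.2f}, approx. p = {approx_p_from_t(t_stat):.3f}")
print(f"composition: F({df_between}, {df_within}) = {f_stat:.1f}")
```

Under these assumptions, the saturation comparison should land close to the significance value reported in Table 4, while the composition comparison yields a very large F statistic. The non-parametric median test used as a robustness check needs the raw interview counts and is therefore not reproduced here.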

An important remark about our research methodology is that we initially included sampling strategy in the list of aspects to be analyzed, but removed it soon after the initial analysis. The reason was that researchers very rarely mentioned what sampling strategy they applied. This might be a field-specific practice, or a general lack of attention to this aspect. For example, if we look at the articles from Organization Studies for 2017, the year with the highest number of articles (57), 42 articles out of 57 (74%) do not mention their sampling strategy. In some rare cases, the reader might guess the sampling strategy, but even with an inter-rater reliability approach (i.e., having multiple researchers independently assess what the sampling strategy employed by the study might be), we would not know for certain how the authors of the study actually designed their sample. Since only a small percentage of studies mention the sampling strategy, we are not able to make statistically credible comparisons between sample size and sampling strategy, and we therefore saw no rationale for including sampling strategy among the aspects to be analyzed. A detailed overview of our results, including comparisons with previous findings and critical remarks about the state of qualitative research, is given in Section 3. [16]

3. Results

3.1 Sample sizes: Have they grown?

Of the 855 studies analyzed across the period of observation, 2008 has the smallest number of papers with qualitative interviews: 45 papers were identified according to the search criteria defined above. In general, the number of papers has increased over time: in 2009–2010 it was around 60, and by 2016 it had reached more than 100 (see Table 3). Turning to the number of interviews conducted in these papers (from here on, also called "sample size"), the median sample size has been relatively stable over the observed period, at around 30–50 interviews per study, as shown by the line inside each box in Figure 1. However, there are several exceptionally large values for sample size (i.e., outliers), shown as points on the boxplot. These lead to higher mean values, ranging from 40 to 60 across the years. Figure 1 shows that the largest sample size was 600 in 2007, which is an extremely high number of interviews for a single study. The proportion of studies with very many interviews is consistently high during the observed period: 62% of the total sample reported more than 30 interviews, 34% more than 50 interviews and 7% more than 100 interviews. One possible explanation is that the current study examined only top-level journals, where the expectations of the quality of interview-based studies are high. In support of this, the vast majority of the large interview-based studies were either longitudinal studies with repeated interview cycles held in the same place or studies in which various research groups conducted interviews in several countries (often the case in grant-funded studies). This in turn provides food for thought about the impact of project-based (grant-based) studies on qualitative research.

Figure 1: Boxplot for sample size (number of interviews conducted per study) by years [17]
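
Why a handful of very large studies raises the mean while the median barely moves can be shown with a few lines of Python. The interview counts below are hypothetical and were chosen only for illustration. The 1.5 × IQR fence is the common boxplot convention and an assumption on our part, since the text does not state which rule was used to draw the outlier points.

```python
from statistics import mean, median, quantiles
from typing import List


def tukey_outliers(values: List[int], k: float = 1.5) -> List[int]:
    """Values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]


# Hypothetical interview counts for one publication year (not from the dataset).
typical = [12, 18, 25, 30, 34, 40, 45, 52, 60]
with_outlier = typical + [600]

for label, sample in (("typical", typical), ("with outlier", with_outlier)):
    print(
        f"{label}: median = {median(sample)}, "
        f"mean = {mean(sample):.1f}, outliers = {tukey_outliers(sample)}"
    )
```

A single study with 600 interviews shifts the median by only a few interviews but more than doubles the mean, which is why the boxplot medians stay stable while the yearly means drift upward.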

As VAN MAANEN reminds us, design and analysis of qualitative research often go hand-in-hand, and demand "highly contextualized individual judgements" (1998, p.xi) from the researchers. Considering the amount of time that in-depth analysis and the administration of qualitative data demand, authors like BODDY (2016) or SANDELOWSKI (1995) suggest that a sample of 30 to 50 in-depth interviews is already large for a qualitative study. The outliers in our findings exceed these recommended manageable sizes by 10 to 20 times. Yet, as outliers are present across the whole period of observation, we can expect this to be a new normal in qualitative studies. [18]

As can be seen in Figure 2, the most frequent classes of sample size are 21–30 (19% of studies), 31–40 (15%) and 11–20 (14%). In total, the majority of the studies have used 11–60 interviews (72%).

Figure 2: Distribution of studies by sample size [19]
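
The class shares reported for Figure 2 follow from grouping each study's interview count into classes of ten. A minimal sketch of that grouping is given below; the class width of ten matches the classes named in the text, whereas the handling of very large studies (here simply further classes of ten) and the example counts are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, List


def size_class(n_interviews: int, width: int = 10) -> str:
    """Label of the sample-size class, e.g. 21-30 for 21 to 30 interviews."""
    if n_interviews < 1:
        raise ValueError("a study needs at least one interview")
    lower = ((n_interviews - 1) // width) * width + 1
    return f"{lower}-{lower + width - 1}"


def class_shares(sizes: List[int]) -> Dict[str, float]:
    """Percentage of studies per class, ordered by the lower class bound."""
    counts = Counter(size_class(n) for n in sizes)
    ordered = sorted(counts, key=lambda label: int(label.split("-")[0]))
    return {label: 100 * counts[label] / len(sizes) for label in ordered}


# Hypothetical interview counts (not from the dataset).
example = [5, 15, 25, 28, 35]
for label, pct in class_shares(example).items():
    print(f"{label}: {pct:.0f}%")
```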

Our investigation of articles revealed a rather interesting trend in publications. Studies often draw on several separate research projects conducted over a longer period and combine their samples for a specific publication. A good example is SCHWARTZ and McCANN (2007), who report close to 600 semi-structured interviews gathered in three research projects carried out between 2000 and 2006. In addition, SWART and KINNIE (2014) draw on two research projects: their "roof-article" published in Human Resource Management is based on two projects with 150 and 38 interviews, respectively, covering a rather heterogeneous array of respondents. CHROBOT-MASON, RUDERMAN, WEBER and ERNST (2009) based their findings on a combined dataset from two separate studies that involved 134 interviews. Lastly, KJÆRGAARD, MORSING and RAVASI (2011, p.519) explain how their grounded theory "study started as two separate research projects, which later converged into a joint investigation," where they merged two databases so that the data gathered between 1990 and 2000 comprised 203 interviews (109 interviews conducted between 1990 and 1993, and 94 interviews collected between 1999 and 2000). The studies highlighted here represent only a small fragment of the whole list of studies that combined samples from different research projects. In summary, to answer the question of whether sample sizes have grown over time: they have not, but in every year a remarkable share of studies report a large sample size. To limit the impact of outliers on the analyses that follow, we removed studies with more than 200 interviews (10 studies altogether), which leaves 845 studies under analysis. [20]

3.2 What Factors Contribute to the Number of Interviews Used in Qualitative Studies?

The first question that comes to mind is whether a bigger research group (i.e., more authors) goes along with a bigger sample. As large research projects are inevitably conducted by large research groups, we might assume that papers published by these groups have a longer list of authors, so that the number of interviews conducted per researcher remains reasonable. It is therefore relevant to ask whether large samples are also collected by larger groups of researchers. Our data do not support this: the mean sample size does not differ significantly by number of authors (Table 4). [21]

The literature reveals how research disciplines vary remarkably depending on how many participants of the research team gain authorship when the research results are published. For example, lab-based sciences (e.g., natural sciences, physics, biomedicine, etc.) tend to practice a collective research culture, where it is a common practice to "include all the names of participants in the laboratory research group" (LIBERMAN & WOLF, 1998, p.245). BANDYOPADHYAY (2001, p.146) found that across disciplines, the share of papers with multiple authors in physics is 62.24%, in mechanical engineering 36.6%, in mathematics 36.3%, in philosophy 12.3%, and only 3.85% in political science. Therefore, the number of authors may not be connected to the reported sample size.

| | Number of papers | Mean number of interviews | SD of number of interviews | Sig. value of test |
|---|---|---|---|---|
| Authors | | | | 0.218 |
| 1 author | 191 | 45.4 | 31.4 | |
| 2 authors | 337 | 44.1 | 29.4 | |
| 3 or more authors | 317 | 48.4 | 35.2 | |
| Journal | | | | 0.000 |
| Organization Studies (UK) | 280 | 42.5 | 30.7 | |
| Organization Science (US) | 131 | 50.8 | 32.5 | |
| Journal of Management Studies (UK) | 100 | 49.3 | 36.3 | |
| Administrative Science Quarterly (US) | 35 | 68.4 | 34.3 | |
| Human Relations (US) | 238 | 41.3 | 27.1 | |
| Human Resource Management (US) | 61 | 52.1 | 39.6 | |
| Composition of the sample | | | | 0.000 |
| Homogeneous | 134 | 17.7 | 14.8 | |
| Semi-heterogeneous | 228 | 35.1 | 21.5 | |
| Heterogeneous | 483 | 59.0 | 32.9 | |
| Saturation point | | | | 0.455 |
| Not mentioned | 748 | 46.3 | 32.7 | |
| Mentioned | 97 | 43.7 | 27.5 | |
| Country | | | | 0.162 |
| United States | 465 | 47.4 | 31.9 | |
| United Kingdom | 380 | 44.3 | 32.3 | |
| Funding | | | | 0.000 |
| Not mentioned | 465 | 42.3 | 31.2 | |
| Mentioned | 380 | 50.5 | 32.8 | |

Table 4: Difference in mean sample size by groups4) [22]

Of the variables we tested, the number of authors, the mention of saturation point and the country of origin of the journal were not related to sample size: the mean number of interviews does not differ significantly between these groups (Table 4). However, the mean number of interviews did differ according to the composition of the sample, the journal and funding. These findings are discussed further below. [23]

3.3 Composition of the sample: How heterogeneous can it be?

According to FUSCH and NESS (2015, p.1409), "there is no one-size-fits-all method to reach data saturation," and "this is because study designs are not universal." We agree with this claim; yet the current empirical study can highlight commonalities in how researchers conduct interview-based research. Studies tend to cluster according to their sample composition. According to ROBINSON (2014, p.26), the degree of heterogeneity can be determined by various parameters, such as "demographic homogeneity, geographical homogeneity, physical homogeneity, psychological homogeneity or life history homogeneity." We focus on demographic homogeneity: "Homogeneity imparted by a demographic commonality such as a specific age range, gender, ethnic or socio-economic group" (p.28). [24]

By investigating in detail the composition of the sample for each of the 855 qualitative interview-based studies, based on their socio-economic background, we could divide them into three groups: studies with heterogeneous, semi-heterogeneous or homogeneous samples (Table 5).

| Type of sample | Description | An exemplar |
|---|---|---|
| Heterogeneous sample | A sample where interviewees cover a wide array of individuals from various positions and companies (in most cases also from different countries and sectors). | A study illustrating this case is FARNDALE et al. (2010), where a total of 248 people from numerous sectors, job titles, and 19 countries were interviewed. |
| Semi-heterogeneous sample | A sample where interviewees cover individuals from various positions, but all from the same company or branch of industry; or, for example, all respondents are from different companies but from the same occupation or the same status of employment. | An illustrative study of a semi-heterogeneous sample is NEELEY (2013), a study that comprised 41 respondents from various jobs in one French high-tech company to investigate how workers experience and express status loss in reference to their English fluency level. |
| Homogeneous sample | A sample where interviewees cover individuals from the same and rather narrow position, and preferably also from the same company or equivalent. | AUDEBRAND and BARROS (2017, p.1) investigated "co-operatives successful battle against corporate dominance in the Québec funeral industry." For this, they interviewed 12 managers (out of a possible 30) from funeral co-operatives in the province of Québec, Canada. |

Table 5: Typology of samples [25]

Figure 3 provides evidence that interview-based studies across the 11 years are predominantly based on heterogeneous samples: depending on the year, heterogeneous samples make up 52%–76% of all samples. This implies that qualitative studies in leading journals tend to be based on samples where interviewees cover a wide array of individuals from various positions and companies, in most cases also from different countries and sectors.

Figure 3: Distribution of studies by composition of sample and year [26]

In addition, when we zoom in to the journal level (see Figure 4), Human Resource Management has been the scholarly outlet with the greatest share of heterogeneous samples. In contrast, Organization Studies and Administrative Science Quarterly display the lowest share of heterogeneous samples.

Figure 4: Distribution of studies by composition of the sample and journals [27]

As can be seen from Figure 5, there is a clear tendency for homogeneous samples to prevail in studies with fewer than 20 interviews and for heterogeneous samples to prevail in studies with more than 30 interviews. In studies with more than 30 interviews, homogeneous samples are rare (3% of this group) and the majority of studies use heterogeneous samples (80%).

Figure 5: Distribution of studies by composition of sample and sample size (share in respective groups) [28]

The composition of a sample (whether the authors aim at homogeneity, semi-heterogeneity or heterogeneity) is related to sample size. In the case of a homogeneous sample, the mean number of interviews per study is the lowest (about 18); in the case of a semi-heterogeneous sample, the mean number of interviews is 35; and in the case of a heterogeneous sample, it is 59 (Table 4). This result provides strong evidence in support of the main claim of this article, namely a quantifying of the qualitative paradigm. When studies start to grow in sample size, they often do so at the expense of homogeneity across the respondents; for example, by conducting interviews in different countries and/or across all levels of the organizational hierarchy. The composition of the sample is what ultimately determines whether and how soon we reach saturation in information flow (ROMNEY, WELLER & BATCHELDER, 1986). To sum up this section, we have highlighted the need to pay more attention to the ways samples are composed and to whether authors discuss the fit between their homogeneous, semi-heterogeneous or heterogeneous sample and their respective research problem. [29]

3.4 Saturation point: How to justify it?

By far the most widespread instrument for limiting sample size in qualitative research has been the saturation point, a decision point where the researcher can stop interviewing because new participants deliver little or no new information (BOWEN, 2008; GLASER & STRAUSS, 1967). We give evidence of the practice where a vast share of authors justify their sample size not by claiming a good fit between their research problem and the composition of the sample, but by referring to the classics; for example, GUEST et al. (2006) suggest that a sample of 12 interviews can be sufficient. Numerical justification by preset benchmarks is rare, and less sought in qualitative research. Furthermore, it is the essence of qualitative research that samples are highly context-based; thus, universal numerical recommendations have weak explanatory power. [30]

Figure 6 provides detailed information about the distribution of studies that have claimed to have reached saturation point. Across the 845 qualitative studies that we investigated, only 10% even mention saturation point. However, there is no difference in average sample size between the studies that claim to have reached saturation point and those that make no such claim (see Table 4).

Figure 6: Distribution of studies by sample size and saturation point [31]

We would question the universal expectation of showing the saturation point for all qualitative research. Having originated in grounded theory, the concept of saturation point has perhaps been adopted too quickly and too uncritically across all qualitative research methods. Therefore, we support the claim by O'REILLY and PARKER: "as saturation becomes unquestioned and expected, it is necessary to take time to reflect on what this actually means for research practice" (2013, p.196). A vast share of studies that mention saturation point might do so merely because reviewers strongly expect it. We consider a potential solution to be to divide the heterogeneous sample into smaller sub-groups and to seek saturation for all potential sub-groups of the larger sample (KINDSIKO & BARUCH, 2019). To conclude this section, we provide evidence of the need to reconsider the ways researchers justify their samples and to give further attention to how "tools" like saturation point are being used by both authors and reviewers. [32]

3.5 Country (U.S. vs. Europe): How much do research traditions differ?

Of the 845 studies analyzed here, 57 report 100 or more interviews (7%). Broken down by journal, these studies are distributed as follows: Organization Studies (28%), Organization Science (17%), Journal of Management Studies (16%), Administrative Science Quarterly (12%), Human Relations (16%), and Human Resource Management (11%). One of the most common complaints among the qualitative researchers who have managed to get their work published in leading North American journals is the perception that "qualitative research has to be much better than its quantitative counterpart to be accepted" (PRATT, 2008, p.490). Furthermore, reviewers seem to evaluate qualitative research with inappropriate (positivistic quantitative) standards, and this also applies to the demands over sampling. Disproportionately high expectations might be one of the factors fueling the tendency of qualitative researchers to mimic the quantitative and seek to impress the reviewers with large samples. [33]

According to MARSHALL et al. (2013), U.S. researchers tend to present larger sample sizes than their colleagues from other countries. The current study comprises four U.S.-based journals and two U.K.-based journals; therefore, the results might be inclined toward the epistemological traditions of the United States. This could be one of the limitations of the current study, as most of the journals investigated are of U.S. origin. Then again, one could argue that U.S. journals are rather well served by qualitative studies authored by U.K. scholars, and vice versa. Therefore, we are not able to say how strongly our findings are influenced by the North American "tradition of large samples." To compare our findings with previous research: in 2011, BLUHM et al. reviewed 198 qualitative studies from both continents (three U.S. and two European management journals) and found that the preference toward larger sample sizes in North American journals has been interpreted as:

"a product of the logical positivism and quantitative rigour embedded in American academic institutions that train many of the field's scholars. Such a positivistic grounding is challenged and even dismissed by European scholars and institutions, which more readily embrace and teach a variety of qualitative approaches to studying organizational phenomena" (p.1867). [34]

Although our study comprises only two U.K. journals (Organization Studies and Journal of Management Studies), considering the number of articles we worked through within the mentioned journals (380 articles, which is 45% of the total 845), it still provides a relatively good comparison. [35]

According to our findings, there is no statistically significant difference in the mean number of interviews between U.S. and U.K. journals. Therefore, we dispute previous research that claims a sharp difference between U.K. and U.S. outlets (e.g., MARSHALL et al., 2013). A possible explanation might be that the editorial boards of the leading journals (both U.K. and U.S.) are dominated by U.S.-based board members, or are too international for any single epistemological tradition to dominate. For example, BURGESS and SHAW (2010) investigated 2,952 editorial board memberships from 36 journals across the Financial Times list and found that the editorial boards of leading journals are strongly influenced by men from high-status U.S. universities who specialize in organizational behavior and enterprise/small business fields. To gain an overview of the composition of the editorial boards of our sample journals, Table 6 covers the distribution of editorial board members by country of origin and journal (as of September 2018).

| Journal | Members per country: United States | Members per country: United Kingdom | Members per country: Other | No. of countries in editorial board |
|---|---|---|---|---|
| Organization Studies (UK) | 13% | 38% | 49% | 19 |
| Journal of Management Studies (UK) | 34% | 22% | 44% | 28 |
| Organization Science (US) | 76% | 6% | 18% | 15 |
| Administrative Science Quarterly (US) | 72% | 6% | 22% | 11 |
| Human Relations (US) | 27% | 36% | 37% | 17 |
| Human Resource Management (US) | 86% | 4% | 10% | 8 |

Table 6: Composition of editorial board across the six journals [36]

Interestingly, both U.K.-based journals show greater heterogeneity in terms of how many different countries are represented on their editorial boards. The editorial board of Organization Studies comprises members from 19 countries, and that of the Journal of Management Studies covers 28 countries. Figure 7 reveals how the editorial boards of U.K.-based journals are not dominated by U.K.-based board members, whereas U.S.-based journals have a rather clear dominance of U.S.-based board members (72–86%), the only exception being Human Relations, where only 27% of members are of U.S. origin.

Figure 7: Composition of editorial boards, differentiated by country of origin (U.S. vs U.K.-based journals) [37]

However, these results should be taken with caution, because the country of origin is usually given based on the academic institution where the member of the editorial board works. It does not necessarily coincide with the country where the researcher was trained and where he or she developed base assumptions on how to craft qualitative research. For example, a U.K.-based researcher might have gained his or her degrees and training in the United States and vice versa. [38]

Although there were no differences in mean sample size by country, quite substantial differences occur by journal, which is also reflected in Table 4. The highest average number of interviews per paper can be found in Administrative Science Quarterly (68), followed by Human Resource Management, Organization Science and Journal of Management Studies (52, 51 and 49 respectively). The smallest average number of interviews can be found in Organization Studies and Human Relations (43 and 41 respectively). [39]
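The comparison of mean sample sizes across journals rests on a one-way analysis of variance (footnote 4 notes that ANOVA is used when there are more than two groups). The following is a minimal sketch of how the F statistic for such a comparison could be computed. The function name `one_way_anova_f` and the interview counts are hypothetical and serve only as illustration; they are not the data or the code used in this study.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")

    group_means = [mean(g) for g in groups]
    grand_mean = mean([x for g in groups for x in g])

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical interview counts per study for three journals (NOT the article's data).
journal_a = [25, 40, 62, 80, 55]
journal_b = [18, 30, 45, 38, 50]
journal_c = [22, 35, 41, 60, 47]

f_stat, df_b, df_w = one_way_anova_f([journal_a, journal_b, journal_c])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A larger F value indicates that the journal means differ more than the spread within journals would suggest. Turning F into a p-value requires the F distribution, which the Python standard library does not provide; a statistics package such as SciPy (`scipy.stats.f_oneway`) could be used for that step.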

3.6 Demands from the grant provider: How large a sample is needed to get my research funded?

During the process of grant applications, researchers are forced to provide methodological plans that are as detailed as possible; this implies that they have to provide clear information about how many people will be interviewed, who will be interviewed, how and for how long. The influence of funding bodies and preset sample sizes in the application can be at odds with the very core of qualitative research. As reflected by GUEST et al. (2006, p.60):

"it is precisely a general, numerical guideline that is most needed, particularly in the applied research sector. Individuals designing research—lay and experts alike—need to know how many interviews they should budget for and write into their protocol, before they enter the field." [40]

Considering how costly (in terms of money, time, and brainpower) in-depth interviewing can be, funding can turn out to be an important restriction on sample size. For example, researchers might be forced to stop recruiting new interviewees when they run short of money—leaving the sample perhaps too small (GREEN & THOROGOOD, 2004). From another point of view, researchers might also be forced to conduct interviews with a sample size preset by the grant application. The latter is a tendency that has been critically highlighted by previous studies: "it is becoming an increasingly common requirement at the design stage, for planning, funding and ethical review, to state in advance the proposed size of the sample" (O'REILLY & PARKER, 2013, p.193). [41]

Based on our sample, the number of interviews is related to funding: there is a clear tendency that when funding is mentioned, the sample size is larger (the mean sample size of this group is about 50), while if funding is not mentioned, the mean sample size is around 42 (Figure 8).

Figure 8: 95% confidence interval for mean sample size based on the existence of funding [42]
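As a rough illustration of the kind of interval shown in Figure 8, a 95% confidence interval for a group mean can be sketched as below. The function name `mean_ci` and the interview counts are hypothetical and are not the study's data. The sketch also assumes a normal approximation (z of about 1.96), which is reasonable only for large groups; for small groups a t-based interval would be wider.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_ci(values, level=0.95):
    """Return (mean, lower, upper) using a normal approximation."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    m = mean(values)
    standard_error = stdev(values) / sqrt(n)      # sample SD divided by sqrt(n)
    z = NormalDist().inv_cdf(0.5 + level / 2)     # about 1.96 for level=0.95
    return m, m - z * standard_error, m + z * standard_error


# Hypothetical interview counts per study (NOT the article's data).
funding_mentioned = [30, 45, 60, 52, 38, 75, 41, 66]
funding_not_mentioned = [20, 35, 48, 27, 55, 40, 33, 62]

for label, group in (("funding mentioned", funding_mentioned),
                     ("funding not mentioned", funding_not_mentioned)):
    m, lower, upper = mean_ci(group)
    print(f"{label}: mean={m:.1f}, 95% CI=({lower:.1f}, {upper:.1f})")
```

If the two intervals overlap substantially, the difference between the group means should be interpreted with caution.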

Interpreting our results by juxtaposing the sample size and whether the research was funded or not, we can see how those studies that have gained funding tend to be the largest and most heterogeneous. More than 70% of the funded studies claim a sample size of 30 or more. In comparison, among the studies that did not gain funding, less than 60% claim a sample size of 30 or more. The fact that the majority of large-scale studies depend on external funding has resulted in hiding away the saturation point or any other rationalization about the sample size, as researchers have little control over the "pre-ordered" sample size. We believe this to be the most important and most problematic issue to be addressed in future studies: how and in what ways will project-based funding modify existing practices of conducting qualitative research? [43]

4. Discussion and Concluding Thoughts

The title of this article is an extract from a reflection by a top scientist who had managed to get a qualitative study published in a leading North American organizational journal:

"Qualitative papers are at a disadvantage from the beginning simply because they have to be so much better than the average quantitative paper. Because qualitative researchers suffer from an inferiority complex (being the poor and embarrassing cousin to the gentrified quantitative academics) they are reviewed with a much more critical and discerning eye" (PRATT, 2008, p.491). [44]

We have reflected upon the changing nature of research practice, highlighting the need to acknowledge how qualitative research is actually undertaken. We sought to bring forward how the conditions of the research environment seem to influence the way qualitative research is undertaken. A large share of literature has highlighted how qualitative researchers feel the need to defend themselves against the dominance of the quantitative paradigm (GEPHARDT, 2004; PRATT, 2009), often by mimicking that paradigm in order to get their qualitative study published. The most evident trace of this is the disproportionately and unjustifiably large sample size. We contribute by revealing factors that might have influenced the ways researchers conduct interview-based research. To our knowledge, the organizational research community lacks comprehensive and evidence-based reflection on what is happening with sample size in leading journals. That said, we sought to bring scholarly attention to the ways the research environment has influenced, and seems to continue to influence, how researchers design, conduct and frame (publish) qualitative research. [45]

We revealed how sample sizes in interview-based studies in leading journals are rather high and heterogeneous. We believe that 11 years is a rather short period for witnessing radical trends in research practice; in other words, to reveal a vast increase in sample size. Therefore, although we did not see remarkable growth in sample size, our study still revealed several problems with the overall practice of conducting and presenting qualitative interviews. [46]

First, qualitative research in leading journals tends to build on a rather large and heterogeneous sample. Furthermore, most studies do not reveal their sampling strategy, that is, the logic behind selecting specific informants. We believe such a practice threatens the basic epistemological, ontological and methodological assumptions that separate qualitative and quantitative approaches. If qualitative research continues mimicking the quantitative (large and heterogeneous samples), it may start to lose the most important advantage it is supposed to have: instead of testing preset hypotheses and knowledge, qualitative research should build on the ability to go in-depth into the research phenomena and provide highly novel insights. As several editorial notes from leading organizational journals admit (PRATT, 2008), the articles that seem to have had the largest impact on the profession tend to build on the qualitative approach. Yet, at the same time, qualitative studies are the most critically scrutinized by editors and reviewers, as well as by fellow qualitative researchers who have the power to evaluate whether a specific study is fit to be published in their outlets. [47]

Second, studies that gained funding revealed the most heterogeneous and largest sample sizes. A possible reason for this might be the perception that, in order to get funding in the first place, a small number of interviews is seen as insufficient by both the researchers and the funding body. These studies might end up with heterogeneous samples because funding institutions would prefer a more comprehensive overview of the research problem, one that includes as many stakeholders or fields as possible. Acknowledging the possible effect of grant money in raising the sample size in qualitative research, we claim that organizational scholars should therefore critically modify and develop methodological standpoints to assure a better fit with the new research environment. For example, we revealed how rarely researchers justify the composition or the size of their sample. Therefore, we claim that even when research grants tend to expect large studies, scholars should pay more attention to the design of their sampling. For example, although the application of saturation point as a tool for providing evidence of "the goodness" of the sample is not seen as universally necessary in the case of all qualitative research (O'REILLY & PARKER, 2013), when it is used, it should be fit for purpose and convince the reader. We managed to show how only about 10% of the 855 studies mentioned reaching saturation point, which might not be a problem in itself, but when highly heterogeneous samples with 100 or more respondents (e.g., people from various occupations and countries) claim to have reached saturation point, then we might have a problem. [48]

Like any study, ours has a few limitations. First, this study is constrained by the choice of journals we investigated; future studies could expand the sample. All the journals investigated in this study represent top scholarly outlets, which, on the one hand, is a good thing, as it reveals trends in sample size among top qualitative studies (which are often taken as a benchmark for others); yet, on the other hand, top journals are largely of U.S. origin, which could favor the epistemological, ontological and methodological preferences set by the respective country and scholarly community. In this case we leave the question open and debatable: as the academic community is highly international, the country of origin of the journal might not be as strict a limitation as once considered (e.g., by BLUHM et al., 2011). Authors might have been trained (gained their degrees) in one country, work in several countries and co-author with numerous individuals across the world. In that respect, what we might witness is a mix of epistemological, ontological and methodological assumptions. Second, the current study is based on English-language journals. Future studies could investigate similar aspects across journals where the language is not English. We chose English-language journals because English tends to dominate among scholarly outlets. [49]

This work was supported by the Estonian Ministry of Education and Research (grant number IUT20-49).

1) https://journals.sagepub.com/home/oss [Accessed: May 29, 2019].

2) "The SCImago Journal & Country Rank is a publicly available portal that includes the journals and country scientific indicators developed from the information contained in the Scopus® database" (https://www.scimagojr.com/aboutus.php [Accessed: May 17, 2019]).

3) "The h-index is an index that attempts to measure both the productivity and impact of the published work of a scientist or scholar" (https://blog.scopus.com/posts/the-scopus-h-index-what-s-it-all-about-part-i [Accessed: May 17, 2019]).

4) In the case of two groups, the t-test is used. If there are more than two groups, ANOVA is used.

Aguinis, Herman; Pierce, Charles A.; Bosco, Frank A.; Dalton, Dan R. & Dalton, Catherine M. (2011). Debunking myths and urban legends about meta-analysis. Organizational Research Methods, 14(2), 306-331.

Audebrand, Luc K. & Barros, Marcos (2017). All equal in death? Fighting inequality in the contemporary funeral industry. Organization Studies, 39(9), 1-21.

Bandyopadhyay, Amit K. (2001). Authorship pattern in different disciplines. Annals of Library and Information Studies, 48(4), 139-147.

Bluhm, Dustin J.; Harman, Wendy; Lee, Thomas W. & Mitchell, Terence R. (2011). Qualitative research in management: A decade of progress. Journal of Management Studies, 48(8), 1866-1891.

Boddy, Clive Roland (2016). Sample size for qualitative research. Qualitative Market Research: An International Journal, 19(4), 426-432.

Bowen, Glenn A. (2008). Naturalistic inquiry and the saturation concept: A research note. Qualitative Research, 8(1), 137-152.

Burgess, Thomas F. & Shaw, Nicola E. (2010). Editorial board membership of management and business journals: A social network analysis study of the Financial Times 40. British Journal of Management, 21(3), 627-648.

Burrell, Gibson & Morgan, Gareth (1979). Sociological paradigms and organisational analysis. London: Heinemann.

Chrobot-Mason, Donna; Ruderman, Marian N.; Weber, Todd J. & Ernst, Chris (2009). The challenge of leading on unstable ground: Triggers that activate social identity faultlines. Human Relations, 62(11), 1763-1794.

Cooper, Harris M. (1982). Scientific guidelines for conducting integrative research reviews. Review of Educational Research, 52(2), 291-302.

Cooper, Harris M. (2007). Evaluating and interpreting research syntheses in adult learning and literacy. Boston, MA: National College Transition Network, New England Literacy Resource Center/World Education.

Farndale, Elaine; Paauwe, Jaap; Morris, Shad S.; Stahl, Günter K.; Stiles, Philip; Trevor, Jonathan & Wright, Patrick M. (2010). Context‐bound configurations of corporate HR functions in multinational corporations. Human Resource Management, 49(1), 45-66.

Fusch, Patricia I. & Ness, Lawrence R. (2015). Are we there yet? Data saturation in qualitative research. The Qualitative Report, 20(9), 1408-1416, http://www.nova.edu/ssss/QR/QR20/9/fusch1.pdf [Accessed: August 25, 2018].

Gephardt, Robert P. (2004). Qualitative research and the Academy of Management Journal. Academy of Management Journal, 47(4), 454-462.

Gioia, Dennis A.; Corley, Kevin G. & Hamilton, Aimee L. (2012). Seeking qualitative rigor in inductive research: Notes on the Gioia methodology. Organizational Research Methods, 16(1), 15-31.

Glaser, Barney G. & Strauss, Anselm L. (1967). The discovery of grounded theory: Strategies for qualitative research. New York, NY: Aldine de Gruyter.

Green, Judith & Thorogood, Nicki (2004). Qualitative methods for health research. London: Sage.

Guest, Greg; Bunce, Arwen & Johnson, Laura (2006). How many interviews are enough?: An experiment with data saturation and variability. Field Methods, 18(1), 59-82.

Israel, Glenn D. (1992). Determining sample size. Fact Sheet PEOD-6, University of Florida, Gainesville, FL, https://www.gjimt.ac.in/wp-content/uploads/2017/10/2_Glenn-D.-Israel_Determining-Sample-Size.pdf [Accessed: August 25, 2018].

Jonsen, Karsten; Fendt, Jacqueline & Point, Sébastien (2018). Convincing qualitative research: What constitutes persuasive writing? Organizational Research Methods, 21(1), 30-67.

Kindsiko, Eneli & Baruch, Yehuda (2019). Careers of PhD graduates: The role of chance events and how to manage them. Journal of Vocational Behavior, 112, 122-140.

Kjærgaard, Annemette; Morsing, Mette & Ravasi, Davide (2011). Mediating identity: A study of media influence on organizational identity construction in a celebrity firm. Journal of Management Studies, 48(3), 514-543.

Liberman, Sofia & Wolf, Kurt Bernardo (1998). Bonding number in scientific disciplines. Social Networks, 20(3), 239-246.

Marshall, Bryan; Cardon, Peter; Poddar, Amit & Fontenot, Renee (2013). Does sample size matter in qualitative research?: A review of qualitative interviews in IS research. The Journal of Computer Information Systems, 54(1), 11-22.

Mason, Mark (2010). Sample size and saturation in PhD studies using qualitative interviews. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 11(3), Art. 8, http://dx.doi.org/10.17169/fqs-11.3.1428 [Accessed: August 23, 2018].

Neeley, Tsedal B. (2013). Language matters: Status loss and achieved status distinctions in global organizations. Organization Science, 24(2), 476-497.

O'Reilly, Michelle & Parker, Nicola (2013). "Unsatisfactory saturation": A critical exploration of the notion of saturated sample sizes in qualitative research. Qualitative Research, 13(2), 190-197.

Ortmans, Laurent (2018). FT Global MBA ranking 2018: Methodology and key. Financial Times, January 28, 2018, https://www.ft.com/content/1bf3c442-0064-11e8-9650-9c0ad2d7c5b5 [Accessed: September 10, 2018].

Pratt, Michael G. (2008). Fitting oval pegs into round holes: Tensions in evaluating and publishing qualitative research in top-tier North American journals. Organizational Research Methods, 11(3), 481-509.

Pratt, Michael G. (2009). From the editors: For the lack of a boilerplate: Tips on writing up (and reviewing) qualitative research. Academy of Management Journal, 52(5), 856-862.

Robinson, Oliver C. (2014). Sampling in interview-based qualitative research: A theoretical and practical guide. Qualitative Research in Psychology, 11(1), 25-41.

Romney, A. Kimball; Weller, Susan C. & Batchelder, William H. (1986). Culture as consensus: A theory of culture and informant accuracy. American Anthropologist, 88(2), 313-338.

Sandelowski, Margarete (1995). Sample size in qualitative research. Research in Nursing & Health, 18(2), 179-183.

Sandelowski, Margarete (1998). Writing a good read: Strategies for re‐presenting qualitative data. Research in Nursing & Health, 21(4), 375-382.

Schwartz, Gregory & McCann, Leo (2007). Overlapping effects: Path dependence and path generation in management and organization in Russia. Human Relations, 60(10), 1525-1549.

Strang, David & Siler, Kyle (2017). From "just the facts" to "more theory and methods, please": The evolution of the research article in Administrative Science Quarterly, 1956-2008. Social Studies of Science, 47(4), 528-555.

Swart, Juani & Kinnie, Nicholas (2014). Reconsidering boundaries: Human resource management in a networked world. Human Resource Management, 53(2), 291-310.

Trotter, Robert T. (2012). Qualitative research sample design and sample size: Resolving and unresolved issues and inferential imperatives. Preventive Medicine, 55(5), 398-400.

Tuckett, Anthony (2004). Part I: Qualitative research sampling—the very real complexities. Nurse Researcher, 12(1), 47-61.

Van Maanen, John (1998). Different strokes: Qualitative research in the Administrative Science Quarterly from 1956 to 1996. In John Van Maanen (Ed.), Qualitative studies in organizations (pp.ix-xxxii). Thousand Oaks, CA: Sage.

Eneli KINDSIKO is an associate professor of qualitative research at the School of Economics and Business Administration, University of Tartu in Estonia. She holds a double master's degree in philosophy (2010) and economics (2013) and a doctoral degree in economics (2014) from the University of Tartu. Her main research interests are within the field of qualitative research, management, academic career, and career patterns of PhDs.

Contact:

Eneli Kindsiko

School of Economics and Business Administration

University of Tartu

J. Liivi 4

50409, Tartu, Estonia

E-mail: eneli.kindsiko@ut.ee

URL: https://majandus.ut.ee/en/about-school/school-economics-and-business-administration

Helen POLTIMÄE is a lecturer in economic modeling at the School of Economics and Business Administration, University of Tartu in Estonia. She holds a master's degree (cum laude) in economics (2008) and a doctoral degree (2014) from the University of Tartu. In her PhD thesis, she modeled the different effects of Estonian environmental taxes. She has participated in several projects related to environmental economics and supervised students in applying quantitative methodology. She teaches courses related to quantitative research methods, statistics and econometrics.

Contact:

Helen Poltimäe

School of Economics and Business Administration

University of Tartu

J. Liivi 4

50409, Tartu, Estonia

E-mail: helen.poltimae@ut.ee

URL: https://majandus.ut.ee/en/about-school/school-economics-and-business-administration

Kindsiko, Eneli & Poltimäe, Helen (2019). The Poor and Embarrassing Cousin to the Gentrified Quantitative Academics: What Determines the Sample Size in Qualitative Interview-Based Organization Studies? [49 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 20(3), Art. 1, http://dx.doi.org/10.17169/fqs-20.3.3200.

Revised: March 2024