Volume 3, No. 2, Art. 25 – May 2002

Reporting the Results of Computer-assisted Analysis of Qualitative Research Data

Robert Thompson

Abstract: This paper focuses on the interrelationship between the mechanical and conceptual aspects of qualitative data analysis. The first part of the paper outlines the support role a computer program, HyperQual2, plays in the mechanical analysis process. The second part of the paper argues that the most important aspect of analysis in any research endeavour is conceptual in nature. It provides a "snapshot" of the in-depth analysis of one interview protocol. The metaphor "snapshot" is apt because the intention is to capture the "essence" of the analysis process. Such a focused approach is one way of reporting and making transparent the qualitative research process. It can be used as a framework so that readers of the research are better able to judge the fidelity of the results of the final analysis.

Key words: CAQDAS, HyperQual, qualitative research, analysis of qualitative data, reporting data analysis, beginning teachers, teacher appraisal

Table of Contents

1. Introduction

2. What Computers Can and Cannot Do in Qualitative Data Analysis

3. The Mechanical Analysis of Data

4. The Conceptual Analysis of the Data

5. Using HyperQual2 in Analysing Data

6. Making the Analysis Process Transparent

7. Conclusion

1. Introduction

A dilemma for qualitative researchers is how to cope with the quantity of text generated by a research endeavour. Not only do the mountains of text have to be managed in a systematic way, but decisions as to why particular segments of the text are chosen to represent patterns or categories also need to be justified rigorously. Many researchers now use computers to assist in the analysis of data. This creates a further problem: how the results of the analysis should be reported so that readers can feel confident that the analysis process has been carried out professionally and that the researcher has taken adequate measures to guarantee its integrity. [1]

A weakness of many qualitative research studies is the failure by researchers to illuminate thoroughly how they derive the outcomes of analysis (HASSELGREN 1993). Some commentators have argued for a more serious and rigorous reporting of techniques when analysing qualitative data using a computer program (BAZELEY & O'ROURKE 1996). However, there is a dearth of explicit examples in the literature that are detailed and transparent enough to provide models of how to "write-up" the computer analysis of qualitative research data. This paper attempts to address this gap and offers one such model. [2]

2. What Computers Can and Cannot Do in Qualitative Data Analysis

Qualitative research generates a large amount of raw data, usually in the form of text. Researchers using manual methods to organise and manage this amount of data face endless hours of sorting, highlighting, cutting and pasting. No doubt, some believe the investment of a great deal of time in this aspect of textual data analysis is part and parcel of the qualitative research process—a form of "penance" that qualitative researchers have to pay to achieve "richer" outcomes. There is a tendency, too, for qualitative researchers to favour a "hands-on" approach when analysing their data. Physically handling the data, by marking text or cutting and pasting the transcripts of interviews, seems to give the process a more human touch by connecting the researcher to the researched (THOMPSON 1995). This differentiates qualitative from quantitative researchers and is a reaction by the former against the "science, reason and evidence" of a quantitative approach where "neutral" researchers preserve a "distance" between themselves and those being researched (DENZIN & LINCOLN 1994). [3]

So ingrained is this view that even though computer programs represent a genuine advance over manual methods of data analysis and have been designed to help speed up the process, some researchers continue to resist their use. They continue to perceive computers as a "devil-tool of positivism and scientism" and as interlopers into the qualitative realm of research (LEE & FIELDING 1996, paragraph 4.5). According to this view, computers seem to fit more easily in the quantitative domain since their very existence is based on numbers (THOMPSON 1994). Interestingly, there is a contrary position taken by other commentators who believe that to use a computer program for data analysis lends a scientific gloss to qualitative research (COFFEY, HOLBROOK & ATKINSON 1996). The logic of this argument is based on the premise that qualitative researchers need to compete with the "rigour" of quantitative data analysis and replace the obligatory statistical analysis with something equally complicated and "scientific-like". [4]

In considering the question of using a computer program in the analysis of qualitative research data, it is necessary from the outset to understand fully what computers can and cannot do in the research process. Any computer can be programmed to do the mechanical part of analysis, but no computer can do the conceptual part. Computer Assisted Qualitative Data Analysis Software (CAQDAS) is designed to help in the analysis of data by storing, managing and presenting data in written form (LEE & FIELDING 1991, 1996; RICHARDS & RICHARDS 1994; PADILLA 1994a; COFFEY, HOLBROOK & ATKINSON 1996). [5]

COFFEY, HOLBROOK and ATKINSON (1996) raise the following four important issues in this respect:

Most computer-based approaches depend on particular segments of text being marked using code words attached to discrete stretches of data thus allowing the researcher to retrieve all instances in the data that share a code.

The underlying logic of coding and searching for coded segments in this way is little different from manual techniques.

There is no great conceptual advance over the indexing of typed notes and transcripts, or of marking them physically with code words or coloured inks.

In practice, the speed and comprehensiveness of searches is an undoubted benefit of using a computer to assist in analysis. [6]
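The code-and-retrieve logic described in these four points can be sketched in a few lines of Python. This is a minimal illustration only and is not how HyperQual2 or any other CAQDAS package is implemented; the class `CodedSegment`, the function `retrieve`, the interview identifiers and the placeholder segment texts are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedSegment:
    interview: str  # hypothetical identifier for the source transcript
    code: str       # code word attached by the researcher
    text: str       # the marked stretch of data


def retrieve(segments, code):
    """Return every segment that shares the given code word."""
    return [s for s in segments if s.code == code]


# Placeholder data, invented for illustration (not taken from the study).
segments = [
    CodedSegment("interview_01", "knowledge", "placeholder segment A"),
    CodedSegment("interview_01", "personality", "placeholder segment B"),
    CodedSegment("interview_02", "knowledge", "placeholder segment C"),
]

# Retrieve all instances in the data that share one code.
knowledge_hits = retrieve(segments, "knowledge")
for hit in knowledge_hits:
    print(hit.interview, "|", hit.text)
```

The sketch makes the authors' point concrete: the retrieval step is a simple filter, equivalent in logic to pulling all index cards marked with the same coloured ink. Deciding which code word belongs on which segment is not something the program contributes.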

While computer software offers a number of ways of organising and managing qualitative research data, COFFEY, HOLBROOK and ATKINSON (1996) are resolute in maintaining that coding data using computer programs is not analysis. They note that such a narrow approach results from accepting such procedures uncritically and is premised on an elementary set of assumptions for managing qualitative data. In addition, they warn "that many of the analytic strategies implied by code-and-retrieve procedures are tied to the specific inputting requirements of computer software strategies". Consequently, "there is an increasing danger of seeing coding data segments as an analytic strategy in its own right, and of seeing such an approach as the analytic strategy of choice" (COFFEY, HOLBROOK & ATKINSON 1996, paragraph 7.7). [7]

BAZELEY and O'ROURKE (1996, p.17) argue for a more serious and rigorous reporting of techniques for the analysis of qualitative data using a computer program. They criticise researchers for using "brush off" statements that say the data will be analysed using a specific computer program. They contend that this is done as if the very mention of the program's name conveys that the researcher knows how to use it, and as if the program can be fed data, following which it will more or less "spit out" the results. Accordingly, they maintain that the researcher needs to tell the computer what to do and not vice versa. BAZELEY and O'ROURKE (1996) concede researchers who use qualitative data may find it more difficult to describe what is done when analysing huge volumes of interview text. However, they believe there remains a need to convince readers "that it involves more than intuitive reading and becoming submerged in lovely, gooey 'in depth' data" (p.17). In other words, the researcher needs to write not only how the data were collected, but also what he or she did with them. [8]

Two assumptions underpin the computer analysis of qualitative research data. Firstly, the computer is used because it frees the mind of the researcher as much as possible from the mechanics of qualitative data analysis so the focus can be placed on the more important conceptual aspect of data analysis. This takes into account that while the mind is well suited to making decisions about pattern recognition and the development of categories or themes, it is easily confused by large amounts of data and becomes bored with having to do monotonous tasks repetitively (PADILLA 1994a). [9]

Secondly, the computer plays no part in the type and quality of data acquired. No matter how powerful the computer program is, or how much skill the researcher has in using the program, if the data are of poor quality, then this will be reflected in the research outcomes. More importantly, the decisions made to select parts of interview text that illustrate the categories or themes in a study remain the responsibility of the researcher not the computer. The strength of the analysis depends to a large extent on the well-established strategies used in analysing qualitative research data. [10]

3. The Mechanical Analysis of Data

Many computer programs used in qualitative data analysis are expensive and complicated to operate, requiring researchers to undertake extensive training and practice in order to become proficient users. It is not uncommon for researchers to need several days of in-service training followed by many weeks of practice. The complex nature of these programs reflects a view of qualitative data analysis as an equally complicated endeavour, and some researchers are drawn to them because of this inherent "complicatedness". [11]

There is a perception amongst some researchers that if a program is expensive and sufficiently complex, then it will be able to carry out much of the analysis process for the researcher. In other words, the researcher feeds in the data and the computer spits out the results. In this scenario, there is a danger that the program can drive the analysis rather than vice versa. What these researchers fail to understand is that this is a superficial and misguided assumption. There are no easy short cuts in undertaking a quality-controlled and rigorous analysis of research data. Trying to cut corners is more likely to result in research that lacks credibility and is difficult to defend. This is particularly so with qualitative data because the process of analysis involves a dynamic relationship between researcher and data. [12]

HyperQual2 (PADILLA 1994b) is particularly useful in the analysis of data obtained using qualitative research methods because it is quite a simple program to understand and to use (THOMPSON 1994, 1995, 1999). A few hours of practice are all that is needed to become a proficient user. Its simplicity is its strength. The program uses the features of the Macintosh program HyperCard and is based on creating sets of data cards arranged in stacks. [13]

HyperQual2 allows the researcher to enter data, to code selected segments of data and to output the data in report form. The process is iterative and stacks are progressively refined. From the original stack containing the interview transcripts, "chunks" of data are isolated by the researcher and are deposited to new stacks. The data segments in the new stacks are analysed further and the process continues until the researcher is satisfied with the final version. It is at this stage that the segments of data can be printed in report-form according to the conceptions that they represent. The power of HyperQual2 is that navigation within a stack or among stacks is easy. Researchers can move to any arbitrary card quickly and efficiently in a variety of ways. This ease of movement allows the researcher to maintain the context of the data segments by tracing them back to the original interview transcript. HyperQual2's particular strength is that the researcher always stays in close contact with the original data. [14]

In the following section, the conceptual analysis of the data is treated as a separate issue. This is done for three reasons. Firstly, it adds clarity to the discussion. Secondly, it supports the concerns of COFFEY, HOLBROOK and ATKINSON (1996) that coding data with computer programs is not analysis. Thirdly, it emphasises the point that the most important part of analysis in any research endeavour is conceptual in nature. [15]

4. The Conceptual Analysis of the Data

In qualitative research, the most difficult task for the researcher is the conceptual part of data analysis: identifying meaningful segments of data, organising these segments into categories, and finally describing the relationship among these categories. In the previous section, it was argued that the massive volume of qualitative data obtained from intensive interviews needs to be broken down into manageable chunks in order that the human mind can deal with them. TESCH (1987, p.1) maintains that this process does not "merely consist of a random division into smaller units", it involves "skilled perception and artful transformation by the researcher". She isolates several elements that capture the spirit of a successful qualitative data reduction process and in so doing, provides a framework for analysis:

the researcher captures what is most important, most prevalent, most essential in the thousands of words dealing with the object of investigation;

the data become distilled to their essentials rather than simply being diminished in volume;

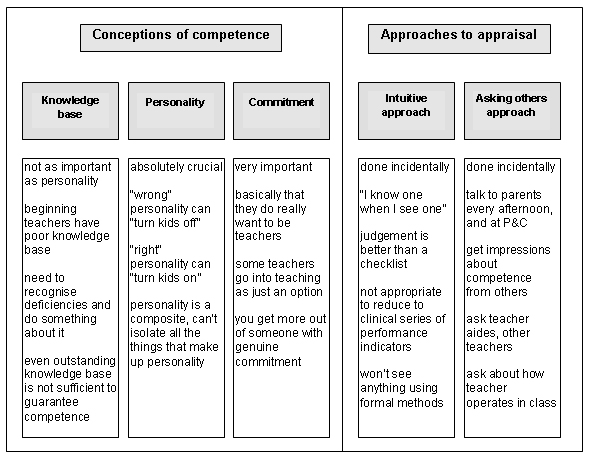

the process is methodical, systematic and goal-oriented; and

the research outcomes lead to a result that others can accept as representing the data (TESCH 1987, p.1). [16]

A weakness of many qualitative research studies, according to HASSELGREN (1993), is the failure by researchers to illuminate thoroughly how they derive the outcomes of analysis. He argues that in most cases researchers "quite simply establish that they transcribe their interviews, read and re-read these thoroughly and then state that in this process categories of description, and so also the conceptions, simply 'emerge'" (p.71). [17]

He believes researchers need to account in detail for how they proceed with the gathering and analysis of the data, state any considerations behind the interview questions, and reflect on the ascribed meaning of the transcribed text. By doing this, the researcher takes into account how the categories take form in the making of interview procedures and interview transcripts. This point is supported by FRANCIS (1993), who argues that making procedural and decision criteria explicit gives readers of the research greater opportunity to judge on what grounds and in what sense they can accept the final analysis as satisfactory. [18]

5. Using HyperQual2 in Analysing Data

The focus of this section is an account of the important role a computer program plays in the analysis of qualitative data. HyperQual2 is a Macintosh computer program (PADILLA 1994b) and was trialled and used extensively in both stages of a pilot study (BALLANTYNE, THOMPSON & TAYLOR 1996, 1998) and a doctoral study (THOMPSON 1999). At the heart of HyperQual2 is the ability to create stacks of cards on which the researcher can store data. A newer, upgraded version of the program, HyperQual3, has recently been developed (PADILLA 1999; see note 1). It must be emphasised that the role of the computer is one of support. Its uses are confined to organising and managing qualitative research data, speeding up the process of manipulating data and, most importantly, giving the researcher extra time to undertake the conceptual analysis of the data. [19]

The mechanical and conceptual aspects of the analysis of data are inter-woven and happen concurrently. To separate the two completely is artificial and underplays the extent to which they are inextricably intertwined. The analysis of data using HyperQual2 illustrates succinctly the interconnectedness of the mechanical and conceptual aspects involved and at the same time gives an overview of the analysis process. [20]

Each data card in a stack has the capacity to store three thousand words which is approximately the equivalent of the text of a forty-five minute interview. Interviews of a longer duration are stored on two or more data cards. Having entered the original interview transcripts onto the selected number of data cards (mechanical), an attempt is made to gain a sense of meaningful patterns arising from the discourse (conceptual). Segments of data in this research study are coded (conceptual/mechanical) and isolated (mechanical) in the following way. The first step of analysis is to read carefully and re-read the transcripts looking for patterns, categories, and ideas (conceptual). This initial scan is fairly coarse, but it gives the researcher a chance to be immersed in the text, to get a "feel" for what the data as a totality is "saying" (conceptual). [21]
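The storage step can be illustrated with a short Python sketch. Only the three-thousand-word card capacity comes from the description above; the function name `split_into_cards` and the dummy transcript are assumptions made for illustration, and the sketch does not reproduce how HyperQual2 itself stores text.

```python
CARD_CAPACITY_WORDS = 3000  # capacity stated in the text; all else is illustrative


def split_into_cards(transcript: str, capacity: int = CARD_CAPACITY_WORDS) -> list[str]:
    """Split a transcript into consecutive cards of at most `capacity` words.

    Words are separated on whitespace, so the original line breaks are not
    preserved on the cards in this simplified version.
    """
    words = transcript.split()
    return [
        " ".join(words[i:i + capacity])
        for i in range(0, len(words), capacity)
    ]


# A dummy 7000-word transcript standing in for a longer interview.
long_transcript = " ".join(["word"] * 7000)
cards = split_into_cards(long_transcript)
print(len(cards), [len(card.split()) for card in cards])
```

A transcript that exceeds the capacity of one card simply spills onto a second or third card, which is the purely mechanical part of this step. Reading the cards for patterns remains the researcher's task.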

At this point, segments of data relevant to the research inquiry are isolated (conceptual/mechanical), coded on the basis of perceived similarity or difference (conceptual) and transferred to a second stack (mechanical). Data in the second stack are "filtered" by code to a series of new stacks representing each category (mechanical). In other words, quotes are sorted according to similarity of meaning, one quote per card, into "electronic piles" of cards. Each "pile" of cards makes up an individual stack representing a particular category. A series of stacks represents all identified categories in the study. In some borderline cases, segments are re-coded (conceptual/mechanical) before being moved to the appropriate stack (mechanical). [22]
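The filtering of the second stack into category stacks can be sketched as follows. This is a hedged illustration rather than a description of HyperQual2's internals: the dictionary layout of a card, the functions `filter_by_code` and `recode`, and the placeholder quotes are all assumptions introduced here.

```python
from collections import defaultdict


def filter_by_code(second_stack):
    """Sort cards into one stack per code, keeping one quote per card (mechanical)."""
    stacks = defaultdict(list)
    for card in second_stack:
        stacks[card["code"]].append(card)
    return dict(stacks)


def recode(card, new_code):
    """Return a copy of a card carrying a new code.

    The decision to re-code is conceptual; applying it is mechanical.
    """
    return {**card, "code": new_code}


# Placeholder cards, invented for illustration (not taken from the study).
second_stack = [
    {"code": "knowledge", "quote": "placeholder quote 1"},
    {"code": "personality", "quote": "placeholder quote 2"},
    {"code": "knowledge", "quote": "placeholder quote 3"},
]

# A borderline segment is re-coded before it is moved to its stack.
second_stack[2] = recode(second_stack[2], "commitment")

category_stacks = filter_by_code(second_stack)
for code, stack in sorted(category_stacks.items()):
    print(code, len(stack))
```

Each key of `category_stacks` corresponds to one "electronic pile" of cards. Re-running the filter after every round of re-coding mirrors the progressive refinement of stacks described above.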

The new stacks are further filtered and added to until the final version is reached (conceptual/mechanical). With each iteration, the process of scanning becomes increasingly fine until eventually the criterion attributes that define each group are made explicit and the decision to select a particular segment of text to illustrate a particular "category" becomes more accurate (MARTON, CARLSSON & HALASZ 1992). HyperQual2 allows the researcher to move easily from particular segments in the new stacks back to the original data. The final stage of the process is the "down loading" of the data segments into a print format (mechanical). The stages in the analysis of data are represented in diagrammatic form in Figure 1.

Figure 1: Stages in the analysis of qualitative research data using HyperQual2 [23]

The coding (tagging) of data allows for close contact with the original interview data. The original text is never disturbed and always stays intact. The new stacks contain "chunks" of text illustrating a range of categories. Because each piece of text has a given "tag" or code and this accompanies the text to the stack, it is easy to "filter" the segments according to these codes and deposit them into a new stack. The latter contains all segments of text relating to a particular category. [24]
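One way to picture how a tag can accompany a segment while the original text stays intact is sketched below. This is an assumption-laden illustration and not HyperQual2's actual data model: the `Tag` class, the character offsets, the helper functions `segment_text` and `context`, and the placeholder transcript cards are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tag:
    code: str   # code word assigned by the researcher
    card: int   # index of the card in the original stack
    start: int  # character offset where the segment begins
    end: int    # character offset where the segment ends (exclusive)


# The original stack is a tuple of strings, so it cannot be altered in place.
original_stack = (
    "first placeholder transcript card",
    "second placeholder transcript card",
)


def segment_text(tag):
    """Copy the tagged segment out of the original card without changing it."""
    return original_stack[tag.card][tag.start:tag.end]


def context(tag, margin=10):
    """Trace a segment back to its source and return it with surrounding text."""
    card = original_stack[tag.card]
    return card[max(0, tag.start - margin):tag.end + margin]


tag = Tag(code="example_code", card=1, start=7, end=18)
print(segment_text(tag))
print(context(tag))
```

Because the tag records where the segment came from, the researcher can move from a quote in a category stack back to its place in the transcript, which is the close contact with the original data emphasised above.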

From the previous description, it might seem that the analysis process is linear in nature. However, this is far from the truth. The process is recursive and cyclical and "throughout the process an unstable system of categories and related data slowly settles into a stable system of categories" (MARTON 1988, p.189). HyperQual2 is designed in such a way that it enhances this cyclical approach. In the analysis process the researcher often needs to move between segments of text within a stack or between quotes in different stacks. There are also times that the researcher may need to return to the original interview. [25]

During this combination of processes of sorting and analysing, the researcher is looking for characteristics that clarify the categories or themes under investigation. The process is iterative and can be likened to a continuum that reflects an initial "coarse" analysis that becomes steadily "finer" over time (FLEET & CAMBOURNE 1989; PADILLA 1994b). Without the aid of a computer, a researcher has to move manually within print-based material. Such methods can become laborious, time consuming and inefficient. [26]

Researchers undertaking the analysis of qualitative research data using a computer need to describe what the program does in the analysis process. They need to be transparent about the processes they use and to link the analysis to their particular research endeavour. In other words, it is important that the analysis process is contextualised. [27]

6. Making the Analysis Process Transparent

The intention of this section is to look closely at the conceptual analysis of data used in this study (THOMPSON 1999). The focus of the study was the phenomenon of beginning teacher competence from the viewpoint of the school principal. The research investigated primary school principals' differing conceptions of competence and determined how they impact on the processes used to appraise beginning teachers. Responses from the twenty-seven principals who participated in this study were pooled and analysed. The process of analysis involved careful reading and re-reading of transcripts with the purpose of generating categories of description which described the variation in principals' conceptions of the phenomenon and the approaches that principals used in appraising beginning teacher competence. [28]

The analysis process described in this study was modelled on SVENSSON and THEMAN's (1983) seminal research on conceptions of political power. In their report, they dealt with one interview protocol only, but they focused on an in-depth examination of the processes they used to describe as faithfully as possible the interviewee's conceptions of political power. Likewise, the present section confines itself to one interview in an attempt to highlight the processes of analysis used in this study and to illustrate how the categories describing beginning teacher competence and the approaches to appraisal were formed and refined. Focusing on one interview protocol gives valuable insight into how the research in general and analysis in particular were undertaken. [29]

In the following sections, five interview excerpts have been chosen from one interview to help illustrate how the conceptual analysis of data was undertaken in this study. Each excerpt is taken verbatim and within each, numbered segments are framed for easy reference. For example, the following reference: "Fig. 2: Int. excerpt 1: 3" points the reader to Figure 2, Interview excerpt 1 and relates to the third framed segment that begins with the words: "he gets a lot more out of the kids ...". The reason for including a natural sequence from the interview is to convey the direct context of the chosen segments. [30]

The initial scan of the data shows that descriptions of particular conceptions and appraisal approaches are not dealt with separately and articulated precisely by principals. On the contrary, they are interconnected and often partly expressed. SVENSSON and THEMAN (1983, p.10) capture this idea succinctly:

"In a discussion of an unstructured interview many statements are only partly expressed or contain hidden references to something having been mentioned earlier. Every reply is a reply to a question and almost every question emerges from the previous reply. Everything is connected to something else. Still, in reading the protocol one finds that there are different parts dealing with different questions and that some statements seem to address the category involved more directly than others. This identification of parts and more significant statements is the first step in the analysis and it is deepened and revised through further analysis and interpretation." [31]

In the first excerpt (see Fig. 2, Int. excerpt 1: 1), the principal responds to the opening entry question by emphasising the importance of a knowledge-base as an indication of competence. This can be traced to Fig. 3, Int. excerpt 2: 2 and 2: 3, where the principal expresses a deficit view of beginning teachers' knowledge and describes them as "semi-literate" with a weak knowledge-base. From this starting point, a "germ" of a conception of competence (as knowledge) is gleaned from the data. The next steps for the researcher are to scan the rest of the transcript and also other transcripts with the intention of seeking additional references to confirm (or deny) that a knowledge-base is seen by principals as important to beginning teacher competence. The scan at this stage is superficial with the researcher getting a "feel" for the data.

Figure 2: Interview excerpts 1 [32]

On closer scrutiny of the initial response of the principal, there are also references to "commitment to teaching" and "personality" (see Fig. 2, Int. excerpt 1: 2). The former is followed up by the researcher later in the interview (see Fig. 3, Int. excerpt 2: 1). The latter, concerning personality ("I suppose the personality thing, also"), seems to be a passing comment, almost an afterthought. The interviewer probes for more information on this point. SVENSSON and THEMAN (1983, p.8) stress the necessity "of keeping in contact with such reactions and to elucidate them rather than to neglect them as is often done in standard interviews".

Figure 3: Interview excerpts 2 [33]

A question about whether personality was seen to be more important than a beginning teacher's knowledge-base challenged the principal to compare the two and in so doing helped reveal that the principal, in fact, thinks personality is more important than a knowledge-base: with the "right" kind of personality, teachers are able to "turn the kids on" (see Fig. 2, Int. excerpt 1: 3). The way the interview unfolded in this short sequence is similar to an incident in SVENSSON and THEMAN's (1983) research in which "the interviewer had snapped up something that had just happened to slip out" (p.8).

Figure 4: Interview excerpts 3 [34]

Further questioning about personality sees the principal indicate his distrust of breaking personality into a "series of sort of descriptive management-style indicators". This is followed by a statement about a competent beginning teacher that appeared to slip out: "I know one when I see one". Again this statement was "snapped up" with a quick and pertinent question: "How?" which, in turn, leads to a further line of probing about an intuitive approach to appraisal. This is evident in four of the five interview excerpts, Interview excerpts 1, 3, 4, and 5 (see Figs. 2, 4, 5 and 6) and shows clearly a line of probing that encourages the development of descriptions of the phenomenon under investigation. The interview protocol reflects how the interviewer attempts to cultivate a dialogue that helps clarify the principal's viewpoint.

Figure 5: Interview excerpts 4 [35]

Of interest to this study is that the principal not only describes what an intuitive approach is, but also what it is not. In so doing, the principal describes one conception of competence and, by default, another conception of competence. For instance, the comment "it's a composite of everything" can be juxtaposed with the comment "you can't reduce it to a series of descriptive management style indicators". Similarly, "my judgement is better than a checklist" and "I don't sort of go into a class and sort of start ticking" describe the phenomenon in question using a process of contrast. This process of discernment by comparison not only helps describe the conception more clearly (in this case an "intuitive" approach), it also helps describe other conceptions (in this case an "inspectorial" approach to appraisal). FRANCIS (1993) concurs with this approach and argues that an iterative approach that sorts expressions in terms of similarities and differences assists the researcher to arrive at the most satisfactory outcome. [36]

In the final excerpt of the interview (see Fig. 6, Int. excerpt 5: 1), a further approach to appraisal is identified. The principal describes how he talks to a range of people (parents, teacher aides and teachers) to gain impressions about the competence of the beginning teacher. This method of collecting information is different from the intuitive approach described previously where the principal "knows one when I see one". However, both approaches to appraisal are related to each other in that they are incidental in nature and are not carried out in any systematic way. Having decided in the initial scan of the data that these approaches are different, the researcher seeks instances in other interviews. This is done in order to confirm that this informal, incidental data collection by "asking others" about competence or, indeed, by "others telling" the principal about competence is, in fact, an important way in which principals acquire knowledge about competence.

Figure 6: Interview excerpts 5 [37]

Suffice it to say at this point, that a "coarse" analysis of the initial responses of the principal (see Fig. 2 and Fig. 6) reveals five possible paths of inquiry: 1) competence is a "sound knowledge base"; 2) competence is "commitment"; 3) competence is "personality"; 4) appraisal of competence is "intuitive"; and 5) appraisal of competence is done by "asking others". The five categories outlined above represent three related but distinctively different conceptions of competence and two different appraisal approaches. An overview of this "coarse" analysis is captured in Figure 7.

Figure 7: Overview of the "coarse" analysis of interview protocol [38]

The "snapshot" outlined above illustrates only the preliminary stages of the analysis, but is sufficient to capture the "essence" of the early decisions made in the process. From this "coarse", superficial scan, a series of stages involving closer scrutiny of the data was undertaken until the description of the conceptions and appraisal approaches was the "best" that could be achieved. A number of other conceptions of competence and appraisal approaches were identified in the subsequent analysis. The final "fine tuning" was done in the very last stage of research: the "writing-up" stage. The iterative nature of the approach to the analysis of data is an essential aspect of the analysis procedure used in this study and is represented in diagrammatic form in Figure 8.

Figure 8: The iterative nature of the conceptual analysis of interview data [39]

The process involved the researcher moving within and among the twenty-seven interview transcripts and reading and re-reading the text. This process is represented in the diagram by a series of arrows. The results of the analysis led to descriptions of principals' conceptions of competence, and descriptions of the appraisal approaches they use to judge competence. By focusing on one interview protocol, the section was able to illustrate at a much deeper level how decisions in the conceptual analysis of data were made. [40]

7. Conclusion

This paper has addressed a major weakness in reporting the analysis of qualitative research, namely a lack of clarity in describing the mechanical and conceptual analysis processes used. In many qualitative studies involving the use of computer analysis, much is left unsaid, as though the very name of the computer program is sufficient in itself to justify the way the data are analysed. The paper has given a detailed description of the computer program HyperQual2. It argued that computer programs can assist the researcher in the mechanical aspects of analysis, leaving more time for the all-important conceptual analysis. [41]

A series of excerpts from one interview were used to focus on the conceptual analysis of the data. It was shown how patterns in the data were identified and "took shape" in the early, "coarse" stages of analysis. Of course, to "boil down" such a complex process in this way has its shortcomings and leaves out much. However, the "snapshot" approach used to describe the process is apt because rather than attempting to represent "the big picture", the intention was to capture the "essence" of the analysis process by addressing one small part. [42]

1) HyperQual3 requires a Macintosh computer with PowerPC or G3. It costs $180 (USA). It can be ordered from Raymond V. Padilla, HyperQual, 3327 N. Dakota, Chandler, AZ 85225 USA. URL: http://users2.ev1.net/~hyperqual/ (broken link, FQS, Nov. 2002).

Ballantyne, Roy; Thompson, Robert & Taylor, Peter (1996). Discriminating between competent and not yet competent beginning teachers: An analysis of principals' reports. Asia-Pacific Journal of Teacher Education, 24(3), 281-307.

Ballantyne, Roy; Thompson, Robert & Taylor, Peter (in press). Principals' conceptions of competent beginning teachers. Accepted for publication in the Asia-Pacific Journal of Teacher Education, 26(1), 51-64.

Bazeley, Patricia Ann & O'Rourke, Barry (1996). Getting started in research: A series of reflections for the beginning researcher. University of Western Sydney: Macarthur.

Coffey, Amanda; Holbrook, Beverley & Atkinson, Paul (1996). Qualitative data analysis: Technologies and representation. Sociological Research On-Line, 1(1). Available at: http://www.socresonline.org.uk/1/1/4.html.

Denzin, Norman K. & Lincoln, Yvonna S. (Eds) (1994). Handbook of qualitative research. Thousand Oaks: Sage.

Fleet, Alma & Cambourne, Brian (1989). The coding of naturalistic data. Research in Education, 41, 1-15.

Francis, Hazel (1993). Advancing phenomenography—Questions of method. Nordisk Pedagogik, 13(2), 68-75.

Hasselgren, Biorn (1993). Tytti Soila and the phenomenographic approach. Nordisk Pedagogik, 13(3), 148-157.

Lee, Raymond M. & Fielding, Nigel G. (1991). Computing for qualitative research: Options, problems and potential. In Nigel G. Fielding & Raymond M. Lee (Eds). Using computers in qualitative research (pp.1-13). London: Sage.

Lee, Raymond M. & Fielding, Nigel G. (1996). Qualitative Data Analysis: Representations of a Technology: A Comment on Coffey, Holbrook and Atkinson. Sociological Research Online, 1(4). Available at: http://www.socresonline.org.uk./1/4/lf.html.

Marton, Ference (1988). Describing and improving learning. In Ribich Schmeck (Ed). Learning Strategies and Learning Styles (pp.53-82). New York: Plenum.

Marton, Ference; Carlsson, M. A. & Halasz, L. (1992). Differences in understanding and use of reflective variation in reading. British Journal of Educational Psychology, 62, 1-16.

Marton, Ference; Hounsell, Dai & Entwistle, Noel (Eds) (1984). The experience of learning. Edinburgh: Scottish Academic Press.

Padilla, Raymond V. (1994a). Qualitative analysis with HyperQual2. Chandler: AZ.

Padilla, Raymond V. (1994b). HyperQual2 Version 2.1 [Computer Program]. Chandler: AZ.

Padilla, Raymond V. (1999). HyperQual3 Version 1 [Computer Program]. Chandler: AZ.

Richards, Tom J. & Richards, Lyn (1994). Using computers in qualitative research. In Norman K. Denzin & Yvonna S. Lincoln (Eds), Handbook of qualitative research (pp.445-462). Thousand Oaks: Sage.

Svensson, Lennart & Theman, Jan (1983). The relation between categories of description and an interview protocol in a case of phenomenographic research. Paper presented at the Second Annual Human Science Research Conference. Duquesne University, Pittsburgh, P.A., May 18-20.

Tesch, Renata (1987). Comparing methods of qualitative analysis: What do they have in common? Paper presented at the American Educational Research Association Annual Meeting, Washington.

Thompson, Robert (1994). Taking the hypertension out of qualitative data analysis with HyperQual 2. Paper presented at the 24th annual conference of the Australian Teacher Education Association, Queensland University of Technology, Brisbane, July.

Thompson, Robert (1995). HyperQual2 Computer Workshop. Pre-Conference Workshop on qualitative research for participants of the Australian Teacher Education Association Conference, Sydney, July.

Thompson, Robert (1999). Appraising beginning teachers: Principals' conceptions of competence. Unpublished PhD Thesis, Queensland University of Technology.

Robert THOMPSON is Associate Dean (Teaching & Learning) and Deputy Dean in the Faculty of Education and Creative Arts at Central Queensland University, Australia. He trained as a primary/secondary teacher in the UK and taught in England for two years before moving to Australia. He taught for ten years in a variety of schools and was Director of an Education and Professional Development Centre for five years, before taking up an appointment at CQU in 1989 as Faculty Coordinator at a regional campus. The Faculty has recently introduced a suite of new education degrees: the Bachelor of Learning Management, the Master of Learning Management and the Professional Doctorate. These replace the outdated Bachelor and Master of Education degrees. He has been involved in the development, implementation and accreditation of the new programs. His main research interest is in the appraisal of beginning teacher competence.

Contact:

Robert Thompson

Faculty of Education and Creative Arts

Central Queensland University

University Drive

Bundaberg Queensland 4670, Australia

E-mail: r.thompson@cqu.edu.au

Thompson, Robert (2002). Reporting the Results of Computer-assisted Analysis of Qualitative Research Data [42 paragraphs]. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), Art. 25, http://nbn-resolving.de/urn:nbn:de:0114-fqs0202252.

Revised 2/2007