Volume 3, No. 3, Art. 25 – September 2002

Evaluation and Adjudication of Research Proposals: Vagaries and Politics of Funding

Wolff-Michael Roth

Abstract: In this contribution for debate, I attempt to do a critical anthropology of research funding at one national council, the Social Sciences and Humanities Research Council of Canada. In this, I follow its own paradigmatic inscription on the cover of the "Manual for Adjudication Committee Members" (which regulates funding-related evaluation and adjudication at the Social Sciences and Humanities Research Council of Canada) and take "a closer look," an inspection de plus près, at its process, the vagaries and politics included. To guide my anthropological investigation, I draw on different social science theories, which I articulate in terms of the topologies they provide for structuring the social. I draw on a form of writing that integrates third- and first-person perspectives on the social processes of funding and the autobiographical experiences of being and not being funded.

Key words: funding, politics, social theories, first- and third-person perspectives

Table of Contents

1. Introduction

1.1 A third-person perspective on funding ...

1.2 ... and a first-person perspective

1.3 Purpose

2. Social Topologies

3. Structure of Evaluation and Adjudication at SSHRC

3.1 Funding agencies as black boxes

3.2 Program officer

3.3 Assessors and assessments

3.4 Committee and adjudication

3.5 Proposal: Immutable mobile or fluid?

4. Vagaries and Politics of Adjudication

5. Shoring the Agency from Appeals

5.1 Justifying committee recommendations

5.2 Constraining and limiting appeals

6. Concluding Comments

7. Coda

8. Invitation to Discussion

1. Introduction


How do you write about something that you really seem to understand only when you have experienced it? How do you write about something that you understood only through inflicted pain without sounding apologetic or accusatory? How do you write "objectively" about the politics of funding without having experienced the rejection of a grant proposal, however unjustified the decision may have been or appeared? Does it matter that I was actually not too concerned with the rejection? (As I do the final editing on this manuscript, no final decision regarding my proposal has been made.) Does it matter that the rejection provided me with time to do and complete other dear-to-my-heart projects rather than running around to seek, recruit, and train suitable graduate students? Finally, does it matter that I had already planned to write an analysis of the process of funding? [1]

This is a critical investigation of the vagaries and politics of research funding; my observations derive from my participation as applicant, assessor, committee member, and committee chair in funding-related evaluations and adjudications of four councils, the Australian Research Council (ARC), the Canadian Social Sciences and Humanities Research Council (SSHRC), the U.S. National Science Foundation (NSF), and the Fonds FCAR (Quebec). I concentrate my investigation on SSHRC, with which I have extensive experience in all roles.1) [2]


For an entire week, I was chairing a committee that ranked research proposals in the field of educational psychology and areas of education to determine the approximately 30 to 40% that would receive financial support from the national funding agency. Although I was the only one who had read all proposals, I was not able to predict with certainty whether a particular proposal, even one ranked highly by the two readers, would actually be funded. It took only the opinion of one reader who made his or her point very forcefully to move a proposal's rating from high into the region of not fundable. Having submitted my own proposal to another committee, I began to have anxiety attacks: would my proposal receive a score that would make it not fundable? What made it worse, I saw the members of that committee during the breaks and lunch periods, which continuously reminded me that what was happening to proposals in my committee could also happen to mine. [3]

1.1 A third-person perspective on funding ...

An important question in scholarly debate over the past two decades has been about the nature of truth and scientific revolutions, based on historical studies of published research (KUHN 1970, POPPER 1959). More recently, research in the emerging field of the social studies of science focused on science in the making rather than science as ready-made product (KNORR-CETINA 1981, LATOUR & WOOLGAR 1979). Studies from the latter perspective have shown how the nature of scientific knowledge cannot be understood independent of politics, economics, and other social processes. That is, what we encounter as new facts, depending on our familiarity with the particular field through the daily news, popularizing media, or specialized, academic literature is a function, among others, of the nature of the projects that get funded. A particularly nice case in point is the history of artificial intelligence research in the United States, which, after what turned out to be an influential academic paper, shifted from a symmetrical approach to funding neural network and von Neumann architectures (which had led to traditional, symbolic artificial intelligence and cognitive science) to the almost complete abandonment of the former approach (OLAZARAN 1996). Following the predilections of their directors and program officers, the U.S. Advanced Research Projects Agency through its Information Processing Techniques Office, for example, directed its funding exclusively to symbolic artificial intelligence (AI), so that the history of AI really "is a paradigm of massive and effective state intervention in science and technology" (GUICE 1998, p.107). In many countries, though, program directors and officers have less influence than they have in U.S. agencies, rhetorically constructing their fairness through reliance on the peer review system. [4]

There are differences in the structure of funding and the way funding agencies operate across nations. I am most familiar with funding in Canada, though I have participated in evaluating proposals in Australia and the USA, too. In Canada, there are three major federal funding agencies, the Social Sciences and Humanities Research Council (SSHRC), the Natural Sciences and Engineering Research Council (NSERC), and the Medical Research Council (MRC). Differences in the way evaluations and adjudications are handled by the agencies and the participating scholars also exist across different funding councils. In the present article, I will analyze only the processes involving the social sciences and humanities; in particular, I will focus on the standard research grants program, which is not only the largest of the programs from the agency's perspective but also the one most commonly known by researchers. The Canadian minister for industry summarized the program in the following way:

"Standard Research Grants (SRG) is SSHRC's largest program, with a 2000-2001 budget of $39.3 million. This program provides three-year grants to individual researchers and to small research teams. In 2000-2001, the program supported 642 new research projects in all disciplines of the social sciences and humanities. This represents a 41.6% success rate, a slight decrease from the 43% funded in 1999-2000." (TOBIN 2001, p.10) [5]

The standard research grants program accepts applications in 17 different categories that cover the various social sciences and humanities disciplines, including one category for interdisciplinary/multidisciplinary research. Neglecting for the moment that the success rates differ across the committees, the very articulation of success co-articulates failure, though the latter normally remains unmarked. Not receiving funding is a failure, even if it was the result of injustice, sloppy work, or bias and prejudice. On this side, only the successes are marked and remarked. [6]

Does the peer review system guarantee that every proposal is fairly evaluated? What are some of the potential problems in the peer review system, which crucially selects between research projects being done versus research projects not being done and, ultimately, what will be published as outcome of the research? But there are other questions, too, that we could raise; these become particularly salient when we look at the effect that proposal adjudication and, with it, the peer review (evaluation) process have on the individual researcher. How do individuals experience the process of seeking research funding, particularly the moments associated with the process outcomes? What is the impact of a particular result when there appears to be, from the perspective of the researcher, clear evidence of misconduct, bias, prejudice, or carelessness? [7]

1.2 ... and a first-person perspective

On the other side is the experience of success or failure in the grant-seeking process. Writing and submitting applications for research funding has a very personal side, even for those who normally are successful and who do not feel pressured by their heads of department, deans, or other parts of the university structure. As individual researchers, we not only celebrate successes but also have to cope with presumed shortcomings, even more so when we detect injustice and prejudice. [8]


For months I was wondering about how my proposal would fare in this committee for interdisciplinary research, where I had not submitted an application before. There were moments when I thought that my proposal could suffer the same fate as that of another well-known researcher in my own committee: my proposed research program could be rated 5.9 (out of 10), thereby failing the minimum score required, and even a high score on my past record would not help me. At other moments I felt much more positive, figuring that even if I was given the lowest passing score, 6.0, a rating of 9 on my scholarly record, the score that I had received three years before, would give me a combined score of 78%, where the weighting is 60:40 for previous record and research program, respectively. In the weeks before I found out the results, I was on a roller coaster, with moments of high when I felt that I would surely be funded and moments of low when I felt that the vagaries of the committee process would lead to a not-funded decision. I was attending conferences and met many colleagues who already had received their results. (Despite the confidentiality agreement to which committee members and SSHRC personnel are committed, there are always researchers who know the results prior to the official notification!) The week before returning home was the worst: highs were following lows and lows were following highs. Upon returning, I found the email from my dean: I did not receive the funding sought. My score was so low that it fell into category 4A, recommended for funding but without enough money available. I could not understand: had I not figured that under the worst circumstances, I would get a score that ought to have been sufficient? But I had to wait another several weeks before I received the official notification including the scores and the justification of the committee recommendation.
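
The arithmetic in this passage can be retraced in a few lines. The following is a minimal sketch, not SSHRC's actual procedure: the 60:40 weighting and the minimum program score of 6.0 are taken from the text, whereas the function name, the constant names, and the convention of returning None for a program score below the minimum are assumptions made for illustration.

```python
# Illustrative sketch only. The weights and the minimum score come from the
# text; all names and the None convention are hypothetical.
RECORD_WEIGHT = 0.6       # weight of the record of research achievement
PROGRAM_WEIGHT = 0.4      # weight of the proposed research program
MIN_PROGRAM_SCORE = 6.0   # lowest passing score for the research program


def combined_score(record, program):
    """Return the weighted score as a percentage, or None when the
    research program falls below the minimum score (both on a 0-10 scale)."""
    if program < MIN_PROGRAM_SCORE:
        # A strong record cannot compensate for a failing program score.
        return None
    weighted = RECORD_WEIGHT * record + PROGRAM_WEIGHT * program
    return round(weighted * 10, 1)


print(combined_score(9.0, 6.0))  # record 9, lowest passing program score
print(combined_score(9.0, 5.9))  # program score just below the minimum
```

With a record score of 9 and a program score of 6.0, the sketch reproduces the 78% mentioned above; with a program score of 5.9, the record score no longer matters.
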
Record of research achievement: "Members found the candidate's record quite respectable, though it noted assessor 8's concern that some of his research papers appeared to be somewhat repetitive. The committee found the supporting document of good quality." (Notification from funding agency, p.1) "As evident in the bibliography and in Roth's vitae, he is an unusually prolific writer and his research interests are broad... . The quality of Roth's writing and his contributions to the field of science education are good, in my estimation. Many of his papers are repetitive, and he borrows extensively from others' more original contributions ..." (Assessor 8, p.2) Assessor 8 had made his comment without providing supporting evidence or pointing the committee to the particular place in the document where he found repetitiveness and borrowing "from others' more original contributions." The meeting justification not only noted the "concern that some of [Roth's] research papers appeared to be somewhat repetitive" but also omitted what can be read as a qualifier: "his contributions to the field of science education" directly precedes the comment about repetitiveness, so that the repetitiveness is likely to pertain to that field. There is no indication that the committee actually cared to check that only three of the 40 publications on the limited two-page list pertained to journals in science education, the others being books and journals in social studies of science, applied linguistics, educational psychology, education, and cybernetics. In my initial response to the program officer responsible for my file and following "proper" appeals procedures, I wrote: [9]

"I just received the evaluation of my proposal. I have some very grave concerns about the score that I have received on my research record.

I am writing to you following the procedures for appeal listed on the SSHRC website.

My submitted record shows:

Column groups: Refereed | Other refereed | Non-refereed

| Books | Chapters | Articles | Proceedings | Papers | Professional | Reviews | Lectures |
| 7 | 19 | 118 | 20 | 149 | 5 | 5 | 49 |

During my work on SSHRC committees (I chaired Committee 12 this year), I have not seen a single application with such a record for a single scholar.

It is incomprehensible to me and seems to smack bias (on reviewer 8's part, perhaps) that I would receive 7.45.

My articles listed have appeared in the highest rated (SSCI) journals of several fields, and I have had 7 awards for the quality of my scholarship." (My initial reaction to the program officer, May 14, 2002) [10]


In this initial contact, I communicated grave concerns; I felt and still feel that there was bias, perhaps on the part of one of the assessors, and perhaps a lack of diligence on the part of an adjudicating committee member, which would not be, according to the information provided to me by insiders, the first time. If my case were singular, one could move on, hurtful though it is to me, and trust in the justice of the system in general. But I am not the only scholar who has serious concerns about the process. Another, world-renowned Canadian scholar recently gave me access to a letter written to the granting council that states: [11]

"I write to express severe dissatisfaction with [the committee's] conduct in evaluating my application [...] and lay serious charges that the [committee]:

failed to apply due diligence that is obliged by the high standards of scholarly review to which I am certain SSHRC subscribes, namely, to be open and clear about reasons for judgments, and to be consistent and fair in adjudicating applications.

acted with prejudice. By the word prejudice, I mean exactly and only this:

a preformed opinion, usually an unfavorable one, based on insufficient knowledge, irrational feelings, or inaccurate stereotypes

disadvantage or harm caused to somebody or something (Encarta World English Dictionary © 1999 Microsoft Corporation)." [12]


An insider to the agency told me that the decisions are a function of "the management and culture of committees of the division of research funding. I am convinced you would have received funding if your project had been submitted to one of the two education committees." [13]

1.3 Purpose

In this contribution for debate, I attempt to do a critical anthropology of research funding at one national council, the Social Sciences and Humanities Research Council of Canada. In this, I follow its own paradigmatic inscription on the cover of the "Manual for Adjudication Committee Members" (which regulates funding-related evaluation and adjudication at the Social Sciences and Humanities Research Council of Canada) and take "a closer look," an inspection de plus près, at its process, the vagaries and politics included. To guide my anthropological investigation, I draw on different social science theories, which I articulate in terms of the topologies they provide for structuring the social. [14]

2. Social Topologies

Social topologies come in different forms; in the sociological literature, one can find theorizing in terms of regions, networks, and fluids (MOL & LAW 1994). First, in a regional topology, social objects are clustered together and defined by relatively clean boundaries; Erving GOFFMAN (1959) articulates social space into regions, such as "front," "back," "in the wings of," and "outside" the stage, depending on the relationship of the audience to the performance. While the "official stance" of the performers is visible on front stage, the impression fostered by the presentation is knowingly contradicted as a matter of course backstage, indicating a more "truthful" type of performance. In the adjudication process, "objectivity" and "objective evaluation" are on the front stage; on the backstage, things are being said that clearly smack of bias. On the backstage, the conflict and difference inherent to familiarity is more fully explored, often evolving into a secondary type of presentation, contingent upon the absence of the responsibilities of the public performance. To be outside the stage involves the inability to gain access to the performance of the team, described as an "audience segregation" in which specific performances are given to specific audiences, allowing the team to contrive the proper front for the demands of each audience. This allows the team, individual actor, and audience to preserve proper relationships in interaction and the establishments to which the interactions belong. [15]

Second, there are (actor) networks characterized by relations between rather heterogeneous elements (human, non-human); the nature of the relations constitutes socially relevant entities such as distance, difference, and power (e.g. LATOUR 1987). In actor network theories, each individual, group, technology, company, belief, finance, raw material, or artifact counts as an actor. Actor networks do not distinguish between human and non-human actors on a priori grounds, and therefore constitute "symmetric anthropologies" (LATOUR 1993, p.101). Any social process is modeled by including all the relevant actors as nodes in a network; methodologically, this means that researchers have to follow the various human and non-human actors that are present. Stability arises from "immutable mobiles" (e.g., inscriptions) that circulate in the network and thereby put different actors in relations. Changes necessarily ripple through the network and therefore have consequences not only for the individual actor but, reflexively so, for the network as a whole. Recently, I used actor network theory to articulate and explain the origins of editorial power, authorial suffering, and the stability of the "publish or perish" practices in North American academe (ROTH 2002). Actor network theory was useful because it allowed me to represent publishing, reviewing articles, editing journals, and undergoing tenure and promotion review as a seamless web of activities and actors. This web is relatively stable (and therefore difficult to change) because of the stakes involved, documents exchanged, biographies, and the history of the community. Most importantly, actor network approaches emphasize that the "same" social process can be viewed from the perspective of any of the actors: the Nobel Prize winning physicist, the cleaning personnel who make work in the lab possible, or the graduate students who worked through many nights to get the data on which the award was ultimately based. 
In fact, taking the perspective of the non-heroes provides a different, less complimentary, and down-to-earth account of the events that others recount in terms of heroism and in the form of master narratives (REDFIELD 1996). Even more surprising insights can be gained on social events, even cognition, if one takes the perspective of salient artifacts (e.g., tools) that have some currency in the actor network. For an audience of mostly cognitive psychologists, I once featured the story of a glue gun in the development of practices in a hands-on science class (ROTH 1996). [16]

Third, the metaphor of fluid has been used to theorize both social space and social (including non-human) actors (MOL & LAW 1994). In contrast to a regional topology, fluid spaces do not have clear boundaries, objects generated within them and the objects/actors that generate them are not well defined, and boundaries (separating, e.g., normal from pathological) are gradients (de LAET & MOL 2000) rather than the discontinuities characteristic of traditional ontologies and mereologies (e.g. SMITH 1997).2) Fluid topologies and fluid objects are not easy to deal with, because their ambiguous boundaries make them resist easy classification; their identities cannot be determined neatly, once and for all. Inside, outside, and borderline, important to making distinctions in traditional ontology (e.g., what is the nature of a point on the boundary separating green and red surfaces), cannot easily be distinguished, nor can similarity and difference. They come, as de LAET and MOL point out in an interesting twist on SMITH's (1997) problem, "in varying shades and colours" (de LAET & MOL 2000, p.660). A fluid ontology gives rise to other interesting entities, such as mixtures, and properties, such as robustness. Finally, a fluid is inherently dialectical because it accepts the other two topologies as coexisting with itself. Thus, fluid spaces are not better than regions or networks: "Fluid objects absorb all kinds of elements that could only ever have come into being within the logic of other topologies. Doctors use numbers and observations. They mix them together happily." (MOL & LAW 1994, p.663) [17]

And thus I will happily mix discourse analyses of the contents of policy documents, reviewer comments, and minutes of meetings; observations made in meetings where decisions about funding were being made; and computer models of decisions that implement an actor network ontology. I will also happily mix first-person perspectives with third-person perspectives, which have been used by traditional ethnographers and are still the most acceptable to most journals of social science research. [18]

3. Structure of Evaluation and Adjudication at SSHRC

3.1 Funding agencies as black boxes

The peer review process has been implemented, as in other parts of academia, because it is often thought to be the best of all systems to assure fairness and quality. But, as I have argued elsewhere, the very structure of peer review, especially in blind and double-blind review processes, lends itself to heinous attacks on the part of reviewers (even editors) against which the author of the reviewed piece has no recourse; it also gives some individuals, those that are placed in special nodes of the network such as editors of journals, enormous power over what and who gets published and who does not (ROTH 2002). [19]

In the field, funding agencies are referred to as if they were singular actors. Thus, when talking to academics, one can hear "SSHRC (Canada) did not fund my proposal," "In the DFG (Germany), there is a bias against qualitative research," or "I got a career grant from NSF (USA)." That is, such parlance divides the field into different regions: the agency, often associated with the city where it is located (in particular contexts, "I am going to Washington" means "I am going to NSF"), and the world beyond. The agency is treated like a single point in the topography of funding in the social sciences; it is also an actor, dispensing funds; and, as LATOUR (1987) taught us to think about such issues, it is a black box. To the outside, the infrastructure is invisible, but it can, under certain circumstances, be opened up, though, as I will show here, among other things, it cannot be opened by the ordinary academic out there. A relationship of power is enacted at the very moment that more researchers attempt to get funding from the agency than there is money to fund; somehow a decision is being made to fund some but not others.3) Because the agency dispenses a desirable good, the competition for this good stabilizes the agency and its infrastructure; it is thus that relations of power come about rather than existing a priori.4) If there were no competition, a small office would suffice to dispense the available funds. In this way, those who are not funded, even those subject to a non-funding decision based on a biased process, support the funding agency and its infrastructure. This stability is based, to a considerable extent, on the fact that the processes within the black box are invisible to most academic actors, who are therefore subject to, or shall I say subjected to, the decisions regarding their requests without recourse (ROTH 2002).5) [20]

In the Canadian Social Sciences and Humanities Research Council, peer review is instantiated in two stages:

SSHRC grant applicants submit detailed research proposals that are evaluated:

first, by a series of external assessors outside SSHRC who are experts in the research field in question;

second, by peer review committees composed of other experienced researchers.

The review committees recommend to SSHRC which proposals to fund, based on the highest standards of academic excellence and other criteria, including the importance of the proposed work to advancing knowledge. (http://www.sshrc.ca/web/apply/policies/appeals_e.asp) [21]


I have become quite disillusioned with the peer review process after an experience with one of the highest ranked journals in education (based on impact ratings by the Social Sciences Citation Index). I had submitted an article that was returned, after a lengthy period, with two recommendations for "rejection." I took the article, gave it a new title but changed nothing in the body of the text and then submitted it to the same journal. This time it came back highly rated with one "accept as is" and one "accept with minor changes." [22]

Before articulating and theorizing the adjudication process (Section 4), I describe some of the key actors and their duties in the process: the program officer, the committee members, and the assessors and their assessments. [23]

3.2 Program officer

Without doubt, the program officer, who selects assessors, committee members, and committee chair, has a critical role. His or her acquaintance with and knowledge of the field is crucial for guaranteeing the fairness of the process. Unfortunately, at SSHRC, the program officers often do not know the field and thereby leave the process open to failure.

"The program officer serves as both resource person to the committee and SSHRC's representative during the adjudication process. The officer is responsible for ensuring that the committee understands fully and applies consistently all relevant SSHRC policies and regulations in order that all applications receive equitable and fair treatment. The officer will intervene as necessary during the adjudication to guide and advise the committee and to interpret SSHRC policy. The Program officer also alerts the committee to any problems with specific applications or recommendations and suggests possible solutions or alternative recommendations." (SSHRC 2000, p.12) [24]

At SSHRC, the program officers have, or rather should have, relatively little impact on the decision, though their choice of reviewers and their assignment of readers can make or break a proposal. The field of expertise of a program officer may not lie in the area that he or she is responsible for: someone trained in anthropology may be responsible for the process in educational psychology and areas of education. In this case, officers are less familiar with the microstructure of the field, the relations that make friends and foes; they may select an assessor who has a friendly or inimical relation to the proposing author without the nature of this special relation being declared. The officer is not an insider in the field and, because of this ignorance, is likely to commit errors in the selection of assessors. In actor network terms, this potentially very powerful actor on the inside of the black box makes decisions that have an aleatory aspect, which, in this case, is not a way of eliminating bias (in contrast to the reigning paradigm of randomization in experimental designs). In addition to making these decisions, the program officer organizes the meeting and sends the files and returned assessments to committee members and chair. [25]

The role of the program officer differs significantly from that at, let's say, the U.S. National Science Foundation (NSF). There, the program officer frequently is a specialist in the field, an academic him- or herself, who selects the four reviewer-panelists, whom he or she often knows personally from conferences and other venues. Unlike in my Canadian context, I have on many occasions experienced NSF program officers intervening in discussions, attempting to put a better light on a particular proposal and the proposing faculty, especially when there are several unfavorable reviews. In any event, if they do not like the reviews, the program officers often solicit more, either during the meetings or subsequent to them, to get reviews of the kind that support their funding or non-funding inclinations. Because of their knowledge of the field, they can select the reviewers such that they are likely to come up with the preferred assessment. (A friend serving as the editor of an international journal told me repeatedly that it is not difficult to get specific results in the review process. It all depends on whom he selects as reviewers to get a proposal rejected or accepted, thereby biasing the process in favor of or against a particular article or proposal.) [26]

3.3 Assessors and assessments

Ideally, two, sometimes three, assessors external to the adjudication process assess each proposal. Their reports are intended to assist the committee, which may not have the required expertise, in evaluating the proposal: "[...] adjudication committees depend on the advice of external experts since they do not always possess the range of expertise necessary to competently judge all applications." (SSHRC 2000, p.17) [27]

If no suitable assessors can be found, "the committee is asked to give the application an in-depth review" (SSHRC 2000, p.7) and "must take special care to justify its recommendations [based solely on committee members' assessments] as fully as possible" (SSHRC 2000, p.18). [28]


The committee discussion more often than not disregards the assessors' reports. On the other hand, readers who have not taken the time to carefully read the assigned files tend to draw on the reviews more heavily. [29]

The assessors' reports are subsequently made available to the review panel, and in particular to the two readers assigned to each proposal.6) Whether the two readers (and the committee) draw on the assessors' reports cannot be determined a priori. Frequently, the readers and the committee as a whole judge the assessment as too complimentary, only infrequently as too critical or biased. Some assessor reports read as if there were ideological or personal differences between assessor and applicant, differences that are then played out in the review. Information that has no grounding in the proposal itself is used to provide a negative critique and, worse, to slander an application. Personal or paradigmatic bias can also exist between the applicant and a committee member without being openly declared as such. [30]


I experienced the most scathing and biased attack on any proposal during an adjudication at NSF in Washington. A non-traditional artificial neural network (ANN) proposal had received very good and excellent assessments from all four panelists. Although this would have been sufficient, the program officer decided to solicit another review, this time from a world-renowned scholar who publicly defended GOFAI (Good Old-fashioned AI) and hardwired linguistic competencies of humans. Within an hour, the scholar had not only "read" the 15-page, 10-point font proposal but also written a scathing two-page review. It had taken me, one of the assigned readers and familiar with several domains of the described interdisciplinary effort including ANN modeling, an entire afternoon to read it. [31]

"On occasion, the committee reviews an assessment which it judges to be biased, unfair, or personally hurtful to the applicant. In such a case, the committee may wish to use the committee comments to inform the applicant that it does not endorse the views of the assessor in question." (SSHRC 2000, p.18)

How does a committee that depends on the advice of external experts because it does not possess the expertise decide that an assessment is biased or unfair? If there had been conflicts within the field between the applicant and one or both assessors, how would anyone be able to uncover the apparent conflict and injustice, especially given that the program officers have little understanding of the field? How does a committee on which none of the members have expertise in the field of the application deal with an assessor statement such as "Many of his papers are repetitive, and he borrows extensively from others' more original contributions"? How does it deal with the statement when there is no evidence provided, just a claim, period! When there is no basis for such an assessment, it constitutes mere bias, opinion, which does not even find support outside the proposal. [32]

Some fields are relatively small; consequently, all of the assessors know and are familiar with the applicant. Their reviews point out all the strengths of a proposal, are therefore inherently excellent, and recommend funding, often basing the recommendation on the applicant's past record rather than on the details of the particular proposal. [33]

Given the diversity of committee members and given the program officer's lack of familiarity with a particular field, the door is wide open for acrimonious, biased, heinous, and personal attacks against an individual researcher. How can a well-functioning committee take unsupported claims such as "His papers are repetitive" and "he borrows extensively from others' more original contributions" as matters of fact for basing a decision? [34]

Unfortunately, the evaluation and adjudication procedures and the guidelines governing appeals give applicants only a slim chance to uncover bias and to trigger remedial actions.

"Under the provision of the Privacy Act, the name of the external assessor or appraiser of an application for SSHRC funding constitutes personal information about the assessor, not the applicant. Applicants will have access to the full text of the external assessments in the research support programs, with the exception of the name of the assessor." (SSHRC 2000, p.5) [35]

|

A friend who works in the USA told me that one way of making sure that certain foes and enemies do not review your NSF proposal is to make them members of your national advisory board; conversely, if there is someone who you expect will provide a more favorable review, you do not make them a member prior to the funding decision. Once you have secured funding, you change the national advisory board, excluding and including individuals so that it reflects a group that you can work with. The project as a whole becomes very fluid, changing its nature and thereby limiting the opposition that it might experience at different moments in time and in different social locations. [36] |

3.4 Committee and adjudication

The program officer selects a committee whose size depends on the number of files submitted to a particular area; in the two education committees and the interdisciplinary committee, there can be between 120 and 140 files each year. The officer selects committee members according to a set of criteria intended to ensure

"[t]he overall competence and credibility of the committee;

the scholarly stature of the individual nominees;

appropriate representation on the basis of areas of expertise, university, region, language and gender;

an appropriate knowledge of both official languages. (In order to participate in bilingual discussions without simultaneous translation, members must have a reading knowledge and good aural comprehension of the second official language.)" (SSHRC 2000, p.10) [37]

|

Program officers have told me that readers sometimes barely know the files assigned to them. These are readers that are not asked to return. Such readers often base their comments entirely on the assessments. [38] |

Putting together a committee given the listed constraints (plus the additional constraint of actually finding a professor who not only fits a particular profile but also is willing to serve on the committee given the enormity of the task) is a complex problem. The problem is particularly complex because "appropriate representation" is not only a matter of fairness and representation, but also an issue of political correctness in a country with diverse regions, cultures, and universities and of gender equity. Officers feel quite constrained; as one of them once wrote, "we have a set of criteria that we have to respect in the selection of persons that may sit on our committees. I need a person from the province of Quebec."7) Appropriate linguistic representation of and competency in the two official languages (English, French) is the content of two of the four bullets. It is not surprising that, even under the best of circumstances, equity along all dimensions is practically never achieved. [39]

|

Being fluent in both official languages, I have no difficulties following the deliberations in either one. In fact, as committee chair I insisted on each member's choice to speak in his or her preferred tongue. At the same time, I know that several individuals in the meeting cannot follow what is being said. At times, those of us fluent in both languages translate. At other times, the mere fact of having to adjudicate 140 files in four-and-one-half days militates against delayed translations as a permanent fact. So at any one point, some of the members neither understand nor contribute. In the end, we nevertheless note a collective, committee decision. [40] |

Some committees have a tremendous charge, having to adjudicate 140-odd proposals. In any event, each proposal is assigned to two committee members, Reader A and B. Readers have approximately six to eight weeks to read about 30 to 40 files and rate them on a scale from 1 to 10. The meeting is then convened in a special place, a particular region of the country (in Canada, we often talk about regional disparities), the nation's capital. During the meeting (on a stage inaccessible to most proposing authors), Reader A presents his or her considerations, ending with the presentation of the scores for past record and present proposal. Reader B makes an equivalent presentation. If the scores are close, and especially after a day or two of meetings, the two members quickly settle on a score, changes to the original scores being frequently proposed prior to making the comments. A discussion follows in case of discrepancies between the two assessments. When there are differences between the two readers, especially differences that they do not seem to be able to resolve, other members enter the conversation. The committee chair mediates the deliberations and summarizes the consensus that emerges. [41]

|

There are also observers, whose task it is to further assure fairness of the adjudication process. However, when the "observer" enters the room, the committee seems to be on its best behavior. None of the almost bitter discussions that oppose two members of the committee occur in the presence of the observer, nor do I observe those moments that, in my inner conversations with myself, I call "ganging up against a researcher or a research group." Very successful researchers or groups with lots of funding are more likely to be associated with a negative bias in a committee conversation than lesser-known and individual researchers. When we deal with the proposal of a well-known researcher, one might hear comments such as "the researcher just repeats him/herself," "he/she has already gotten grants for this numerous times," "this is only a marginal increment over what he/she has done in the past." For a "grant-writing machine," that is, a group of researchers who write multiple grants, taking turns in being the lead researcher (PI or principal investigator), I can hear in addition comments such as "they don't need it" or "they already have another one." All of these comments negatively bias the conversation. [42] |

During the first two days, the committees actually develop something like a personality—at least those that I have worked on seemed to develop one. Being cooped up for eight or nine hours in the same room (sometimes without windows), one comes to know arguments and argumentative styles, particular sensitivities, preferences with respect to particular research methods and theoretical frames; and one develops friendly inclinations towards particular others in the room. [43]

|

I have repeatedly had the curious experience that the committee almost acted as an organism despite the often great diversity of the individual members. At times, this new organism was not dispassionate in the way objectivist philosophers portrayed science; it could be vengeful when it felt offended and inflict punishment. I was unable to explain not only this emergent behavior but also how I had become a part (an organ, a member) of this organism myself. In this collective, my individual I, which I might have experienced reading a proposal in the isolation of my office, was mediated and had taken a new identity to support the functioning of the emergent organism. There was this strange dialectic that I became part of and supported decisions that on my own, independent of the collective, I would not have and did not support. For example, I had come into a meeting with a high score on the program of a particular proposal and ended up consenting with all others that the proposal was below the funding threshold. [44] |

We can look at the committee, although it looks like a singular agent to the outside, as a network of actors. Because of the connections, and the stipulation that any decision has to be consensual, the identity of each actor is not the one that he or she would enact in a different network. Rather, new identities never experienced before (and perhaps never after) may emerge as a result of the particular situation that brings together diverse individuals under specific conditions and principles (ROTH et al. in press). [45]

Before modeling the decision-making process about how to interpret a proposal and ultimately, whether to fund or not (see Section 4), I show how the proposal itself can be understood as an immutable mobile or as a fluid. [46]

3.5 Proposal: Immutable mobile or fluid?

To understand social processes, different topologies have been proposed—my exposition thus far exhibited two such topologies: places and networks. "Ottawa" and "SSHRC" (funding agency) as opposed to the geographic location and researcher status of individual applicants implicitly uses "geography" to create distinctions. The expression "Going to the trough" to refer to seeking funding also embodies this topology of places. On the other hand, my description also articulated actors that stand in particular relations—applicants, program officers, program directors, assessors, and committee members. These actors form a network, an actor network (LATOUR 1987); the applicants use their proposal to enroll assessors, individual committee members, the committee as a whole, and, ultimately, the president who acts for and represents the agency ("SSHRC staff submit recommendations for funding to the President of SSHRC for approval" [SSHRC 2000, p.19]). That is, each proposal travels in the network, which includes different geographical places, from the applicant (and his or her institution) to SSHRC (Ottawa), to applicants and committee members and their home institutions, and from the committee members back to Ottawa for the adjudication. As the proposal text does not change when it is copied and while it travels to different places and actors, it takes the aspect of an immutable mobile (LATOUR 1987). It is the flow of these immutable mobiles in networks that stabilizes the network; it is the lifeblood of these networks. Without them, the network would not exist. [47]

Although there is an aspect of immutability, there is also an aspect of flexibility, for the proposal means different things to the different actors and in different places. These differences can be used to identify boundaries in a topology of places, which has given rise to the notion of boundary objects (STAR & GRIESEMER 1989), which are, like other material and technological objects, subject to interpretive flexibility (e.g. BIJKER 1995). The proposal, qua boundary object, travels to different places where it is interpreted flexibly. The same research record as articulated in the proposal can therefore be evidence both for originality and for copying, for distinction and for ordinariness:

"As a whole, it is evident that the researcher distinguishes himself by his research record, both in terms of its quantity as in its quality. His previous work both prove his originality and are of interest to more than one discipline ..." (Assessor 5, p.1, my translation)

"Many of his papers are repetitive, and he borrows extensively from others' more original contributions ..." (Assessor 8, p.2) [48]

The committee members, individually and as a collective, may agree with one of these assessments or interpret the record in yet another way. This is an ontology in which the same object, with a constant internal structure, is merely interpreted differently. But we can think of the proposal in a different, dialectical way, leading to a different ontology. [49]

The proposal can be viewed as a fluid, changing (a part of) its identity, in the same way that human beings are characterized by different identities when they change their social location: they can be powerful (as father towards child) and powerless (as assembly line worker) at the same time; they can be, as history has shown, both loving (as fathers towards their children) and demonic torturers (as guards in concentration camps, white police officers on duty beating up on blacks [e.g. Rodney King affair]). In the same way, the proposal changes its identity, is something in its relation to different people and groups. At the same time, there is also something constant about it, some aspect of materiality that doesn't seem to change even if replaced by a copy. This is the dialectic aspect of identity, difference in the face of sameness, sameness in the face of difference; this aspect of identity has been captured in terms of the tensions between idem and ipse identity (RICOEUR 1990). [50]

Conceived as a fluid, the changing identity of the proposal is not surprising. Fluid spaces (e.g. academe and funding agencies) do not have clear boundaries and "the objects generated inside them—the objects that generate them—aren't well defined" (MOL & LAW 1994, p.659). This makes it difficult to define boundaries; even those between the pathological (leading to a non-funding decision) and the normal (suitable for funding) are no longer stable and unambiguous. The same proposal that was highly rated by the assessors and both readers as meritorious for funding may end up, after the committee meeting, having some pathology that makes it unfit for being recommended. That is, even if all a priori conditions were positively biased toward a particular identity of the proposal-fluid, the adjudication meeting may give it a different identity. In a fluid space, it is not possible to nicely and neatly establish boundaries (as is attempted in ontology [e.g. SMITH 1997]), and therefore identities, once and for all. [51]

4. Vagaries and Politics of Adjudication

|

During a recent adjudication meeting, I sat with nine other professors around a table having to make decisions about funding or not funding a large number of proposals. For each file, we came to a decision. Sometimes it took a long time. Sometimes a file that came in with high scores retained high scores. At other times, despite initial high scores, an application was scored so low as not to be eligible for funding. As the chair, I attempted to predict the contributions of individual members to a particular file. But more than once I was astonished at how, for example, two individuals sometimes supported the same file, whereas their input supported opposite decisions (funding, not funding). During some of the specific discussions, I was not in a position to make any accurate predictions as to their outcome. Some members spoke in favor of funding, others provided arguments why the project should not be funded, and still others did not speak out at all. At the end of each discussion, however, the committee, through its chair, formulated a consensus position representing the committee as a whole. The "committee consensus" is therefore a construction in more than one sense. First, it is socially constructed because of the involvement of more than one person and the use of language, a cultural tool, to make it what it is. Second, it is a construction, a front, a lie, because many members of the committee actually never are in a position to contribute. [52] |

Looking at the entire process of adjudicating any one file after the fact, in an analysis not unlike the one MEHAN (1993) made about the "social construction" of a disability, we might easily come to the conclusion that the decision was "socially constructed." Accordingly, each member contributed his or her pieces, the building blocks from which the collective constructs the outcome. [53]

|

I experienced myself as taking on another person's position, being more or less convinced by the argument he or she had been making. At other times, nothing that another person said seemed to be able to get me off my own position. In a few cases, we seemed to get stuck, one or two insisting on supporting funding, one or more others insisting on not funding. None seemed to be willing to move. As the committee chair, I asked those who had not contributed to the particular conversation to declare and support their own preferences with regard to the assessment of a file. Going around the room, a sense usually emerged of the group's predilection for one or the other solution, and a decision could ultimately be agreed upon unanimously. In isolated instances, we deliberated for one or one-and-one-half hours before we got to that point. [54] |

When this occurred for the first time, images of some of my modeling efforts emerged; I had translated an actor network approach in which each statement made by a person in a meeting is modeled as a semiotic actor that stands in some relation to other statements (actors), supporting some of them while contradicting others.8) My modeling efforts show that even minute new input may provide sufficient change to the overall constellation to get the decision-making process going again and bring it to one solution or another. [55]

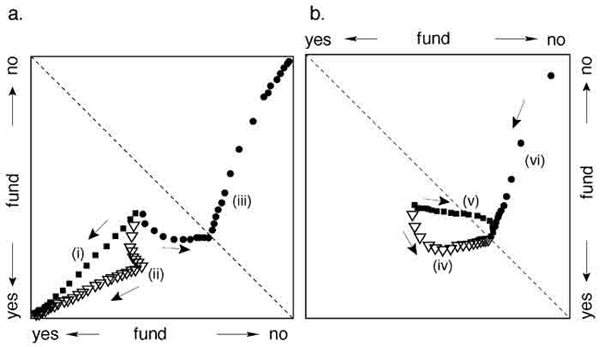

Figure 1 shows the outcome of the decision-making process for different scenarios. Or, in terms of the fluid ontology, the committee attempted to arrive at its own understanding of the proposal's identity. I model each statement that is made in a conversation, and that describes an aspect of the proposal's identity, as a node; some statements support one another and are therefore modeled as supporting (reinforcing) links between nodes. Some pairs of statements are contradictory, each supporting a different identity, which I model in terms of links between nodes that weaken each other. Some statements do not relate to other statements, which is characterized in the model by the absence of a link. The final model is therefore a network of statements about a proposal that mutually reinforce, weaken, or are neutral to each other. The outcome of the process is a solution with the least constraint in the network. (More details of this form of modeling, and a concrete case of modeling one decision-making process, can be found in ROTH [2001].) The network relaxes. Mathematically, the system finds itself somewhere up on a mountainside in an n-dimensional landscape and is moving (could we say flowing?) down the steepest slope until it ends at some lowest place (lowest state). [56]

In Figure 1a, the committee starts out mildly favoring a decision to fund. However, depending on the ways in which the same propositions affect one another, the committee quickly comes to a supportive decision (Fig. 1a.i), supports funding after initial consideration to the contrary (Fig. 1a.ii), or shifts during the considerations to support not funding (Fig. 1a.iii). The fluid seems to flow in one direction but then moves off in the other.

Figure 1. Modeling group decision making when some propositions favor funding and other propositions favor not funding. Propositions can be supportive of other propositions or contradictory. The "strength" of support or contradiction corresponds to how emphatically the statement is presented in the meeting. a. The three scenarios are based on the same set of propositions (semiotic actors), which are, however, related to one another more or less strongly because of the different levels of emphasis with which they have been put forward. The committee begins without strong commitment to recommend funding or not funding. b. Despite very different starting positions, one strongly favoring not funding, the committee ends up in a stalemate. The three scenarios are based on the same set of propositions, which are, however, put forward with different levels of emphasis. [57]

Figure 1b models the different situations in which the committee was hung because both decisions (funding, not funding) were concurrently being supported so that a consensus could not be reached. The different trajectories in Figures 1b.iv and 1b.v model the different ways in which the committee reaches the state of indecision. In Figure 1b.vi, despite an almost certain starting position of not funding, the committee moves toward a state where both funding and not funding are supported equally well. [58]

The model is somewhat simplistic in that it only models the changing strength of the propositions but not the relation between them; further, it models all statements given simultaneously rather than being added in time, continuously modifying the context in which future statements are made. Nevertheless, the model underscores that minor variations in initial conditions can lead to substantially different outcomes—one decision over another. [59]
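The kind of relaxation described above can be illustrated with a small sketch. It is not the model reported in ROTH (2001); the four nodes, the link weights, the update rule (a clipped step along the net input, which lowers a simple network "energy"), and all names such as `build_weights` and `relax` are assumptions made purely for illustration. The sketch keeps the set of propositions fixed and varies only the emphasis with which they support a decision.

```python
# Illustrative sketch only: node names, weights, and update rule are assumptions,
# not the model published in ROTH (2001).
FUND, NOT_FUND, PRO, CON = range(4)   # two decisions, two hypothetical statements
START = [0.1, 0.0, 0.5, 0.5]          # committee mildly favors funding at the outset


def build_weights(pro_emphasis, con_emphasis):
    """Symmetric link matrix: positive = supporting, negative = contradictory."""
    w = [[0.0] * 4 for _ in range(4)]

    def link(i, j, strength):
        w[i][j] = w[j][i] = strength

    link(FUND, NOT_FUND, -1.0)         # the two decisions weaken each other
    link(FUND, PRO, pro_emphasis)      # favorable statement supports funding
    link(NOT_FUND, CON, con_emphasis)  # critical statement supports not funding
    return w


def energy(w, a):
    """Constraint 'height' of a state; relaxation moves toward lower values."""
    n = len(a)
    return -0.5 * sum(w[i][j] * a[i] * a[j] for i in range(n) for j in range(n))


def relax(w, start, rate=0.1, steps=200):
    """Repeatedly nudge every activation along its net input, clipped to [-1, 1]."""
    a = list(start)
    n = len(a)
    for _ in range(steps):
        net = [sum(w[i][j] * a[j] for j in range(n)) for i in range(n)]
        a = [max(-1.0, min(1.0, a[i] + rate * net[i])) for i in range(n)]
    return a


def decide(a, tol=1e-6):
    """Read the outcome off the two decision nodes."""
    if abs(a[FUND] - a[NOT_FUND]) < tol:
        return "stalemate"
    return "fund" if a[FUND] > a[NOT_FUND] else "not fund"


if __name__ == "__main__":
    # Same propositions, same starting point, different emphasis.
    for pro, con in [(1.0, 0.5), (0.5, 1.0), (1.5, 1.5)]:
        final = relax(build_weights(pro, con), START)
        print(pro, con, decide(final))
```

With these hypothetical values, the three runs start from the same mildly favorable position yet settle on funding, on not funding, and on a state in which both decisions are equally supported. The only thing that differs between runs is how emphatically the same two statements are linked to the decisions.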

|

In the meeting: At times, I feel ridiculous. The entire discussion has been in English or French, while the respective non- or poorly-speaking members evidently had attended to other things or given the impression of dozing off. Yet I am to formulate the consensus position: consensus of how many individuals? How many knew sufficiently about the file to make judgments about the "quality and significance of the published work," the "degree of originality and nature of expected contribution to the advancement of knowledge," or the "appropriateness of the theoretical approach or framework"? De facto, the decisions are based on the two readers' decision and consensus, whatever their own competencies vis-à-vis the application and the field. I nevertheless contribute in a way so that the occasional observer can note that the committee as a whole and I in particular have "followed the rules."9) [60] |

In this example of the committee meeting, it was not only the final product that emerged indeterminately from the complex and ever-changing interactions between the members of the committee; the trajectory of each discussion itself emerged indeterminately. The expression "laying a garden path in walking" may be the best description for our experiences as members on the committee. The notion of "indeterminate" here means that even after doing 140-odd files, we could not predict with any certainty either process or product of our conversations other than in rather encompassing and indistinct terms. [61]

An important "biasing" aspect of the direction a committee will take is the real or perceived expertise of the reader or committee member. In the case of real or perceived expertise, the committee decision will be close to the opinion and assessment of this reader. If real or perceived expertise lies with another committee member who was not the reader, this person, depending on the level of his or her engagement, can change the direction of the discussion to the point of getting a well-rated proposal into the category of un-fundable projects. In some situations, even proposals with high ratings by both readers and the two or three assessors might end up not being funded as a consequence of the influences within the committee. Thus, I found myself in situations where I had to assist the program officer in drafting the committee summary that would become part of the notification letter, particularly when there was the perception that the applicant might complain and file an appeal: "Should the committee make a negative recommendation contrary to the generally favorable recommendations of the assessors, the committee must take particular care to provide a clear rationale for that recommendation." (SSHRC 2000, p.17) [62]

|

Although my (i.e., Reader A's) recommendation as well as Reader B's recommendations had been high, we ultimately decided to give a score so low that the proposal was automatically excluded from funding, "to send a message to the researcher." More difficult to me, I was asked at times to articulate the "flaws" in the study and the committee consensus, although I had not been the chair at the time, but perhaps because I knew the application very well and was perhaps the only one to fully understand its complexities. It is a strange feeling to write a committee consensus that is opposite to your own thinking prior to the considerations. It is even more difficult to construct a consensus when some comments made during the deliberations have to be interpreted as "off the record" even though they were not marked so while they were made and other comments pertained to information that was not available in the proposal itself but to the deliberations about another of the applicant's research programs. [63] |

A most important issue in funding is systemic and systematic bias within the committee structure that excludes particular types and styles of research. While it was not apparent to me on the SSHRC committees on which I served, I have noticed bias on the part of members of certain disciplines (psychology, cognitive science, computer science) for experimental designs and against qualitative research designs and ethnographic research. The same type of bias has been reported to me to occur, for example, on the SSHRC panel evaluating and adjudicating psychological research; according to my informant, qualitative research stood little to no chance of receiving funding because there was a systemic bias against this form of investigation. Finally, many German colleagues in science education (Didaktik der Naturwissenschaften) told me about the difficulty of getting funding for research with qualitative designs, which they explained (we can take them as lay sociologists) by the predominance of experimental and quantitative psychologists on the DFG panels. One German psychologist, influential because he holds key gatekeeper positions (LATOUR [1987] calls them obligatory points of passage) at DFG, has told me repeatedly that my own research program, though clearly successful on an international scale, would not be funded by his agency. [64]

5. Shoring the Agency from Appeals

5.1 Justifying committee recommendations

In an attempt to maintain fairness in the peer review process, the Social Sciences and Humanities Research Council of Canada established an appeal procedure that provides applicants an opportunity to seek reconsideration of a decision. Whether this procedure constitutes a true opportunity or simply pays lip service to fairness is, of course, a matter of the appeal as a practical accomplishment rather than a matter that can be decided through theoretical considerations. The first step in dealing with potential appeals begins in the adjudication meeting, where the chair of the committee is asked to provide a summary that reflects the "committee decision." [65]

Regardless of whether there is actually a chance of overturning a committee decision, and perhaps in order to veil and cover up the insufficiencies of the process, the Council, at least on paper, attempts to provide researchers with information on which to ground the appeal.

"It is essential for SSHRC to be able to provide the applicant with a clear, reasonable and sufficient explanation for the adjudication committee's recommendation and the Council's final decision. This is crucial for applications refused on merit and for applications that the committee judges meritorious but which cannot be funded. The recommendations also provide important feedback and encouragement to applicants, serving as a developmental instrument for future research proposals ..." (SSHRC 2000, p.19) [66]

(Interesting discursive move that the "Council" provides the feedback, whereas in other circumstances, it is the program officer. Perhaps this is useful on the inside. It is a Council decision as long as things go right, but it becomes the program officer decision when things go wrong—he or she can be fired. [The precariousness of the officers and the insecurities of their status because of constant renewal of contracts has been communicated to me by more than one program officer.]) [67]

In actual fact, the comments to the researchers are, in most instances, very brief. In my own case, the crucial assessment of my record of achievement was barely more than a two-liner: "Members found the candidate's record quite respectable, though it noted Assessor 8's concern that some of his research papers appeared to be somewhat repetitive. The committee found the supporting document of good quality." Do these two sentences constitute "clear, reasonable and sufficient explanation for the adjudication committee's recommendation"? In particular, do they provide sufficient grounds to decrease the assessment score of a researcher's record from 9 (out of 10) to 7.45, when he has published in the intervening period five books, 45 articles, and 15 chapters? The Council guidelines for the adjudication process point out that sufficient explanation is especially "crucial for applications refused on merit and for applications that the committee judges meritorious but which cannot be funded." Does such a brief statement really serve as the "developmental instrument for future research proposals"? How am I to develop, even if the assessor's charge that my papers are repetitive were true? The previously quoted colleague had this to say:

"The committee is especially obliged by the standards of scholarly peer review to provide open and clear reasons for why it recommended such a severe reduction. Instead, the Committee provided one, 18-word sentence: 'It judged that savings could be made in the proposed expenditures for personnel, travel, supplies, and equipment.'" (Scholar's Letter to SSHRC, p.2) [68]

On paper at least, the agency appears to have a process that can be appealed. The policy states that committees should provide sufficient feedback about their deliberations, which could be used in an appeals process. Whereas in my NSF experience, researchers received feedback of considerable length, written by the first (Reader A) of four readers, the actual feedback provided by SSHRC to Canadian researchers is (ridiculously?) short. Although the Council policy posits "clear, reasonable and sufficient explanation," in actual practice, the feedback often is neither clear nor reasonable nor sufficient. [69]

My account thus far has already articulated features of the adjudication that can be counted as mediating factors that lead to insufficient feedback from the agency. The program officer constructs this feedback during the month following the meeting from notes taken during the meeting and, particularly, on the basis of the chair's consensus statement at the end of a discussion. The officer is not a specialist in the adjudicated field and therefore does not necessarily understand the arguments put forward during the discussion. The task is made more difficult because the notes are constructed with some delay (during the weeks after the meeting), not during the meeting pauses and at the end of each day as we had done on NSF panels, where the notes were completed and ratified by the other readers before the meeting was adjourned. Finally, the sheer quantity of feedback notes that officers have to construct further mediates the quality of the feedback. [70]

5.2 Constraining and limiting appeals

In the appeals process, the program officer attempts to get as many committee members as possible to agree to a telephone conference. The original file and the letter in which an applicant files the appeal are mailed to the members who agreed to read the proposal and the appeal, and to contribute to the conference discussion. [71]

The Council has constructed the appeals procedure such that it is very difficult if not impossible to get a decision revoked. These barriers are built (a) into the procedure and (b) into the fact that the committee deciding on the appeal is the same as the committee that took the original decision. [72]

|

Pertaining to my own appeal, an insider offered the following advice: "I am suggesting to you to wait for the committee's comments and to resubmit your project next year to one of the two education committees. It doesn't help at all to appeal, because you won't have valid grounds to appeal. In any case, it won't help you." It doesn't help at all to appeal because I won't have valid grounds? Was it meant to tell me that the process is inherently designed to stabilize the initial decision that a committee had taken? [73] |

First, the SSHRC Manual (2000, p.7) states, "Decisions may be appealed on the following grounds: where there has been an administrative or procedural error in the adjudication process; or where the decision is based on a factual error." The two types of errors are subsequently explained.

"Procedural error includes any departure from the Council's policy regarding undeclared conflict of interest or a failure to provide prescribed information to the adjudication committee ...

Factual error exists where there is compelling evidence that the committee based its decision not to recommend an award on a conclusion which is contrary to information clearly stated in the application. An example of such an error would be a committee statement that an application was not recommended due to the applicant's lack of any peer-reviewed publications, where in fact, the application lists several publications in media universally acknowledged to be peer-reviewed." (SSHRC 2000, p.7; also available at URL: http://www.sshrc.ca/web/apply/policies/appeals_e.asp) [74]

But the appeals document then continues:

"The Council will not accept appeals where the committee, though it could be in error in interpreting the proposal and any assessments, has made a reasonable attempt to judge fairly the merit of an application. Nor is the appeal process intended to deal with differences of scholarly opinion among applicants, adjudication committees and external assessors." (http://www.sshrc.ca/web/apply/policies/appeals_e.asp) [75]

Thus, although there may be errors, the Council—or rather, the small network of people making the relevant decision—does not have to accept appeals on the grounds of error. Here, again, the appeal is a fluid object and though there might be evidence that errors have been committed, the appeal is pathological itself, insufficient to bring about a change in the original decision. "Nor is the appeal process intended to deal with differences of scholarly opinion among applicants, adjudication committees and external assessors"—thus if an assessor's statement "Many of his papers are repetitive, and he borrows extensively from others' more original contributions" is interpreted as a matter of scholarly opinion, the applicant has no grounds for appeal. [76]

|

I am on the telephone participating in a conference call to deal with several appeals for which the program director has decided that a reconsideration of the file is in order. I recognize the voices of the program officer and those of several other members of the committee. (Is this surprising after we had been cooped up for a week from 8:30 a.m. to 6 p.m.?) In each case, we begin talking about the letter of appeal and then return to the original application to check whether we had erred. In talking, we seem to warm up. Our conversation takes the same tone that it had several months prior; we begin to use the same arguments. It is as if we are transported back in time to the same windowless room in the basement of the hotel. We increasingly convince ourselves that we had not erred: did we not come to the same conclusion again? And was the appeal not flawed in the same manner as the original proposal? [77] |

Second, the process is inherently biased against the applicant because the very same committee (or parts of it) that made the original decision evaluates the appeal. Whereas the legal system has another level where appeals are considered by a different panel, and other aspects of society make use of an ombudsperson, the funding agency takes appeals to the same committee, which then deliberates whether it erred in the first instance. Curiously, therefore, the committee deliberates whether it has erred though the Council states that it will not accept appeals, even if the committee had erred. This provides opportunities for rejecting appeals based on a factual error in initial deliberations, as long as the committee comes to the conclusion that it "has made a reasonable attempt to judge fairly the merit of an application." [78]

The managers of the SSHRC program do not have to go without sleep. They may not know the details of the actual evaluation and adjudication, in which case they are not doing their job; worse, they may know the inadequacies of the process but not do anything about them.

"I was sorry to learn that your proposal was not funded. I sincerely hope that this will not affect your confidence in the peer review process. How could it, given your personal involvement and your important contribution to this process? I suggest that you first read the comments." (Program Director, May 14, 2002) [79]

|

It is because of my involvement in all parts of the review process, not only in this but also in other countries, that I am not confident in the peer review process (e.g. ROTH 2002). I have seen and experienced the injustice that the peer review process brings with it; it is time to do something about it. As a consolation, I am provided with two more options to deal with an assessment. First, the Council recommends, "In addition to the appeal process, applicants may address differences of opinion with assessors and the committee in a subsequent application" (see http://www.sshrc.ca/web/apply/policies/appeals_e.asp). I am reminded of the recommendations that my friend made: read the comments and reapply. The friend said that I would not stand a chance of overturning a decision by appealing, given the past history of the processes at the Council. [80] |

Second, the procedures also provide me, as all applicants, with the following opportunity:

"Applicants may also submit with their application a letter to the program officer naming potential assessors who, in their opinion, would not be likely to provide an unbiased review. Applicants must provide a justification for excluding potential assessors. While the Council cannot be bound by this information, it will take it into consideration in the selection of external assessors." (http://www.sshrc.ca/web/apply/policies/appeals_e.asp) [81]

|

For a scholar who has published a lot and who has taken a strong stance on many issues, it is virtually impossible to know, let alone list, those who might provide biased reviews. Personally, I can list, for example, two such individuals, because they have written me acrimonious letters in the past, usually in their function as journal editor. (For an actor-network analysis of some of the correspondence and a corresponding institutional analysis of the publish-or-perish climate in the North American scholarly community see ROTH 2002.) Who provided the false, acrimonious and unprofessional review of my proposal is known only to the program officer, who knows neither the field of science education that the assessor referred to, nor my stature and status, nor the relation of my research to that of the mainstream. "Applicants have full access ... with the exception of the name of the assessor." I am the only person who, other than the assessor, could evaluate whether there is a bias based on past conflict; and I am the one person prevented from accessing the information and thereby from preventing future injustice. [82] |

In this paper, I articulate some of the micro-processes in the evaluation and adjudication of proposals to funding agencies, and the idiosyncrasies and problematic issues that can arise. Unfortunately, whereas the Canadian SSHRC is open to cosmetic changes, it leaves researchers with little opportunity to appeal bias. The proposal and the assessors' reviews are fluids whose identities depend on the context so that the funding agency has in fact shored itself against any appeal. The program officers and directors and the president of the agency can go to sleep in peace every night feeling that the system that they have is the best that we can have. Though there might be differences and errors, everything averages out. And nothing is lost as the applicant can always reapply during the following year. The directors and program officers refer to the possibility to reapply and to have different evaluators, apparently even in the event that a biased and incomprehensible evaluation has been made. There is no recourse—a benefit to the agency, its directors and officers, a detriment to the individual researcher who has been hurt. [83]

Working on this text has made salient many questions in my mind, theoretical and empirical ones. In addition to the questions that opened this article, I am posing some in the following list without pre-supposing that the order is a statement about the importance of each question or set of questions.

From which or whose perspectives (proposal author, reviewer, evaluating committee member, committee chair, program officer, program chair, proposal author's superiors, lawyers, etc.) will we gain particular insights? Which or whose perspectives are of most practical relevance to the different actors in different parts of the network? What gains can be made if we take the perspectives of various non-human actors?

If there are multiple suitable perspectives, how do these relate to one another? Are there dominant perspectives and if so, at the cost of which other perspectives? Can there be multiple incompatible perspectives or should we seek to triangulate a "common" perspective? What are some reasonable or interesting solutions to the incompatibility-of-perspectives problem?

How should a social phenomenon such as funding be theorized? What advantages are there in taking, for example, a grounded theory approach (STRAUSS & CORBIN 1990) versus making explicit one's ontology, such as I have done here?

Doing ethnography of one's own community, especially if it is of an agent that can withhold certain resources and therefore is in a power-over relation, comes both with risks (to the author) and opportunities (e.g., changes in praxis). What processes can be set in place so that such ethnographies are possible without punitive measures from those at obligatory passage points who have the power to withhold valued and desirable resources? Do we have to play the game, shut up, and thereby leave unjust practices intact so that we can cull the resources? What are, or ought to be, the roles of more prominent scholars in changing our practices and communities?

How similar/different are the funding-decision processes across different agencies? How similar/different are the processes across countries or continents? [84]

1) It is evident that ethics and professionalism prevent me from naming individuals (applicants, assessors, program officers, program directors) or particular files. It should also be evident that I put myself in difficult and dangerous waters (see the fluid analogy used below) by doing such an investigation that turns up the problems in the evaluation and adjudication process of the one agency that funds social science research in my area.

2) Even mereology, the study of wholes and their parts, wrestles with difficult if not undecidable problems. What is the color of the boundary that runs precisely through the middle of a disk, symmetrically segmenting it into two segments one of which is red, the other green? SMITH (1997) provides the beginning of an answer to this question.

3) I once served as the chair of a committee in which all proposals were funded. The committee meeting therefore was a farce, because it was evident from the way the program officer interfered with the meeting that normal peer review processes were not followed and all proposals would be funded.

4) This is the content of KAFKA's parable of the gatekeeper in the novel The Trial. A man from the countryside comes to the city gate (door of the Law) and yet is denied admittance by the gatekeeper. No reason is given for his denial, and seemingly the man has no reason to stay, and yet he sits down and waits. In this situation, the gatekeeper had no power whatsoever over the man until the man began waiting for the gatekeeper to let him in.

5) I show below that the appeal structure is biased against the applicant who desires to appeal; people who work in the agency, like KAFKA's gatekeeper, say that it is not worth appealing because it does not get you anywhere.